Physics of Language Models is a research concept for language models proposed by Zeyuan Allen-Zhu of FAIR (Meta). Roughly speaking, the idea is that instead of accumulating natural-history-style knowledge such as "that model behaves like this" or "this model is more such-and-such than that one", we should pursue principled research of the kind Kepler and Newton carried out in physics in the 17th century, so that we become able to answer the question "why do language models behave the way they do?"

Physics of Language Models has two main characteristics.

The first is training language models on tightly controlled datasets rather than on corpora collected from the web. The web is so complex that nobody grasps the whole picture, and it is full of noise. In real physics, too, air resistance and friction lead to mistaken beliefs such as "an iron ball falls faster than a feather". To discover principles, the right approach is to run experiments in a vacuum. Noisy web data may likewise have a harmful effect on language models, much like air resistance. Physics of Language Models therefore puts training language models "from scratch" on "controlled datasets" first. For example, as introduced later, one experiment creates biographies of 100,000 fictional individuals, trains a language model on those alone, and asks what the model can say about these individuals.

The second is investigating the internal states of the language model to find out at what point, and in which layer, it became able to do what. The string a language model outputs is only part of what the model "thought". Looking only at the surface of the output does not tell us why the model behaved that way. For this reason, Physics of Language Models goes into the model's internal states and analyzes them in fine detail. For example, as introduced later, when a language model is given a multi-step arithmetic problem and makes a mistake at an intermediate step, in many cases the model itself has in fact noticed at that step that it made a mistake. Even so, the model has to keep producing output, so it fluently continues to the end while knowing that it is heading for a wrong answer, and then, naturally, gets the final conclusion wrong. The aim is to gain deeper insight by examining the internals, rather than stopping at an output-level understanding of whether the answer was right or wrong.

This article introduces the basic ideas of Physics of Language Models and explains in detail the series of studies that make it up, consisting of six papers. Reading them should deepen your understanding of the principles behind language model behavior and also give you a good deal of practical insight into training language models.

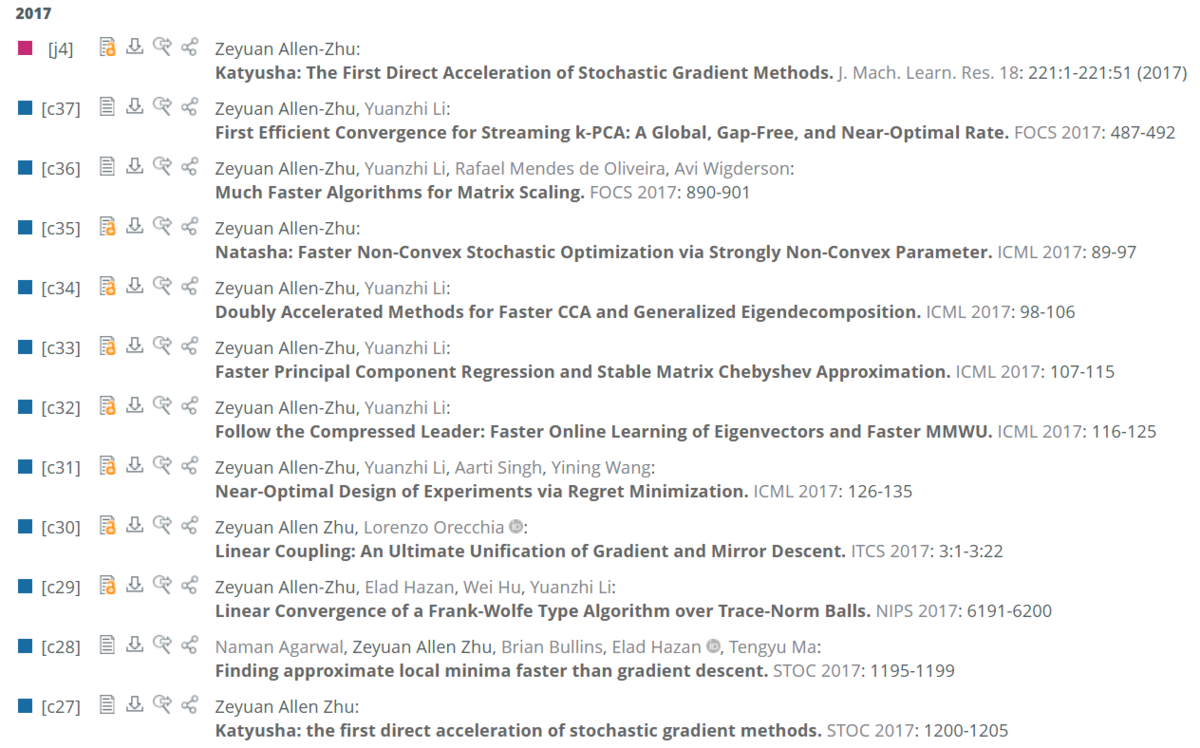

As a side note, the proposer Zeyuan Allen-Zhu is well known as one of the authors of (that) LoRA paper, but he is also a super-researcher whose record includes making a name for himself in competitive programming, with two gold medals at the International Olympiad in Informatics and second place at the ACM-ICPC World Finals, and having five first-author papers accepted at ICML in a single year while also publishing at FOCS and STOC.

Table of Contents

- Physics of Language Models

- Physics of Language Models: Part 1, Learning Hierarchical Language Structures (arXiv 2023)

- Physics of Language Models: Part 2.1, Grade-School Math and the Hidden Reasoning Process (ICLR 2025)

- Physics of Language Models: Part 2.2, How to Learn From Mistakes on Grade-School Math Problems (ICLR 2025)

- Physics of Language Models: Part 3.1, Knowledge Storage and Extraction (ICML 2024)

- Language models need to see some questions during pretraining

- Language models become able to answer questions with data augmentation

- Without data augmentation, language models cannot extract information until all of the information is in place

- Data augmentation for only some of the individuals is enough for language models to store information well

- Physics of Language Models: Part 3.2, Knowledge Manipulation (ICLR 2025)

- Physics of Language Models: Part 3.3, Knowledge Capacity Scaling Laws (ICLR 2025)

- Conclusion

Physics of Language Models

A brief explanation of Physics of Language Models was given at the beginning of this article. The project page carries the following statement.

Apples fall and boxes move, but universal laws like gravity and inertia are crucial for technological advancement. While GPT-5 or LLaMA-6 may offer revolutionary experiences tomorrow, we must look beyond the horizon. Our goal is to establish universal laws for LLMs that can guide us and provide practical suggestions on how we can ultimately achieve AGI.

Despite the name, "Physics of Language Models" does not mean applying mechanics or electromagnetism to language models. It means something like "research aimed at finding universal laws in language models, akin to the laws of physics". The name of the concept is a little confusing. A freer Japanese rendering might be something like 「言語モデルの窮理学」 (roughly, "the natural philosophy of language models"). Zeyuan Allen-Zhu himself writes it as 「语言模型物理学」 in Chinese, so this article follows suit and uses the literal term "Physics of Language Models".

At the beginning of this article, I said that the first characteristic is training language models on tightly controlled datasets rather than on corpora collected from the web. Alongside this, the Physics of Language Models project page criticizes the current trend of putting so much weight on benchmarks. In recent years, new benchmark scores are set every month, every week, almost every day, and are loudly advertised. But is that really what matters? Can it really be called progress? Benchmarks are destined to go stale over time. The more famous a benchmark becomes, the more mentions, explanations, and concrete examples of it appear on the web. The answers to the test data may even end up on some website. It is only natural that a new model that has ingested all of this solves the benchmark well. Careful experiments do filter the test data out of the training data, but items that were translated into another language, or whose formulas were described in words, may slip through the filter. Such leakage cannot be prevented completely, and the amount of it grows over time. The web is so complex that nobody grasps the whole picture, and it is full of noise. Nobody knows in what way this noise affects benchmarks. Physics of Language Models takes this concern seriously and reliably avoids the problem by using tightly controlled datasets instead of corpora collected from the web.

For the second characteristic, investigating the internal states of the language model, the main techniques are linear probing and low-rank probing. Linear probing examines which tasks can be solved by a linear model that takes the internal states as input. Low-rank probing examines which tasks can be solved by adding a low-rank component (so-called LoRA) while the model's main parameters are kept frozen. If such a simple auxiliary model can solve a task, we take it that the model has already solved that task internally. The word "mentally" appears frequently in the Physics of Language Models papers. This article likewise uses expressions such as "the model solves the task in its head" and "the model knows it in its head". For example, as mentioned at the beginning, if we extract the internal state at an intermediate point while the model is solving an arithmetic problem and feed it to a linear model, we can classify with high accuracy whether or not the model has made a mistake. This means that whether a mistake has been made is encoded in the internal state in an easily extractable form (one that even a linear model can read out), which in turn means that the model notices, in its head, whether it has made a mistake. When people hear about what goes on in a model's head, many may think of chain of thought (Chain of Thought, such as the intermediate output of DeepSeek-R1 or OpenAI o1). Probing, however, is different from chain of thought. Chain of thought is, so to speak, thinking out loud, and it is only a small part of the model's thinking. Probing lets us see what the model is thinking without saying it out loud. As we will see in detail below, language models are in fact thinking about many things in their heads beyond what they say out loud.
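To make linear probing a little more concrete, here is a minimal sketch in plain Python. Everything in it is an assumption made for illustration: the "hidden states" are synthetic vectors in which a binary property (think "made a mistake" vs. "did not") is encoded along one coordinate, and the probe is a simple perceptron, not the classifier used in the papers. The only point is that when a property is linearly encoded, a linear model placed on top of frozen states can read it out.

```python
import random

def make_hidden_states(n, dim=4, seed=0):
    """Synthetic stand-in for frozen hidden states (hypothetical data).
    The label (+1 / -1) is encoded along coordinate 0; the rest is noise."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        label = rng.choice([-1, 1])
        h = [label * (1.0 + rng.random())]                     # signal coordinate
        h += [rng.uniform(-1.0, 1.0) for _ in range(dim - 1)]  # noise coordinates
        data.append((h, label))
    return data

def train_linear_probe(data, epochs=20):
    """Perceptron: a linear model trained on top of the frozen states."""
    dim = len(data[0][0])
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        for h, y in data:
            score = sum(wi * hi for wi, hi in zip(w, h)) + b
            if y * score <= 0:  # misclassified: nudge the separating hyperplane
                w = [wi + y * hi for wi, hi in zip(w, h)]
                b += y
    return w, b

def probe_accuracy(data, w, b):
    """Fraction of states whose label the linear probe recovers."""
    correct = 0
    for h, y in data:
        score = sum(wi * hi for wi, hi in zip(w, h)) + b
        correct += (1 if score > 0 else -1) == y
    return correct / len(data)

states = make_hidden_states(200)
w, b = train_linear_probe(states)
train_acc = probe_accuracy(states, w, b)
print(f"probe accuracy on the synthetic states: {train_acc:.2f}")
```

With real models the states would come from a chosen layer and token position, and the probe would be evaluated on held-out examples; this sketch skips both to stay short.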

As of March 2025, the Physics of Language Models project has published six papers. They are organized into three broad parts: Part 1 is named Hierarchical Language Structures, Part 2 Grade-School Math, and Part 3 Knowledge. Each paper can be read on its own, but the concept described above runs through the whole series as a common thread.

Each paper is explained below.

Physics of Language Models: Part 1, Learning Hierarchical Language Structures (arXiv 2023)

As the title says, this paper is about learning hierarchical language structures.

This is actually the most difficult paper in the Physics of Language Models series, and I suspect it is the reason people who set out to learn about the series give up. I will try to keep the explanation as plain as possible, but the content of this paper does not affect the later sections, so if you find it hard, feel free to skip this section and move on.

The main result of this paper, in one sentence, is that language models can accurately learn complex context-free grammars. And in their heads, language models solve this problem by making full use of dynamic programming.

Among the ideas of Physics of Language Models, this paper can be said to push the first characteristic, "training language models on tightly controlled datasets", to its limit. A corpus is generated according to a context-free grammar, a set of well-controlled rules (even if they may not be perfectly realistic), and a language model is trained from scratch on it.

Problem setting

A context-free grammar defines a class of formal languages whose strings are generated by starting from the symbol [root] and repeatedly applying substitutions according to production rules such as the following.

- [root] → [sentence]

- [sentence] → [noun phrase] [verb phrase]

- [noun phrase] → [article] [noun] | [proper noun]

- [verb phrase] → [verb] [noun phrase]

- [article] → "the" | "a"

- [noun] → "cat" | "dog" | "saw" | "telescope"

- [verb] → "saw" | "loved"

- [proper noun] → "John" | "Mary"

With this grammar, for example, sentences such as the following can be generated.

- [root] → [sentence] → [noun phrase] [verb phrase] → [article] [noun] [verb] [noun phrase] → [article] [noun] [verb] [article] [noun] → the cat saw a dog

- [root] → [sentence] → [noun phrase] [verb phrase] → [proper noun] [verb] [noun phrase] → [proper noun] [verb] [proper noun] → Mary loved John

- [root] → [sentence] → [noun phrase] [verb phrase] → [proper noun] [verb] [noun phrase] → [proper noun] [verb] [article] [noun] → John saw a saw
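The generation process above can be sketched in a few lines of Python. This is only an illustration for the toy grammar in this article: the dictionary layout, the identifier-style nonterminal names, and the uniform choice among alternatives are my own assumptions, not the sampling procedure used in the paper.

```python
import random

# Toy grammar from the text: each nonterminal maps to a list of alternatives.
GRAMMAR = {
    "root": [["sentence"]],
    "sentence": [["noun_phrase", "verb_phrase"]],
    "noun_phrase": [["article", "noun"], ["proper_noun"]],
    "verb_phrase": [["verb", "noun_phrase"]],
    "article": [["the"], ["a"]],
    "noun": [["cat"], ["dog"], ["saw"], ["telescope"]],
    "verb": [["saw"], ["loved"]],
    "proper_noun": [["John"], ["Mary"]],
}

def generate(symbol, rng):
    """Expand `symbol` recursively; anything that is not a key is a terminal."""
    if symbol not in GRAMMAR:
        return [symbol]
    production = rng.choice(GRAMMAR[symbol])  # pick one alternative uniformly
    tokens = []
    for child in production:
        tokens.extend(generate(child, rng))
    return tokens

rng = random.Random(0)
samples = [" ".join(generate("root", rng)) for _ in range(5)]
for s in samples:
    print(s)
```

Only the resulting token strings would be shown to the language model; the rules in `GRAMMAR` themselves never appear in the training data.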

There are two important features.

The first is ambiguity. As in the example above, saw can play two roles: a verb (past tense of see) and a noun (the cutting tool). Looking only at the output token saw does not tell us which role it plays.

The second is long-range dependency. The example above is too simple for this to arise, but suppose there are rules such as [noun phrase] → "the" [noun] "that" [verb phrase] together with rules for present tense and plural forms. After generating the cats that saw a dog that loved a telescope, whether the next token may be "jump" or "jumps" cannot be decided without checking whether the head of the long clause is singular or plural. (Answer: cats is plural, so the model must not output jumps and should output jump.)

These features also exist in real languages, and context-free grammars isolate them in a pure form. The experiments confirm that language models can reliably handle both ambiguity and long-range dependencies.

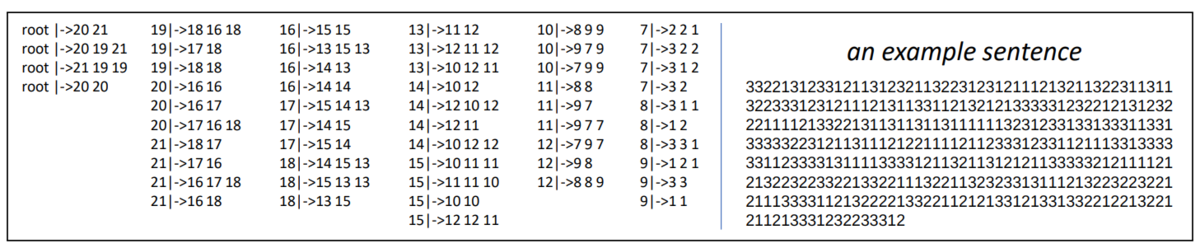

The paper uses a fairly complex context-free grammar. It is highly ambiguous and has long-range dependencies.

Following this grammar, 9.6 million texts of length 512 are generated at random, and a language model is trained on them from scratch with next token prediction for one epoch. Note that the language model is never directly shown any knowledge about the grammar. The strings generated from the grammar are simply fed in as training data.

At test time, the trained language model is asked to generate text from scratch, or it is given the first part of a string newly generated from the grammar and asked to generate the continuation.

Language models can accurately learn complex context-free grammars

Text generated from scratch by a language model trained this way was almost always (with probability of 99% or more) grammatically correct. The generated text was also confirmed to be sufficiently diverse, with a small KL divergence from the "true probability distribution". It is important to check diversity and distributional distance, not just grammatical correctness. For example, in the case of the cats that saw a dog that loved a telescope above, simply outputting the past tense jumped avoids the singular/plural question altogether and still yields a grammatical sentence. But that would not count as having learned the grammar correctly. By examining the distributional distance, we can confirm that the language model really has learned every pattern of the grammar correctly, without dodging any of them. The distance from the "true probability distribution" cannot be computed for real-world data, so being able to check it is an advantage of having "trained the language model on a tightly controlled dataset".
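The jumped example can be turned into a tiny numerical illustration of why distributional distance matters. The distributions below are made-up numbers for a single next-token choice, not values from the paper: both "models" only ever produce grammatical continuations, yet the one that dodges into the past tense sits far from the true distribution.

```python
import math

def kl_divergence(p, q):
    """KL(p || q) for two distributions given as dicts over the same outcomes."""
    return sum(p[x] * math.log(p[x] / q[x]) for x in p if p[x] > 0)

# Hypothetical next-token distributions after "... a telescope" (made-up numbers).
true_dist = {"jump": 0.5, "jumped": 0.5}
evasive_model = {"jump": 0.01, "jumped": 0.99}   # almost always dodges to past tense
faithful_model = {"jump": 0.5, "jumped": 0.5}    # matches the grammar's distribution

kl_evasive = kl_divergence(true_dist, evasive_model)
kl_faithful = kl_divergence(true_dist, faithful_model)
print(kl_evasive, kl_faithful)
```

A grammaticality check alone would rate both models as perfect; the KL term is what separates them.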

Language models use dynamic programming in their heads

Next, the investigation of internal states.

First, linear probing on the internal states can accurately recover the nonterminal symbol (such as [noun phrase] or [verb phrase]) that each output token derives from. In other words, the language model outputs ambiguous tokens such as "saw", but in its head it knows whether the token is a noun or a verb when it outputs it. To repeat, note that the language model is never directly shown any knowledge about the grammar. Merely training a language model on raw text with next token prediction is enough for it to acquire, in its head, grammatical structures such as "noun" and "verb". This, too, is an advantage of having "trained the language model on a tightly controlled dataset". The web contains explanations of grammar, so a language model might have learned grammar from those. The controlled experiment shows that this is not necessary: the model can acquire grammar in its head from raw text alone, with the grammar never stated explicitly.

There were also indications that language models use dynamic programming in their heads. The details are involved, so I will keep the explanation brief. When a context-free grammar is analyzed by a rule-based method, dynamic programming can be used to parse strings or to generate them token by token. This works by reasoning in the opposite direction to the grammar's substitutions. In the cat saw a dog, if tokens 1 to 2 (the cat) form a noun phrase and tokens 3 to 5 (saw a dog) form a verb phrase, then together tokens 1 to 5 form a sentence. In this way, spans are merged into larger and larger units. What matters here is being able to recognize which spans can be merged with which. An analysis of the trained language model showed significantly strong attention from the right end of one mergeable span to the right end of the other, for example from dog to cat in [the cat] [saw a dog]. Computing which spans can be merged is not trivial, but the language model does compute it properly in its head. In addition, the language model generates tokens while keeping track, in its head, of the other information needed for dynamic programming to run. There are countless ways to implement dynamic programming, so we cannot say for certain that the language model is running dynamic programming, but (at least to someone who knows dynamic programming for context-free grammars) its behavior was observed to be quite close to it. Once again, note that the language model is never directly shown any knowledge about the grammar. Merely training a language model on raw text with next token prediction is enough for it to acquire dynamic programming in its head.
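For readers who have not seen dynamic programming for context-free grammars, the span-merging idea can be sketched with the classic CYK algorithm on the toy grammar from the problem setting. This is a textbook-style illustration under my own encoding (identifier-style nonterminal names, the [noun phrase] → [proper noun] rule folded into the lexicon); it is not a claim about the exact computation the language model performs.

```python
# Binary rules (B, C) -> A and lexical rules word -> {A, ...} of the toy grammar.
# The unit rule [noun phrase] -> [proper noun] is folded into the lexicon.
BINARY = {
    ("noun_phrase", "verb_phrase"): "sentence",
    ("article", "noun"): "noun_phrase",
    ("verb", "noun_phrase"): "verb_phrase",
}
LEXICON = {
    "the": {"article"}, "a": {"article"},
    "cat": {"noun"}, "dog": {"noun"}, "telescope": {"noun"},
    "saw": {"noun", "verb"},          # ambiguous token
    "loved": {"verb"},
    "John": {"proper_noun", "noun_phrase"},
    "Mary": {"proper_noun", "noun_phrase"},
}

def cyk(tokens):
    """table[i][j] = set of nonterminals that can derive tokens[i:j]."""
    n = len(tokens)
    table = [[set() for _ in range(n + 1)] for _ in range(n + 1)]
    for i, tok in enumerate(tokens):
        table[i][i + 1] = set(LEXICON.get(tok, ()))
    for width in range(2, n + 1):
        for i in range(n - width + 1):
            j = i + width
            for k in range(i + 1, j):          # try every split point
                for left in table[i][k]:
                    for right in table[k][j]:
                        parent = BINARY.get((left, right))
                        if parent:
                            table[i][j].add(parent)   # merge two adjacent spans
    return table

def is_grammatical(text):
    """True if the whole token sequence can be derived as a sentence."""
    tokens = text.split()
    return "sentence" in cyk(tokens)[0][len(tokens)]

print(is_grammatical("the cat saw a dog"))
print(is_grammatical("cat the saw"))
```

The table entry for the span saw a dog contains only the verb phrase label, which is how the algorithm resolves the noun/verb ambiguity of saw from context.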

Language models trained on noisy data become robust to noise

In the experiments so far, the training data followed the grammar perfectly. Real data, however, contains noise and does not always follow correct grammar. The next experiments take this into account.

A language model trained only on data that follows the grammar perfectly is weak against noise. Take a test string x that follows the grammar perfectly, extract its first part x[:50], randomly replace some of its tokens to create a noisy input x'[:50], and have the language model generate the continuation. If we concatenate that output with the first part before noise was added and inspect x[:50] + output, it does not follow the grammar. Intuitively, when asked to continue a text with small grammatical slips, such as a missing article or a singular/plural error, the model does not sensibly guess and patch over the mistake. Instead it gets confused and produces a wrong continuation.

Next, noise is added to part of the training data (for example, 15% of the data), and a language model is trained from scratch.

A language model trained on noisy data is robust to noise at test time as well. Repeating the same experiment, x[:50] + output was now grammatically correct with high probability (99% or more). However, getting the model to always output correct grammar requires a low temperature. With the temperature kept the same as during training, the model "correctly" memorizes how to output noise. Specifically, given a noise-free test input it always generates a grammatically correct continuation, given a noisy test input it always generates a grammatically incorrect continuation, and when generating from scratch it produces ungrammatical sentences at the rate of noisy examples in the training data (for example, with probability 0.15) and grammatical sentences at the rate of clean examples (for example, with probability 0.85). This is natural enough, since next token prediction trains the model to have exactly that output distribution. When the temperature is lowered, the model generates noise-free sentences in all of these cases.
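The effect of temperature can be illustrated with a small calculation. This is a deliberately simplified sketch: it treats "clean vs. noisy" as a single two-way choice with the 0.85 / 0.15 split from the example, whereas a real model makes such choices token by token, and the low temperature of 0.1 is an arbitrary value chosen for illustration.

```python
import math

def apply_temperature(probs, temperature):
    """Rescale a distribution as softmax(log(p) / temperature)."""
    scaled = [math.log(p) / temperature for p in probs]
    m = max(scaled)                               # subtract max for numerical stability
    weights = [math.exp(s - m) for s in scaled]
    total = sum(weights)
    return [w / total for w in weights]

# Hypothetical two-way choice: a clean continuation vs. a noisy one.
learned = [0.85, 0.15]
at_training_temperature = apply_temperature(learned, 1.0)
at_low_temperature = apply_temperature(learned, 0.1)   # 0.1 is an assumed value
print(at_training_temperature, at_low_temperature)
```

At temperature 1 the learned split is reproduced as is, while at the low temperature almost all of the probability mass moves to the majority (clean) option.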

The practical lessons from this are as follows.

- To build a noise-robust language model, it is important to include noisy data in the training data

- To make the model generate only correct sentences, it is important to lower the temperature at generation time

Intuitively these are obvious, but what matters is that findings which until now had only been verified in an ad hoc way were confirmed in the style of Physics of Language Models, that is, through controlled experiments in a controlled setting.

Physics of Language Models: Part 2.1, Grade-School Math and the Hidden Reasoning Process (ICLR 2025)

As the title says, this paper is about grade-school math and the hidden reasoning process.

The main result of this paper, in one sentence, is that language models solve math problems through a process slightly different from that of humans.

For this paper, a new corpus of synthetic grade-school-level math problems was created. Here is an example of a problem statement.

The number of Film Studios at Riverview High is 5 times the sum of "the number of Backpacks in a Film Studio" and "the number of School Bags in a Dance Studio". The number of School Bags in a Film Studio is 12 more than the sum of "the number of Messenger Bags in a Film Studio" and "the number of Film Studios at Central High". The number of Film Studios at Central High equals the sum of "the number of School Bags in a Dance Studio" and "the number of Messenger Bags in a Film Studio". The number of Dance Studios at Riverview High equals the total of "the number of Backpacks in a Film Studio", "the number of Messenger Bags in a Film Studio", "the number of School Bags in a Film Studio", and "the number of Backpacks at Central High". The number of School Bags in a Dance Studio is 17. The number of Messenger Bags in a Film Studio is 13. How many Backpacks are there at Central High?

The answer with chain of thought looks like this.

Define the number of School Bags in a Dance Studio as p. So p = 17. Define the number of Messenger Bags in a Film Studio as W. So W = 13. Define the number of Film Studios at Central High as B. So B = p + W = 17 + 13 = 7. Define the number of School Bags in a Film Studio as g. Since R = W + B = 13 + 7 = 20, g = 12 + R = 12 + 20 = 9. Define the number of Backpacks in a Film Studio as w. So w = g + W = 9 + 13 = 22. Define the number of Backpacks at Central High as c. So c = B × w = 7 × 22 = 16. Answer: 16

The solution says 17 + 13 = 7, but this is not a typo. In this dataset, all calculations are done mod 23. This prevents needlessly large values from appearing, and the arithmetic from becoming needlessly complicated, as problems get longer. From here on, both training and testing take place entirely in the world of mod 23.
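The mod 23 arithmetic in the worked solution is easy to check by hand or in a few lines of Python. The variable names follow the solution above; the comments use my own shorthand for each quantity.

```python
MOD = 23  # all arithmetic in the dataset is carried out modulo 23

p = 17 % MOD          # School Bags in a Dance Studio
W = 13 % MOD          # Messenger Bags in a Film Studio
B = (p + W) % MOD     # Film Studios at Central High: 30 mod 23
R = (W + B) % MOD     # intermediate sum used for g
g = (12 + R) % MOD    # School Bags in a Film Studio: 32 mod 23
w = (g + W) % MOD     # Backpacks in a Film Studio
c = (B * w) % MOD     # Backpacks at Central High: 154 mod 23
print(B, R, g, w, c)  # 7 20 9 22 16
```

Every intermediate value stays between 0 and 22, no matter how many steps the problem has.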

Both the problem and the answer are long and a bit convoluted, but in short, the problem statement is built by preparing variables made of arbitrary nouns, such as "the number of Film Studios at Riverview High" and "the number of Messenger Bags in a Film Studio", expressing the relationships among them as sentences following templates, and lining those sentences up. Many problem statements and answers of the same type are generated automatically in the same manner. Although generation is template-based, the diversity is ample: the number of substantially different problem statements reaches 90 trillion. As a result, training and test problems practically never overlap, and there is no concern that the model simply memorizes problems.

During training, the concatenation of the two,

The number of Film Studios at Riverview High is 5 times the sum of "the number of Backpacks in a Film Studio" and "the number of School Bags in a Dance Studio". The number of School Bags in a Film Studio is 12 more than the sum of "the number of Messenger Bags in a Film Studio" and "the number of Film Studios at Central High". The number of Film Studios at Central High equals the sum of "the number of School Bags in a Dance Studio" and "the number of Messenger Bags in a Film Studio". The number of Dance Studios at Riverview High equals the total of "the number of Backpacks in a Film Studio", "the number of Messenger Bags in a Film Studio", "the number of School Bags in a Film Studio", and "the number of Backpacks at Central High". The number of School Bags in a Dance Studio is 17. The number of Messenger Bags in a Film Studio is 13. How many Backpacks are there at Central High? Define the number of School Bags in a Dance Studio as p. So p = 17. Define the number of Messenger Bags in a Film Studio as W. So W = 13. Define the number of Film Studios at Central High as B. So B = p + W = 17 + 13 = 7. Define the number of School Bags in a Film Studio as g. Since R = W + B = 13 + 7 = 20, g = 12 + R = 12 + 20 = 9. Define the number of Backpacks in a Film Studio as w. So w = g + W = 9 + 13 = 22. Define the number of Backpacks at Central High as c. So c = B × w = 7 × 22 = 16. Answer: 16

is used as the input text, and the language model is again trained with next token prediction. In the experiments, about 50 million texts of this kind are prepared and the language model is trained from scratch. At the risk of repeating myself, no web corpus is used at all. The language model is trained only on the corpus of problems created in this manner.

Language models can solve unseen problems

This may be no surprise to readers familiar with LLMs, but a language model trained this way can answer test problems it has never seen before with high accuracy (over 99% correct).

The non-trivial point is that it becomes able to solve problems of a difficulty never seen during training. Even when the model was given only problems solvable in at most 21 steps during training and was then suddenly shown problems requiring 28 or more steps at test time, it answered them accurately. This is an important result for building AI that surpasses humans. Training data for language models is created by humans, so there is a concern that the intelligence a language model can acquire is capped by the intelligence of the humans who create the data. This result, however, suggests that even when the intelligence in the training data is limited, a model may naturally acquire intelligence beyond it. There have been earlier studies on this question, such as Weak-to-Strong Generalization [Burns+ ICML 2024], but because they used web corpora, they could not reliably guarantee that the test problems were truly harder than the data used in training. This study secures that guarantee by using controlled training data.

Language models reach the answer by the shortest path

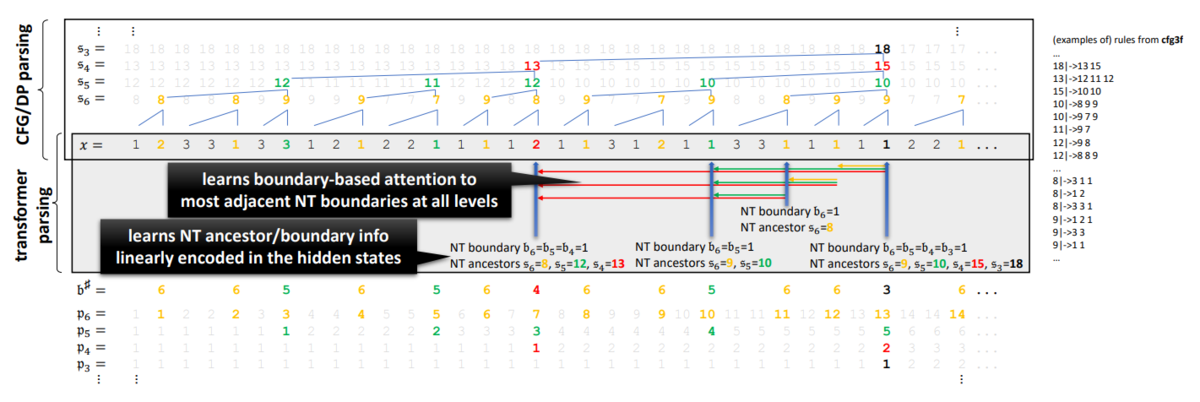

There are countless ways to answer a word problem correctly. Computing extra variables along the way is fine, and an answer was judged correct as long as the final result was right. However, analyzing the chains of thought the language model produced shows that even when unnecessary variables appear in the problem statement, in most cases the model performs no unnecessary computation at all and reaches the answer by the shortest route. This contrasts with how humans answer, which involves trial and error. To see why this happens, let us look inside the language model's head.

Language models analyze the dependencies among variables in their heads

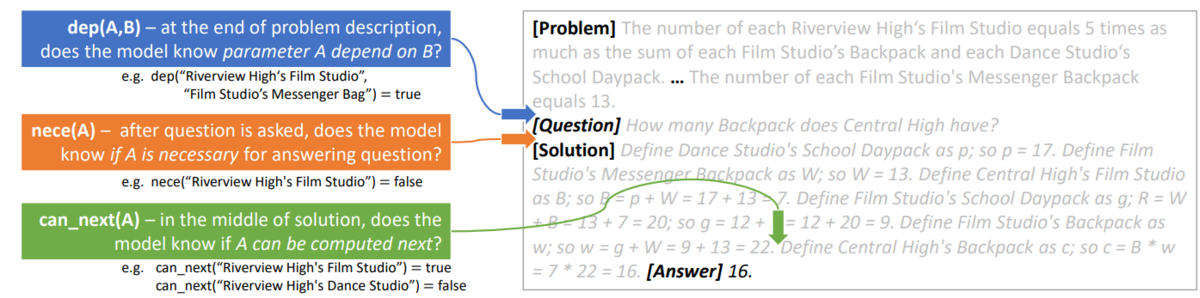

Probing revealed the following.

- Right after the premises of the problem have been input, and just before the question is input, the language model knows in its head the dependency relations between all pairs of variables. For example, it knows in its head that to compute "the number of Film Studios at Central High", it first needs to know "the number of School Bags in a Dance Studio" and "the number of Messenger Bags in a Film Studio".

- Right after the question has been input, and just before the chain of thought begins, the language model knows in its head the set of variables needed to answer the question. Put the other way around, it knows which variables are unnecessary for answering the question.

- While generating the chain of thought, the language model knows in its head the set of variables already computed and the set of variables that can be computed next.

Because the language model knows these things in its head, it can compute, in order, variables that are both necessary for answering the question and computable next, and thereby derive the answer without mistakes and by the shortest route.
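The three pieces of information listed above are exactly what a simple graph algorithm would need. The sketch below encodes the example problem as a dependency graph and derives the necessary set and a shortest computation order. The graph encoding and the variable names are my own, read off the problem statement and the worked solution, and the code is meant as an analogy for what the probes detect, not as the mechanism inside the model.

```python
# Hypothetical encoding of the example problem: variable -> variables it depends on.
DEPENDS_ON = {
    "riverview_film_studios": ["film_backpacks", "dance_school_bags"],
    "film_school_bags": ["film_messenger_bags", "central_film_studios"],
    "central_film_studios": ["dance_school_bags", "film_messenger_bags"],
    "riverview_dance_studios": ["film_backpacks", "film_messenger_bags",
                                "film_school_bags", "central_backpacks"],
    "dance_school_bags": [],
    "film_messenger_bags": [],
    "film_backpacks": ["film_school_bags", "film_messenger_bags"],
    "central_backpacks": ["central_film_studios", "film_backpacks"],
}

def necessary_variables(query):
    """All variables the query transitively depends on (including itself)."""
    needed, stack = set(), [query]
    while stack:
        var = stack.pop()
        if var not in needed:
            needed.add(var)
            stack.extend(DEPENDS_ON[var])
    return needed

def shortest_solution_order(query):
    """Repeatedly compute a variable that is both necessary and ready."""
    needed = necessary_variables(query)
    computed, order = set(), []
    while query not in computed:
        ready = sorted(v for v in needed - computed
                       if all(d in computed for d in DEPENDS_ON[v]))
        order.append(ready[0])      # ties broken alphabetically for determinism
        computed.add(ready[0])
    return order

order = shortest_solution_order("central_backpacks")
print(order)
```

For this problem the resulting order corresponds to p, W, B, g, w, c in the worked solution, and the two Riverview High variables are never touched.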

At the risk of repeating myself, recall that the language model was not taught this way of solving problems. It was simply trained with next token prediction. Merely training a language model on raw text with next token prediction is enough for it to acquire, automatically and in its head, this kind of "correct way to solve word problems".

When language models answer incorrectly, they have also made the mistake in their heads

Even a well-trained language model sometimes gets math problems wrong. An analysis of the internal states in those cases shows that, in many of them, the language model mistakenly believed in its head that it could compute a variable that in fact could not be computed yet.

Interestingly, even before the language model has output a single character, looking into its head (its internal state) lets us predict that the model is about to make a mistake, and when the model is then allowed to continue, it does output a wrong answer.

Using this mechanism to improve reasoning accuracy is the subject of the next paper.

Physics of Language Models: Part 2.2, How to Learn From Mistakes on Grade-School Math Problems (ICLR 2025)

As the title says, this paper is about how to learn from mistakes on grade-school math problems.

The main result of this paper, in one sentence, is that language models can notice their own mistakes and correct them.

The data used is the same grade-school math dataset as before.

After outputting a mistake, language models regret it in their heads

In the previous paper, we saw that when the model answers incorrectly, in many cases it mistakenly believed in its head that it could compute a variable that could not be computed yet. Right after declaring that it will "compute" such a variable, however, in a certain fraction of cases (around 60%) the model knows in its head that the variable "cannot be computed yet". In other words, it regrets in its head having said it would compute the variable. Having made the declaration, it cannot turn back and goes on to output a nonsensical computation.

This is related to the self-delusions of autoregressive models [Ortega+ DeepMind Technical Report 2021]. An autoregressive language model cannot take back what it has said. On the contrary, its own past statements become its input, and it comes to believe that its own mistaken statements are correct. Suppose there is a problem that a language model can solve correctly with an ordinary chain of thought. If the model is given this problem and made to output the answer first and only then begin its chain of thought, it has been observed that even when the initial answer is wrong, the model forces the argument to flow toward that answer and ultimately submits the wrong conclusion [McCoy+ arXiv 2023].

Previous studies observed what language models said out loud. The present experiments show that when this happens, the language model is actually regretting it in its head, thinking "oops, I'm getting this wrong" (but since it can no longer stop, it just keeps talking).

Language models can acquire the ability to correct mistakes

If the model knows it has made a mistake, we would like it to correct itself and arrive at the right conclusion.

Indeed, there are many studies on having language models correct their own output [Madaan+ NeurIPS 2023, Pan+ TACL 2024]. As a simple case, just telling ChatGPT "that's wrong, please fix it" gets it to say "I'm sorry, I made a mistake" and correct itself.

These approaches let the model output the wrong answer in full and then have it self-correct. However, as we saw above, the language model notices a mistake right after making it, so having it continue all the way to the end while it knows it has made a mistake is wasteful. Here we consider a way to have the language model correct the mistake by itself immediately after the erroneous step.

Specifically, we prepare data like the following, in which deliberately wrong reasoning steps are inserted, each immediately followed by a special token [BACK].

Define the number of Film Studios at Central High as [BACK] Define the number of School Bags in a Dance Studio as p. So p = 17. Define the number of Messenger Bags in a Film Studio as W. So W = 13. Define the number of Film Studios at Central High as B. So B = p + W = 17 + 13 = 7. Define the number of Backpacks in a Film Studio as [BACK] Define the number of School Bags in a Film Studio as g. Since R = W + B = 13 + 7 = 20, g = 12 + R = 12 + 20 = 9. Define the number of Backpacks in a Film Studio as w. So w = g + W = 9 + 13 = 22. Define the number of Backpacks at Central High as c. So c = B × w = 7 × 22 = 16. Answer: 16

A corpus in which part of the data has been modified in this way is prepared, and a language model is trained on it from scratch.
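Constructing such data is mechanical once the dependencies between steps are known. Below is a rough sketch of one way to do it: before each correct step, with some probability, open a step for a variable whose inputs are not yet available and immediately retract it with [BACK]. The step list, the `retry_rate` parameter, and the helper names are all hypothetical and shortened for illustration; the paper's actual data pipeline may differ.

```python
import random

# Each step: (variable name, variables it needs, text of the step).
# Hypothetical, shortened version of the worked solution (arithmetic is mod 23).
STEPS = [
    ("p", [], "Define Dance Studio's School Bag as p. So p = 17."),
    ("W", [], "Define Film Studio's Messenger Bag as W. So W = 13."),
    ("B", ["p", "W"], "Define Central High's Film Studio as B. So B = p + W = 7."),
    ("g", ["W", "B"], "Define Film Studio's School Bag as g. So g = 12 + W + B = 9."),
]
# Opening words of each step, e.g. "Define Central High's Film Studio as".
OPENING = {name: text.split(" as ")[0] + " as" for name, _, text in STEPS}

def add_retries(steps, retry_rate, rng):
    """Before each correct step, with probability `retry_rate`, start a step
    whose inputs are not yet available and immediately retract it with [BACK]."""
    out, computed = [], set()
    for name, deps, text in steps:
        not_ready = [n for n, d, _ in steps
                     if n not in computed and not all(x in computed for x in d)]
        if not_ready and rng.random() < retry_rate:
            out.append(OPENING[rng.choice(not_ready)] + " [BACK]")
        out.append(text)
        computed.add(name)
    return " ".join(out)

rng = random.Random(0)
augmented = add_retries(STEPS, retry_rate=0.5, rng=rng)
print(augmented)
```

Deleting every "... as [BACK]" fragment from the output recovers the original clean solution, so the correct steps themselves are left unchanged.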

Training on data with deliberate mistakes and corrections like this enables the model to self-correct its mistakes, and accuracy on difficult test data rose substantially.

There are two interesting points.

The first is that things work well even without removing the erroneous reasoning steps from training. Putting mistakes into the training data raises the concern that the language model will learn to make mistakes on purpose. A more elaborate approach would be to feed the model text in which wrong steps and correct steps are mixed, exclude the wrong steps from the training loss, and have the model learn only how to output the [BACK] token, in other words, to apply masking. But even without such tricks, simply doing next token prediction on the mixed data improved accuracy.

The second is that even when wrong steps were inserted at a high rate (for example, at half of the steps), the language model almost never inserted wrong steps on purpose at test time and, as before, reached the correct answer in the minimum number of steps in most cases. In most cases, it output the [BACK] token and corrected itself only when it had actually made a mistake.

The ability to correct mistakes needs to be acquired during pretraining

It turned out that the ability to correct mistakes cannot be acquired through LoRA fine-tuning. When a model was first trained from scratch on clean, mistake-free data, as the original model was, and then LoRA fine-tuned on data containing wrong steps like the example above, it acquired almost no ability to self-correct mistakes. This indicates that the ability to recognize a mistake in one's head and the ability to correct it out loud are far apart, and that a modification as small as LoRA fine-tuning is not enough to acquire the latter.

Physics of Language Models: Part 3.1, Knowledge Storage and Extraction (ICML 2024)

日本語ã«è¨³ã™ã¨ã€ŒçŸ¥è˜ã®è²¯è”µã¨æŠ½å‡ºã€ã§ã™ã€‚

ã“ã®è«–æ–‡ã®ä¸»è¦ãªçµæžœã‚’一言ã§è¡¨ã™ã¨ã€çŸ¥è˜ã®è²¯è”µã¨æŠ½å‡ºã¯åˆ¥ç‰©ã¨ã„ã†ã“ã¨ã§ã™ã€‚言語モデルã¯çŸ¥è˜ã®è²¯è”µã¯å¾—æ„ã§ã™ã€‚ã—ã‹ã—ã€çŸ¥è˜ã‚’抽出ã§ãるよã†ã«ã™ã‚‹ãŸã‚ã«ã¯å·¥å¤«ãŒå¿…è¦ã§ã™ã€‚

言語モデルã®ç‰©ç†å¦ã®ä¸»è¦ãªç‰¹å¾´ã¯ã€ã‚¦ã‚§ãƒ–ã‹ã‚‰åŽé›†ã—ãŸã‚³ãƒ¼ãƒ‘スを使ã‚ãšã€ãã£ã¡ã‚Šã‚³ãƒ³ãƒˆãƒãƒ¼ãƒ«ã•ã‚ŒãŸãƒ‡ãƒ¼ã‚¿ã‚»ãƒƒãƒˆã‚’使ã£ã¦è¨€èªžãƒ¢ãƒ‡ãƒ«ã‚’訓練ã™ã‚‹ã“ã¨ã§ã‚ã£ãŸã“ã¨ã‚’æ€ã„出ã—ã¦ãã ã•ã„。

ã“ã“ã§ã‚‚ã‚„ã¯ã‚Šã‚³ãƒ³ãƒˆãƒãƒ¼ãƒ«ã•ã‚ŒãŸå®Ÿé¨“ã‚’ã™ã‚‹ã¹ãã€æ–°ãŸã«ãƒ‡ãƒ¼ã‚¿ã‚»ãƒƒãƒˆã‚’作æˆã—ã¾ã™ã€‚具体的ã«ã¯å¤§é‡ã®æž¶ç©ºã®äººç‰©ã®ä¼è¨˜ã‚’作æˆã—ã¾ã™ã€‚åå‰ã€ç”Ÿå¹´æœˆæ—¥ã€å‡ºç”Ÿåœ°ã€å’æ¥ã—ãŸå¤§å¦ã€å¤§å¦ã®å°‚æ”»ã€å‹¤ã‚ã¦ã„ãŸä¼šç¤¾ã€ä¼šç¤¾ã®æ‰€åœ¨åœ°ã€ã“れらをランダムã«ç”Ÿæˆã—ã€ä¼è¨˜ã‚’作æˆã—ã¾ã™ã€‚ä¼è¨˜ã¯ãƒ†ãƒ³ãƒ—レートã«ã“れらã®ãƒ—ãƒãƒ•ã‚£ãƒ¼ãƒ«ã‚’当ã¦ã¯ã‚ãŸã‚‚ã®ã¨ã€ãƒ—ãƒãƒ•ã‚£ãƒ¼ãƒ«ã‚’プãƒãƒ³ãƒ—トã«å…¥ã‚Œã¦è¨€èªžãƒ¢ãƒ‡ãƒ« (Llama) ã«ç”Ÿæˆã•ã›ã‚‹ 2 種類作æˆã—ã¾ã™ã€‚ã©ã¡ã‚‰ã®å¤‰ç¨®ã‚’実験ã«ä½¿ã£ã¦ã‚‚çµæžœã‚’大ãã変ã‚らãªã„ã®ã§ã€ä»¥ä¸‹ã§ã¯å¤‰ç¨®ã«ã¤ã„ã¦ã¯è¨€åŠã—ã¾ã›ã‚“。

A biography looks like the following text.

Anya Briar Forger was born on October 2, 1996. She spent her early years in Princeton, New Jersey. She received mentorship and guidance from faculty members at Massachusetts Institute of Technology. She majored in Communications. She had a professional role at Meta Platforms. She worked in Menlo Park, California.

Biographies are created for 100,000 fictional people.

Separately, question-answering data is also created. For example:

Q: Where did Anya Briar Forger work? A: Menlo Park, California
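The template-based variant of this dataset can be sketched roughly as follows. Everything here is a simplified assumption for illustration: the value pools (apart from the Anya Briar Forger values quoted above), the template wording, and the function names are made up, and the real dataset draws from far larger pools.

```python
import random
from typing import Dict, List

# Hypothetical value pools; the "Example ..." entries are placeholders.
POOLS: Dict[str, List[str]] = {
    "birth_date": ["October 2, 1996", "September 12, 1994"],
    "birth_city": ["Princeton, New Jersey", "Example Town, Ohio"],
    "university": ["Massachusetts Institute of Technology", "Example State University"],
    "major": ["Communications", "Physics"],
    "company": ["Meta Platforms", "Example Corp"],
    "company_city": ["Menlo Park, California", "Example City, Texas"],
}

# One template sentence per attribute, rendered in this fixed order.
TEMPLATES: Dict[str, str] = {
    "birth_date": "{name} was born on {value}.",
    "birth_city": "{name} spent their early years in {value}.",
    "university": "{name} studied at {value}.",
    "major": "{name} majored in {value}.",
    "company": "{name} had a professional role at {value}.",
    "company_city": "{name} worked in {value}.",
}

QUESTIONS: Dict[str, str] = {
    "birth_date": "What is {name}'s date of birth?",
    "company_city": "Where did {name} work?",
}


def sample_profile(name: str, rng: random.Random) -> Dict[str, str]:
    """Draw every attribute independently at random."""
    profile = {key: rng.choice(values) for key, values in POOLS.items()}
    profile["name"] = name
    return profile


def render_biography(profile: Dict[str, str]) -> str:
    """Fill the profile into the templates, one sentence per attribute."""
    return " ".join(
        TEMPLATES[key].format(name=profile["name"], value=profile[key])
        for key in TEMPLATES
    )


def render_qa(profile: Dict[str, str], attribute: str) -> str:
    """Render a single question-answer line for one attribute."""
    question = QUESTIONS[attribute].format(name=profile["name"])
    return f"Q: {question} A: {profile[attribute]}"


rng = random.Random(0)
profile = sample_profile("Anya Briar Forger", rng)
bio = render_biography(profile)
qa = render_qa(profile, "company_city")
```

Keeping the profile as a dictionary means the biography text and the question-answer text are generated from the same ground truth, which is what makes exact accuracy measurement possible.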

Language models need to see some questions during pretraining

A language model is trained from scratch on the biographies of everyone together with question-answer text for a subset of people (the training people). At test time, the model is given questions about people whose question-answer text was withheld during training (the test people), and we check whether it answers correctly. As most would expect, the model answers with high accuracy (86.6%).

Next, a language model is trained from scratch on the biographies alone, then fine-tuned (instruction-tuned) on the question-answer text of the training people, and finally given questions about the test people. Surprisingly, accuracy collapses (below 10%). In other words, instruction tuning does not work if the model never saw instruction-style data during pretraining and only saw biographies.

This, too, could be verified only because of the controlled setup. Real-world language models often do fine with instruction tuning alone, but they may well have seen similar data during pretraining. By guaranteeing that no question-answering data appears in pretraining and pretraining strictly on biographies, the experiment shows that instruction tuning by itself does not make question answering work.

But why does this happen?

Inspecting the failed model shows that it can correctly continue the biographies of the test people as well (99% accuracy). The knowledge about the test people is therefore stored inside the model. It just cannot be extracted at all.

Intuitively, the model never learned how to retrieve knowledge during pretraining, so that skill never developed.

The mechanism is examined in more detail below.

Language models can answer questions once the data is augmented

As described above, when a language model is trained from scratch on biographies alone and then fine-tuned on the question-answer text of the training people, it fails to answer questions about the test people. The experiments so far used exactly one biography per person. Now the order in which the profile attributes appear is shuffled at random, producing five biographies per person. With this change, instruction tuning succeeds even though pretraining still uses only biography text, and the model answers questions about the test people with high accuracy (96.6%).

Why is that? The effect seems too strong for a mere data augmentation effect.
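The order-shuffling augmentation described here, five biographies per person with the attributes in random order, can be sketched as below. This is a minimal illustration: the function name `augment_biography` and the example sentences are assumptions, and each sentence repeats the full name so that it still makes sense after reordering.

```python
import random
from typing import List


def augment_biography(sentences: List[str], n_variants: int = 5, seed: int = 0) -> List[str]:
    """Return n_variants biographies, each a random reordering of the same sentences."""
    rng = random.Random(seed)
    variants = []
    for _ in range(n_variants):
        shuffled = list(sentences)  # copy so the input order is left untouched
        rng.shuffle(shuffled)
        variants.append(" ".join(shuffled))
    return variants


sentences = [
    "Anya Briar Forger was born on October 2, 1996.",
    "Anya Briar Forger spent her early years in Princeton, New Jersey.",
    "Anya Briar Forger majored in Communications.",
    "Anya Briar Forger worked in Menlo Park, California.",
]
variants = augment_biography(sentences)
```

Every variant carries exactly the same facts; only the position at which each fact appears changes.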

Without data augmentation, a language model cannot extract a piece of information until all the preceding information is in place

Consider the original language model trained without data augmentation. Inspecting its internal state shows that each piece of information is absent from the model's "mind" until just before it is output.

For example, the biography of Anya Briar Forger (a fictional person) reads as follows.

Anya Briar Forger was born on October 2, 1996. She spent her early years in Princeton, New Jersey. She received mentorship and guidance from faculty members at Massachusetts Institute of Technology. She majored in Communications. She had a professional role at Meta Platforms. She worked in Menlo Park, California.

Given only "Anya Briar Forger" as input, the language model trained without augmentation generates this entire biography correctly. The model has therefore memorized and stored the knowledge about Anya Briar Forger.

Suppose this model is fed the biography up to this point:

Anya Briar Forger was born on October 2, 1996. She spent her early years in Princeton, New Jersey. She received mentorship and guidance from faculty members at Massachusetts Institute of Technology.

Trying to predict the workplace from the internal state at this stage fails. Only after the input reaches

Anya Briar Forger was born on October 2, 1996. She spent her early years in Princeton, New Jersey. She received mentorship and guidance from faculty members at Massachusetts Institute of Technology. She majored in Communications. She had a professional role at Meta Platforms.

can the fact that she worked in Menlo Park, California be read from the internal state.

In other words, the knowledge is not stored as "Anya Briar Forger" → "worked in Menlo Park, California". It is stored only as "Anya Briar Forger, from Princeton, New Jersey, graduated from Massachusetts Institute of Technology, majored in Communications, worked at Meta Platforms" → "worked in Menlo Park, California". One could say the model remembers the facts only as a story, not in a form that supports one-question-one-answer recall. When there is only one biography per person, this way of storing knowledge is indeed sufficient for generating that biography. Recall once more that language models are trained solely by next token prediction. All the model has to do is output the "next" token; it has no need to prepare a token ten steps ahead in its head. So it is not surprising that such an unnecessary skill never develops. With this way of memorizing, however, the question-answering task cannot be solved.
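A toy analogy may make this "stored only as a story" picture concrete. The sketch below is not a model of a transformer and is not from the paper; it is a hypothetical lookup table, named `train_prefix_table` here, that memorizes only which sentence follows each exact prefix.

```python
from typing import Dict, List, Tuple

Prefix = Tuple[str, ...]


def train_prefix_table(stories: List[List[str]]) -> Dict[Prefix, str]:
    """Memorize, for every proper prefix of every story, only the next item."""
    table: Dict[Prefix, str] = {}
    for story in stories:
        for i in range(1, len(story)):
            table[tuple(story[:i])] = story[i]
    return table


def generate(table: Dict[Prefix, str], prompt: List[str]) -> List[str]:
    """Greedily extend the prompt for as long as the exact prefix is known."""
    out = list(prompt)
    while tuple(out) in table:
        out.append(table[tuple(out)])
    return out


story = [
    "Anya Briar Forger",
    "was born on October 2, 1996.",
    "Grew up in Princeton, New Jersey.",
    "Studied at Massachusetts Institute of Technology.",
    "Majored in Communications.",
    "Worked at Meta Platforms.",
    "Worked in Menlo Park, California.",
]
table = train_prefix_table([story])

full = generate(table, story[:1])              # the name alone regenerates the whole story
after_name = table.get(tuple(story[:1]))       # directly reachable from the name: birth date only
after_employer = table.get(tuple(story[:6]))   # workplace appears only after the full prefix
```

The table reproduces the biography perfectly from the name, yet the workplace is keyed on the whole preceding story, so nothing maps the name directly to it. That mirrors, loosely, why a model trained this way can recite but cannot answer a direct question.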

In contrast, feed a language model trained on augmented data just

Anya Briar Forger

At this point the fact that she worked in Menlo Park, California can already be read from the internal state.

In other words, data augmentation put the model under pressure, since it can never tell when a piece of knowledge will be asked for, and as a result the information became retrievable at any time from the person's name alone.

Augmenting the data for only some people is enough for the model to store information well

The augmentation experiment created five biographies for every person. In fact, even if augmentation is applied to only a subset of people and the rest are trained on without augmentation, the model answers correctly with high accuracy for the non-augmented people as well. Once the model has learned the right way to memorize from some people, it applies the same approach to everyone else.

This scenario is arguably closer to reality. In the real world, many biographies exist for a handful of famous people. Real-world language models presumably learned a good way of storing information from such data, which is why they can also answer questions about other people.

The practical lessons to draw from this are:

- Including instruction-tuning text relevant to the downstream task in pretraining improves accuracy. For example, putting question-answering examples into pretraining lets the language model learn how to extract knowledge.

- Using text that expresses the same information in various ways during pretraining improves accuracy. For example, data augmentation that changes the order in which information is presented raises the accuracy of knowledge extraction.

Physics of Language Models: Part 3.2, Knowledge Manipulation (ICLR 2025)

In Japanese, the title reads 「知識の操作」 (manipulation of knowledge).

The main result of this paper, in one sentence, is that even when a language model can store knowledge, it cannot manipulate that knowledge without chain of thought.

The data is the same fictional biography dataset as before.

Language models can extract knowledge only in the form in which it was stored

Take a language model trained from scratch on biographies alone and fine-tune it to answer dates of birth, as in

Q: What is Anya Briar Forger's date of birth? A: October 2, 1996

As described for the previous paper, the model answers with high accuracy once data augmentation is applied.

In the same way, fine-tune it to answer the year of birth, as in

Q: In what year was Anya Briar Forger born? A: 1996

This time the accuracy turns out to be extremely low (around 20%). Why?

Note that in the paper's experiments dates are written in the American style, as in October 2, 1996.

The reason is the same as for the previous result. Data augmentation was applied, but it only shuffled the order of the profile attributes. Whenever a date of birth is stated, every biography writes it in the American order, October 2, 1996. As a result, the model cannot extract 1996 until "October 2," has been supplied.

The same holds for classification questions such as

Q: Was Anya Briar Forger born in an even year? A: Yes

and for comparison questions such as

Q: Was Anya Briar Forger born earlier than Sabrina Eugeo Zuberg? A: No

The language model knows that Anya Briar Forger was born on October 2, 1996 and that Sabrina Eugeo Zuberg was born on September 12, 1994, so telling which is earlier should be easy, yet it cannot answer.

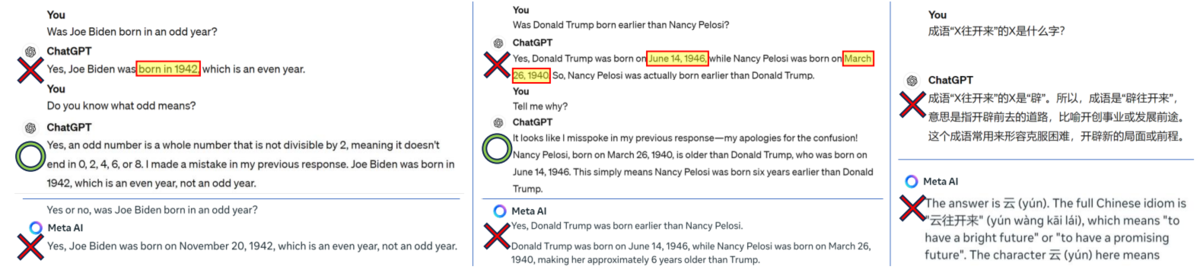

In short, language models can store knowledge, and they can extract it as long as it is requested in the order seen during training, but they cannot manipulate it. Real-world language models such as GPT-4 have also been observed to fail at knowledge classification questions like "Was Joe Biden born in an odd year?"

Real-world language models do sometimes solve this kind of question. When they do, though, it is likely that the exact question or a nearly identical one simply happened to appear on the web (someone may have tweeted "Joe Biden was born in an odd year" for no particular reason), and that the model is not solving it by manipulating knowledge. At the risk of repeating the point, web corpora are so complex that nobody can tell whether this is really the case. What this paper's experiments confirm is that, under controlled conditions, language models indeed cannot manipulate knowledge.

Chain of thought helps, but only if it is also used at test time

The obvious remedy for this problem is chain of thought.

Q: In what year was Anya Briar Forger born? A: Anya Briar Forger was born on October 2, 1996. So the answer is: 1996

Fine-tuning the model on text like this lets it answer with high accuracy.

However, even a model trained this way answers incorrectly when it is asked at test time to output the answer immediately. It has learned how to carry out chain of thought, not how to manipulate knowledge directly.
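The two fine-tuning formats contrasted in this section, answering directly versus first restating the stored fact, can be written out as a small sketch. The helper names and the exact question wording are assumptions for illustration; only the overall shape of the examples follows the text.

```python
def birth_year(birth_date: str) -> str:
    """Extract the year from an American-style date such as 'October 2, 1996'."""
    return birth_date.rsplit(", ", 1)[1]


def direct_example(name: str, birth_date: str) -> str:
    """Fine-tuning text that asks for the year with no intermediate step."""
    return f"Q: In what year was {name} born? A: {birth_year(birth_date)}"


def cot_example(name: str, birth_date: str) -> str:
    """Fine-tuning text that first recites the full date in its stored order."""
    return (
        f"Q: In what year was {name} born? "
        f"A: {name} was born on {birth_date}. So the answer is: {birth_year(birth_date)}"
    )


direct = direct_example("Anya Briar Forger", "October 2, 1996")
cot = cot_example("Anya Briar Forger", "October 2, 1996")
```

In the chain-of-thought format the year is produced only after "October 2," has been written out, which is exactly the order in which the model stored it.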

Language models cannot do inverse search

The most striking example is inverse search, that is, a task like

Q: Who was born on October 2, 1996?

Even after fine-tuning on this task, and even when only one person was born on the date given at test time, the language model cannot answer at all. This holds even though the model knows that Anya Briar Forger was born on October 2, 1996. The reason is that it has only ever seen the information in the order "[person] was born on [date of birth]".

For language models, then, the symmetry "if B can be retrieved from A, then A can be retrieved from B" does not hold. This is a fatal flaw for use as a search engine. What is more, inverse search of this kind is hard to solve with chain of thought.

This comes down to the fact that a language model is, after all, a language model. Because forward lookup works reasonably well, it is tempting to think a language model subsumes a search engine, but it is a language model, not a search engine. The legacy of being trained by "next" token prediction shows up clearly here.

The same tendency can be observed in real models such as GPT-4. For example, given a passage from Jane Austen's Pride and Prejudice and asked for the next sentence, GPT-4 answers correctly 65.9% of the time, but asked for the previous sentence, it answers correctly only 0.8% of the time. For text that always appears in a fixed order during pretraining, accuracy shows a drastic asymmetry.

Physics of Language Models: Part 3.3, Knowledge Capacity Scaling Laws (ICLR 2025)

In Japanese, the title reads 「知識容量のスケーリング則」 (scaling laws of knowledge capacity).

The main result of this paper, in one sentence, is that a language model can store about 2 bits of information per parameter.

Scaling laws have traditionally analyzed loss or test performance as a function of the number of model parameters. This study instead analyzes how many knowledge triples such as (Anya Briar Forger, workplace, Menlo Park, California) a model can store, or more precisely, how many bits of knowledge it can store.

The data is the same fictional biography dataset as before.

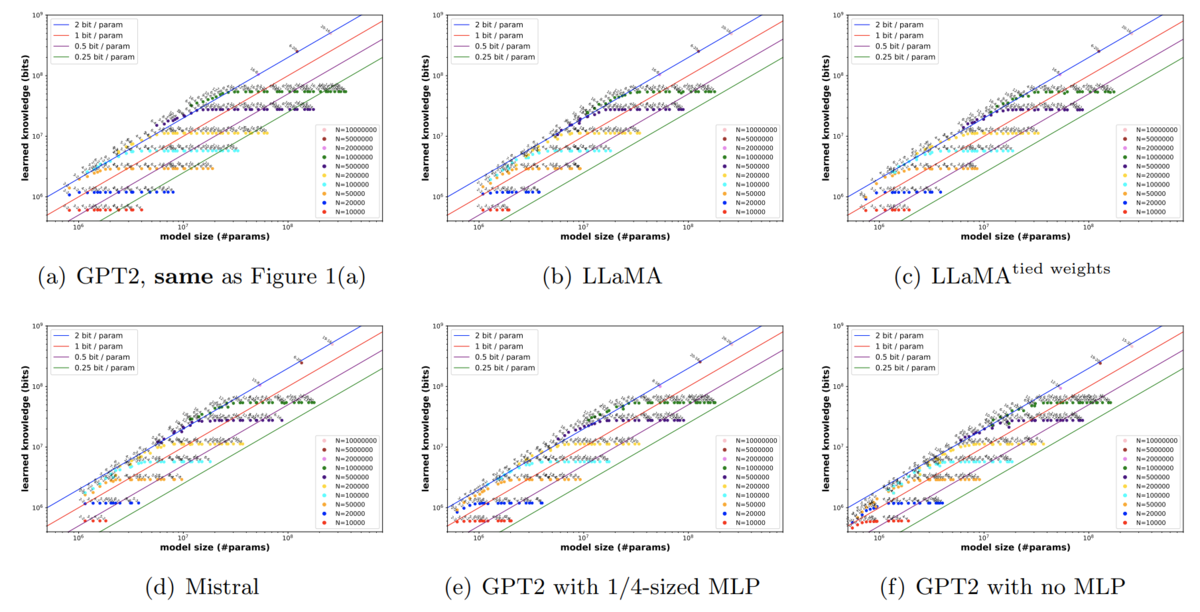

Language models can store about 2 bits of information per parameter

The number of people was varied from 10,000 to 10 million and the number of model parameters from 1 million to several hundred million, and the models consistently stored 2 bits of profile information per parameter. The same trend held when the architecture was changed among GPT-2, Llama, Mistral, and others, and when the number of distinct company names and place names was changed.

int8 quantization does not reduce storage capacity, but int4 quantization reduces storage efficiency

Repeating the experiment with quantization, storage capacity barely changed under int8. The theoretical limit of int8 is of course 8 bits per parameter, so the language model makes effective use of about 25% of the theoretical capacity. With int4 quantization, on the other hand, capacity got worse by more than a factor of 2. In terms of efficiency, then, int8 was the best choice.

25% may sound small, but reaching it purely by training with next token prediction is far from obvious. The theoretical limit is the kind of figure you reach only by stripping away all text other than the knowledge triples, compressing the knowledge with zip, and packing it tightly into storage. Achieving 25% of that limit through the indirect route of training a model with SGD, while the model also acquires abilities beyond memorizing triples, is quite remarkable.
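The 25% figure and the int8 versus int4 comparison are simple ratios, worked through below. One assumption is labeled in the code: the text only says int4 capacity is "more than a factor of 2" worse, so 1.0 bit per parameter is used as an upper bound rather than a measured value. The function name `utilization` is made up for this sketch.

```python
def utilization(stored_bits_per_param: float, bits_per_weight: int) -> float:
    """Fraction of the raw storage of one weight that ends up holding knowledge."""
    return stored_bits_per_param / bits_per_weight


# int8: about 2 bits of knowledge in an 8-bit weight.
int8_util = utilization(2.0, 8)

# int4 (assumption): capacity is more than 2x worse, so at most 2.0 / 2 = 1.0
# bit per parameter. The true utilization is therefore below this bound.
int4_upper_bound = utilization(2.0 / 2, 4)

print(f"int8 utilization: {int8_util:.0%}")
print(f"int4 utilization: below {int4_upper_bound:.0%}")
```

Since int4 halves the storage per weight but loses more than half of the stored knowledge, its utilization falls below the 25% of int8, which is the sense in which int8 comes out ahead.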

Junk data degrades storage capacity, but marking the source prevents the degradation

In addition to the people used for question answering, a large number of meaningless biographies that are never used for question answering are created and mixed into the training data. Each person used for question answering is seen 100 times during training, whereas each meaningless biography is discarded after being seen once. Since it appears only once, it is indistinguishable from a random biography. Does such junk data get in the way of memorizing the important data?

Answer: very much so. Training with junk data mixed in like this degrades storage capacity per parameter by more than a factor of 20. The quality of pretraining data therefore matters a great deal.

There is a simple fix, though: prepend a special token, any token at all, to the important data. [wikipedia.org] would do, for example. (So would [joisino.hatenablog.com], of course.) With that, the harmful effect of the junk data disappears.

The model is never told explicitly during training that data carrying this token is important. Again, it is trained with nothing more than next token prediction. Even so, the language model learns on its own that text starting with this token is important.
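Constructing such a mixed corpus can be sketched as follows. The function names, the placeholder junk strings, and the junk count of 1,000 are assumptions for illustration; the 100 repetitions for important data and the single pass for junk follow the description above.

```python
import random
from typing import List


def tag_source(text: str, source: str = "wikipedia.org") -> str:
    """Prepend a source marker such as '[wikipedia.org]' to a piece of text."""
    return f"[{source}] {text}"


def build_corpus(important: List[str], junk: List[str], repeats: int = 100, seed: int = 0) -> List[str]:
    """Repeat tagged important text `repeats` times and add each junk text once."""
    corpus = [tag_source(text) for text in important for _ in range(repeats)]
    corpus.extend(junk)  # junk is untagged and appears a single time
    random.Random(seed).shuffle(corpus)
    return corpus


important = ["Anya Briar Forger worked in Menlo Park, California."]
junk = [f"Throwaway biography number {i}." for i in range(1000)]
corpus = build_corpus(important, junk)
```

Nothing in the corpus states that tagged text matters more. The tag is just another token, and the model has to discover from next token prediction alone that text after it is worth memorizing.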

Real-world language models also tend to surface information from Wikipedia, and this mechanism may be one of the reasons. Real-world models are trained on web corpora through complex pipelines, so unfortunately the cause cannot be pinned down. This experiment does show, however, that in a controlled environment language models have a built-in mechanism for telling disposable junk data apart from important data that appears repeatedly.

Through this paper we have seen that a language model can store about 2 bits of information per parameter. Conversely, when the total amount of required knowledge is known, a model whose parameter count is about half that amount expressed in bits is sufficient. Even a 7B model holds 14 billion bits, which exceeds the total knowledge in English Wikipedia, so a language model can pack in a great deal of knowledge.
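The sizing rule that follows from 2 bits per parameter is quick to check numerically. This is back-of-the-envelope arithmetic only; the constant and function names are made up for this sketch, and the 2-bit figure is the approximate value reported in the text.

```python
import math

BITS_PER_PARAM = 2  # approximate capacity reported in the text


def capacity_bits(n_params: int) -> int:
    """Knowledge capacity, in bits, of a model with n_params parameters."""
    return n_params * BITS_PER_PARAM


def required_params(knowledge_bits: int) -> int:
    """Smallest parameter count whose capacity covers knowledge_bits."""
    return math.ceil(knowledge_bits / BITS_PER_PARAM)


seven_b = capacity_bits(7_000_000_000)
print(f"7B model capacity: {seven_b:,} bits")
print(f"Parameters needed for 14 billion bits: {required_params(14_000_000_000):,}")
```

The required parameter count is the number of bits divided by two, so a knowledge base of N bits needs roughly N / 2 parameters under this estimate.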

Conclusion

I believe the concept of the physics of language models is promising.

Of course, carrying the name of physics does not guarantee breakthroughs like those of physics. Something intrinsically simple can be described in a deliberately complicated way, but something intrinsically complicated cannot be described simply. Physics happened to have elegant laws, which is why they could be discovered; if language models have no elegant laws to begin with, there is nothing to discover.

Even so, compared with conventional research methods, the physics of language models seems to be heading in a better direction. It has in fact already uncovered a number of useful laws. Even without a guarantee that a unified law as elegant as those of physics lies ahead, I think moving in this direction is worthwhile. Let us keep going, dreaming that a unified law will be found someday. Until the day AGI is achieved.

About the author

If you found this article useful or interesting, I would be glad to hear your thoughts on social media.

New articles and slides are announced at @joisino_ (Twitter). Please follow!

Ryoma Sato (佐藤 竜馬)

Completed the doctoral program at the Graduate School of Informatics, Kyoto University; Ph.D. in Informatics. Currently an assistant professor at the National Institute of Informatics. Author of the books 『深層ニューラルネットワークの高速化』, 『グラフニューラルネットワーク』, and 『最適輸送の理論とアルゴリズム』.