WinRAR has been a staple in the PC community for decades, offering the ability to compress data into compact files for easier transfer. With that, however, comes the occasional security concern, and today we have an example of just such a situation. Reports have begun to circulate, highlighting an issue in all but the latest edition of WinRAR that enables software to execute without the Windows Mark of the Web (MotW) security warning pop-ups.

If you aren't familiar with MotW warnings, you might recognize them as the pop-ups that warn you against running strange software from the internet. A warning typically includes a blurb explaining that it's dangerous to execute applications downloaded from unfamiliar sources, along with options to continue regardless or to cancel the operation entirely. This system can apparently be bypassed in older versions of WinRAR, making for a greater security risk.
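For readers curious how the mark is stored: Windows records it in an NTFS alternate data stream named `Zone.Identifier` attached to the downloaded file, using a small INI-style format. The Python sketch below parses that text. The helper names `zone_id_from_text` and `has_internet_mark` and the sample string are illustrative assumptions, not anything taken from WinRAR or the report.

```python
import configparser
from typing import Optional

# ZoneId 3 denotes the "Internet" zone, the one that triggers the warning.
INTERNET_ZONE = 3

def zone_id_from_text(text: str) -> Optional[int]:
    """Return the ZoneId found in Zone.Identifier contents, or None if absent."""
    # Interpolation is disabled so '%' characters in any URL fields are harmless.
    parser = configparser.ConfigParser(interpolation=None)
    try:
        parser.read_string(text)
        return parser.getint("ZoneTransfer", "ZoneId")
    except (configparser.Error, ValueError):
        return None

def has_internet_mark(text: str) -> bool:
    """True when the parsed zone is the Internet zone."""
    return zone_id_from_text(text) == INTERNET_ZONE

# Hypothetical stream contents for a file downloaded from the web.
sample = "[ZoneTransfer]\nZoneId=3\n"
print(has_internet_mark(sample))
```

On Windows with an NTFS volume, the stream contents can usually be read by opening the file path with `:Zone.Identifier` appended; on other systems the stream does not exist.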

The official release notes for version 7.11 confirm that this vulnerability has been fixed and go on to detail the issue. The notes state, "if symlink pointing at an executable was started from WinRAR shell, the executable Mark of the Web data was ignored." As long as you update to the latest version, this security flaw shouldn't be an issue.

WinRAR confirmed that the security flaw was identified by Shimamine Taihei of Mitsui Bussan Secure Directions, Inc. The concern was reported directly to the WinRAR team, which resolved it by the time version 7.11 was released. The report described the issue as follows: "If a symbolic link specially crafted by an attacker is opened on the affected product, arbitrary code may be executed."

It's important to note that while this flaw requires users to manually open a crafted link to initiate an attack, it still increases risk by skipping the Windows warning pop-up entirely. The MotW system is just an extra layer that warns users before they execute suspicious code, but those pop-ups can be a crucial step in stopping unwanted software from spreading. It's best to update WinRAR to the latest version to avoid any potential mishaps going forward.

In a surprising turn of events, an Nvidia engineer pushed a fix to the Linux kernel, resolving a performance regression seen on AMD integrated and dedicated GPU hardware (via Phoronix). Turns out, the same engineer inadvertently introduced the problem in the first place with a set of changes to the kernel last week, attempting to increase the PCI BAR space to more than 10TiB. This ended up incorrectly flagging the GPU as limited and hampering performance, but thankfully it was quickly picked up and fixed.

In the open-source paradigm, it's an unwritten rule to fix what you break. The Linux kernel is open-source and accepts contributions from everyone, which are then reviewed. Responsible contributors are expected to help fix issues that arise from their changes. So, despite their rivalry in the GPU market, FOSS (Free and Open Source Software) is an avenue that bridges the chasm between AMD and Nvidia.

The regression was caused by a commit that was intended to increase the PCI BAR space beyond 10TiB, likely for systems with large memory spaces. This indirectly reduced a factor called KASLR entropy on consumer x86 devices, which determines the randomness of where the kernel's data is loaded into memory on each boot for security purposes. At the same time, this also artificially inflated the range of the kernel's accessible memory (direct_map_physmem_end), typically to 64TiB.

In Linux, memory is divided into different zones, one of which is the device zone (ZONE_DEVICE), which can be associated with a GPU. The problem here is that when the kernel initialized zone device memory for Radeon GPUs, an associated variable (max_pfn), which represents the top of the RAM the kernel can address, would be artificially raised to 64TiB.

Since the GPU likely cannot access the entire 64TiB range, dma_addressing_limited() would return true. That result essentially restricts the GPU to the DMA32 zone, which offers only 4GB of memory, and explains the performance regressions.
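The comparison at the heart of the regression can be sketched in a few lines of Python. This is a simplified model of the idea described here, not the kernel's actual code: the function names are made up for illustration, and the 44-bit device mask is a hypothetical value chosen only to show the effect.

```python
TIB = 1 << 40
GIB = 1 << 30

def required_mask_bits(highest_phys_addr: int) -> int:
    """Number of address bits needed to reach every byte below highest_phys_addr."""
    return max(1, (highest_phys_addr - 1).bit_length())

def addressing_limited(device_mask_bits: int, highest_phys_addr: int) -> bool:
    """True when the device cannot address everything the kernel thinks exists."""
    return device_mask_bits < required_mask_bits(highest_phys_addr)

GPU_MASK_BITS = 44  # hypothetical DMA mask width, an assumption for this sketch

# Realistic desktop: 32 GiB of RAM, so the GPU can reach all of it.
print(addressing_limited(GPU_MASK_BITS, 32 * GIB))

# Regression case: the reported top of memory is inflated to 64 TiB.
print(addressing_limited(GPU_MASK_BITS, 64 * TIB))
```

With the inflated 64TiB figure the check flips to true even though the machine holds nowhere near that much memory, which is the false positive the fix removes.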

The good news is that this fix should be implemented as soon as the pull request lands, right before the Linux 6.15-rc1 merge window closes today. With a general six to eight week cadence before new Linux kernels, we can expect the stable 6.15 release to be available around late May or early June.

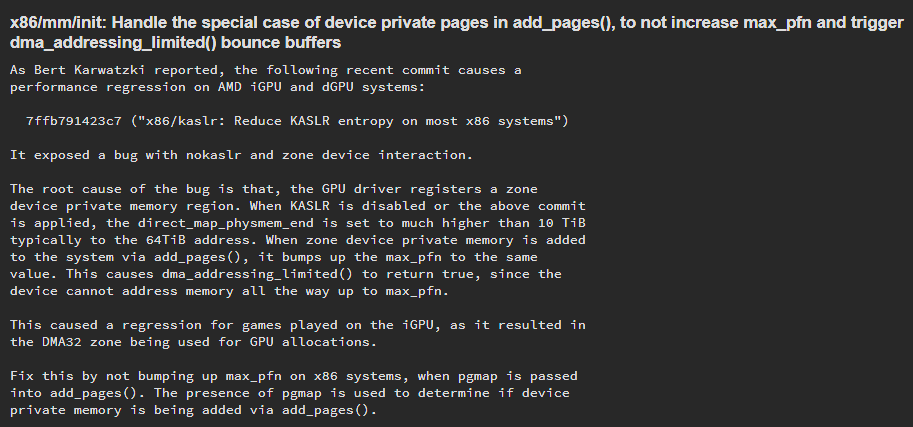

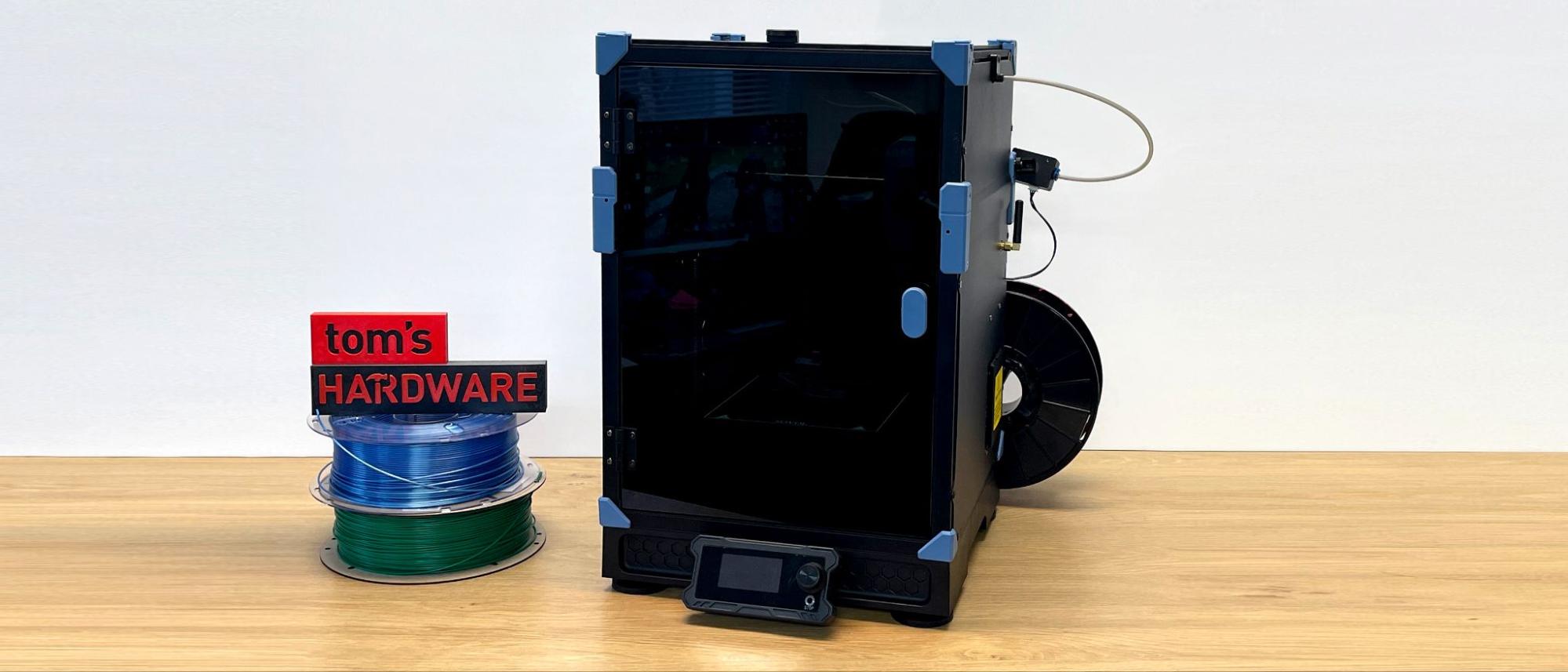

The 3D printing community has seemingly been obsessed with overgrown maximum build volume printers. The petite Sovol Zero is a refreshing change from these backbreaking behemoths. It's a Core XY mini based on the open-source Voron 0 design, which we printed, built, and reviewed a few years ago. Unlike the original Voron, the Sovol Zero is a mostly assembled, plug-and-play machine.

Sovol has declared this to be the fastest 3D printer currently on the market, with acceleration rates up to 40,000mm/s². The profiles included with it have impressive speeds that are much faster than my Bambu Lab. However, 3D printers can’t go faster than physics, so the speeds this – or any – printer can hit are largely dependent on the size and shape of the model. For example, on the Cute Flexi Octopus I printed out, the printer barely pushed past 100mm/s due to all the tiny parts.

It takes full advantage of its enclosed build by including a hotend that can reach 350 degrees Celsius and a build plate that can reach 120 degrees Celsius, which makes printing in technical filaments a breeze. There’s no need for an active chamber heater on a machine this size. You can check the chamber temperature on the Mainsail screen but not on the Zero’s tiny interface.

The Zero has a retail price of $499 and is on sale for $429, which is quite a bargain in both price and time saved from the current print-it-yourself $694 Voron V0.2 kit. It also has a slightly larger 152 mm build plate, making it closer in size to Bambu’s A1 Mini and the Prusa Mini, both of which have 180 x 180 mm build plates.

The Sovol Zero is a delightful machine, but rather niche and with an odd mix of old and new tech. Performance-wise, it’s right up there with the best 3D printers we tested, but it narrowly fell short of making the list. My only true complaint would be the noisy fans and stepper motors that sound like happy droids whistling while they work. But is that really a negative?

Specifications: Sovol Zero

Sovol Zero: Included in the Box

The Sovol Zero comes mostly pre-assembled, with the screen, spool holder and filament runout sensor bagged separately. Also in the box are a paper copy of the manual, Wi-Fi antenna, USB drive with a copy of OrcaSlicer and calibration prints, power cord, scraper, nozzle cleaner, flush cutters, tool kit, PTFE tubing, a fan cover for the filter fan, a spare silicone brush, and spare brass and hardened steel nozzles.

Design of the Sovol Zero

According to rumors, the Sovol Zero was almost called the Sovol 08 Mini, but thankfully, someone talked the company out of it, and the Zero was allowed to stand on its own. If you’re curious about the name, the Zero is inspired by Voron’s open source Voron 0 design – technically the Voron 0.2 – which has a slightly smaller 120 x 120 x 120 mm build volume.

The Zero is like a classic muscle car: simple, heavy, and loud. There are not a lot of plastic parts. It’s mostly made of steel plates and glass panels, with some iconic Sovol Blue injection-molded accents. It weighs in at a hefty 30 pounds, way more than a typical bed slinger. It probably needed all the weight to keep it from vibrating right off your table because, like a muscle car, the tiny Zero hauls booty. It has super squishy, shock-absorbing feet, and Klipper to cancel out the vibrations.

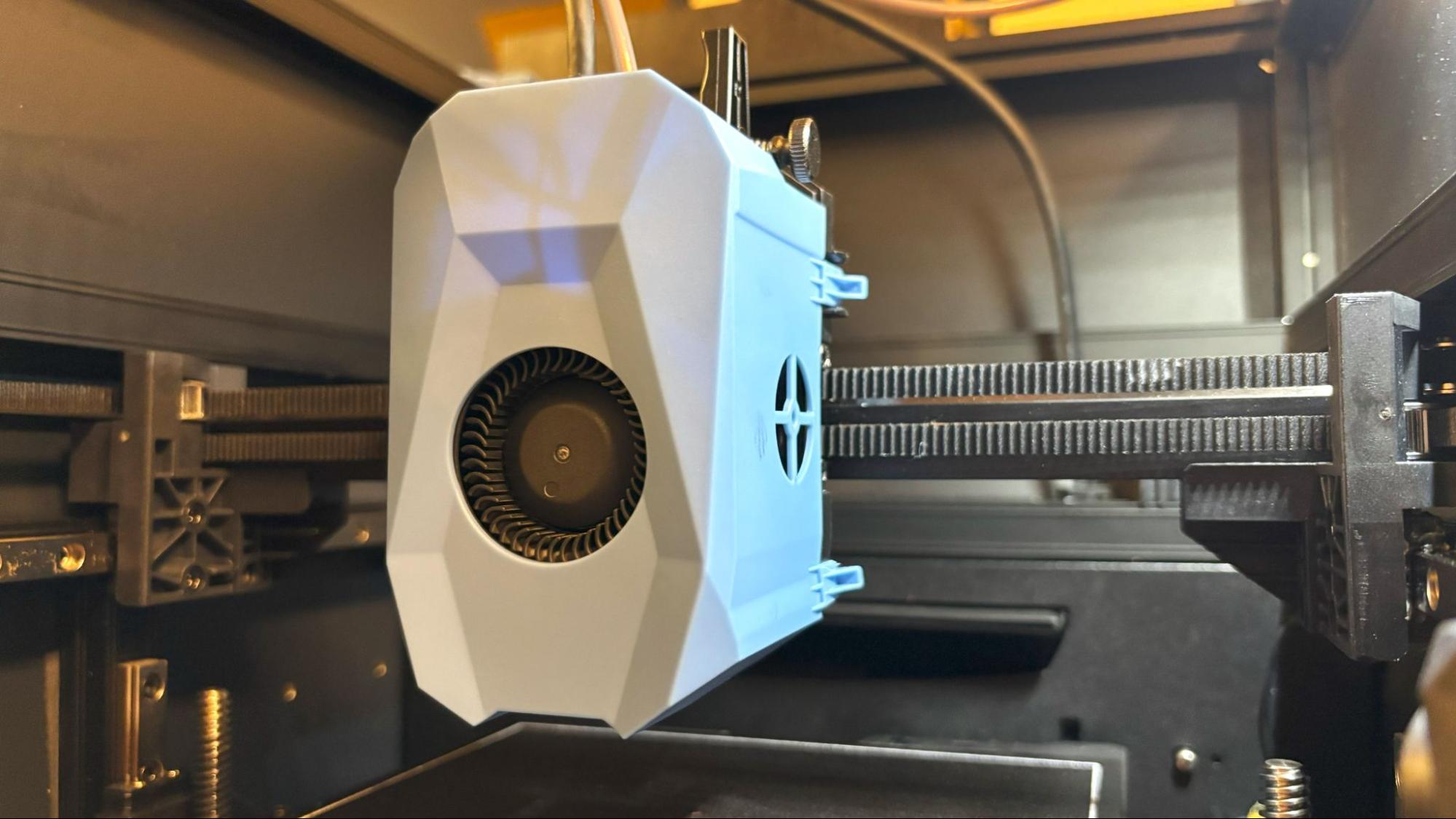

The tool head has a dual-gear extruder with the ubiquitous Sovol wheel flipped around to face the rear. It has a “normal” sized nozzle that looks like a standard V6, but Sovol is offering a drop-in replacement kit that includes the ceramic heater and thermistor pre-assembled for $36.99 or $11.99 for a kit. Sovol rates the nozzle flow at 50mm³/s, which should be able to keep up with its claim of 40,000mm/s² acceleration.
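To put the 50mm³/s flow figure in context, here is a rough Python sketch of the print speed at which the nozzle, rather than the motion system, becomes the bottleneck. The 0.4 mm line width and 0.2 mm layer height are assumptions chosen as typical slicer values, and `flow_limited_speed` is a name invented for this example.

```python
def flow_limited_speed(max_flow_mm3_s: float,
                       line_width_mm: float,
                       layer_height_mm: float) -> float:
    """Fastest linear speed (mm/s) the hotend can feed for a given bead size."""
    # Each millimetre of travel lays down width * height cubic millimetres.
    bead_cross_section_mm2 = line_width_mm * layer_height_mm
    return max_flow_mm3_s / bead_cross_section_mm2

# Sovol's rated flow with an assumed 0.4 mm wide, 0.2 mm tall bead.
speed = flow_limited_speed(50.0, 0.4, 0.2)
print(f"{speed:.0f} mm/s")
```

Under those assumptions the hotend could feed roughly 625mm/s of travel, so thicker layers or wider lines would lower that ceiling proportionally.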

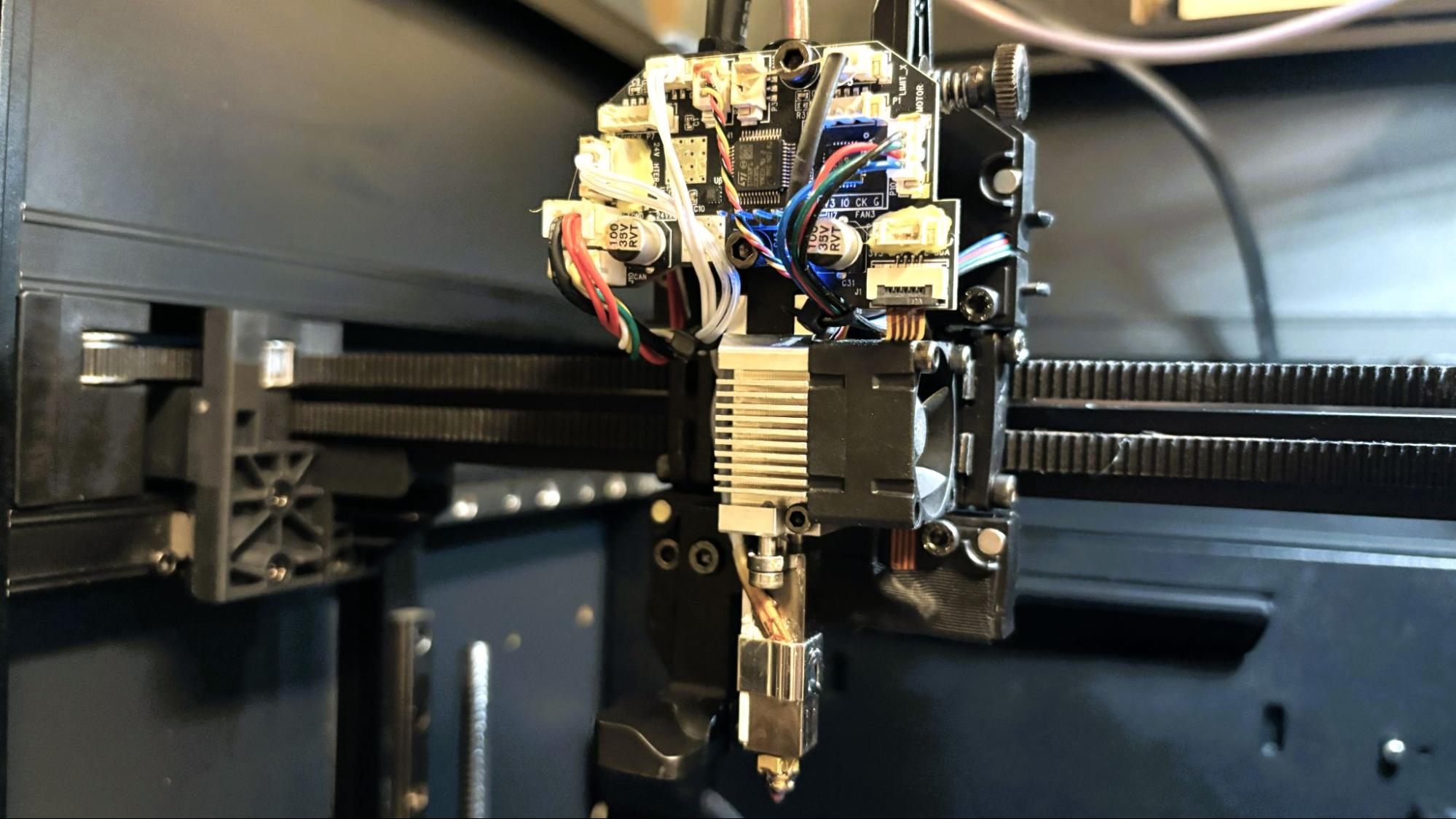

The 3.5-inch minimalist screen is a throwback to previous generations with a monochrome display and selector knob. It’s quite primitive compared to what we expect on modern printers, but honestly, I don’t use it much as Klipper lets me use Mainsail to send files from my PC.

The X and Y axes run on linear rails, and the Z axis runs on twin linear rails with two lead screws belted to a single stepper motor. The belts keep everything in sync.

For bed leveling, the Zero uses both a pressure sensor built into the hotend (which taps) and an eddy current sensor that zooms over the plate to scan it. It also has accelerometers in the tool head and the bed for Klipper’s vibration compensation, which reduces unsightly ringing artifacts caused by vibration.

A built-in camera allows you to monitor your prints from Klipper’s Mainsail screen. It’s not the best camera for capturing timelapses, but it will let you see how your prints are doing. Klipper also makes sending files extremely easy over Wi-Fi without needing to talk to a cloud server, plus there’s a port for a USB drive. The machine can also be used with the Obico app for remote monitoring and spaghetti detection.

The Sovol Zero has an abundance of cooling fans. There is a large 5020 parts cooling fan in the toolhead, a massive auxiliary cooling fan in the rear of the printer, and a filtered cavity heat dissipation fan on the right side. This fan requires a magnetic cover outside the case when running high-temperature filaments like ASA, ABS, or PC.

The motion system of the Sovol Zero may be noisy, but you’ll never hear it, given the complete cacophony of all the fans listed above. This may be the loudest, fastest printer we’ve seen since the FLSUN S1 Pro. The stepper motors are also quite chirpy and sound like an astromech complaining about how you haven’t changed your primary buffer panels like it asked - if you’re the nerdy kind, you might not mind the noise.

Assembling the Sovol Zero

The Sovol Zero comes almost fully assembled. The Wi-Fi antenna screws on to the right side of the printer, along with the filament runout sensor. The spool holder is screwed into the rear. In all, there are only three screws. The screen slots into the bottom front after attaching the ribbon cable.

Leveling the Sovol Zero

Bed leveling and Z offset are automatic and done at the beginning of every print. The eddy current sensor allows the scanning of the bed in a matter of seconds and does an excellent job.

Loading Filament on the Sovol Zero

Loading filament is very simple and easier than with our Voron. Simply place the spool into the side-mounted rack and feed the plastic into the reverse Bowden tube until it reaches the hotend.

The factory set the filament load temperature to 250 degrees Celsius, which is fine for most filaments. For running higher temperature materials like PC, ABS, ASA, and Nylon, this can be adjusted in the Macros.cfg in Klipper. We set it to 300 degrees Celsius to help clear more stubborn filaments.

Reverse the process to change colors or remove the filament.

Preparing Files / Software for Sovol Zero

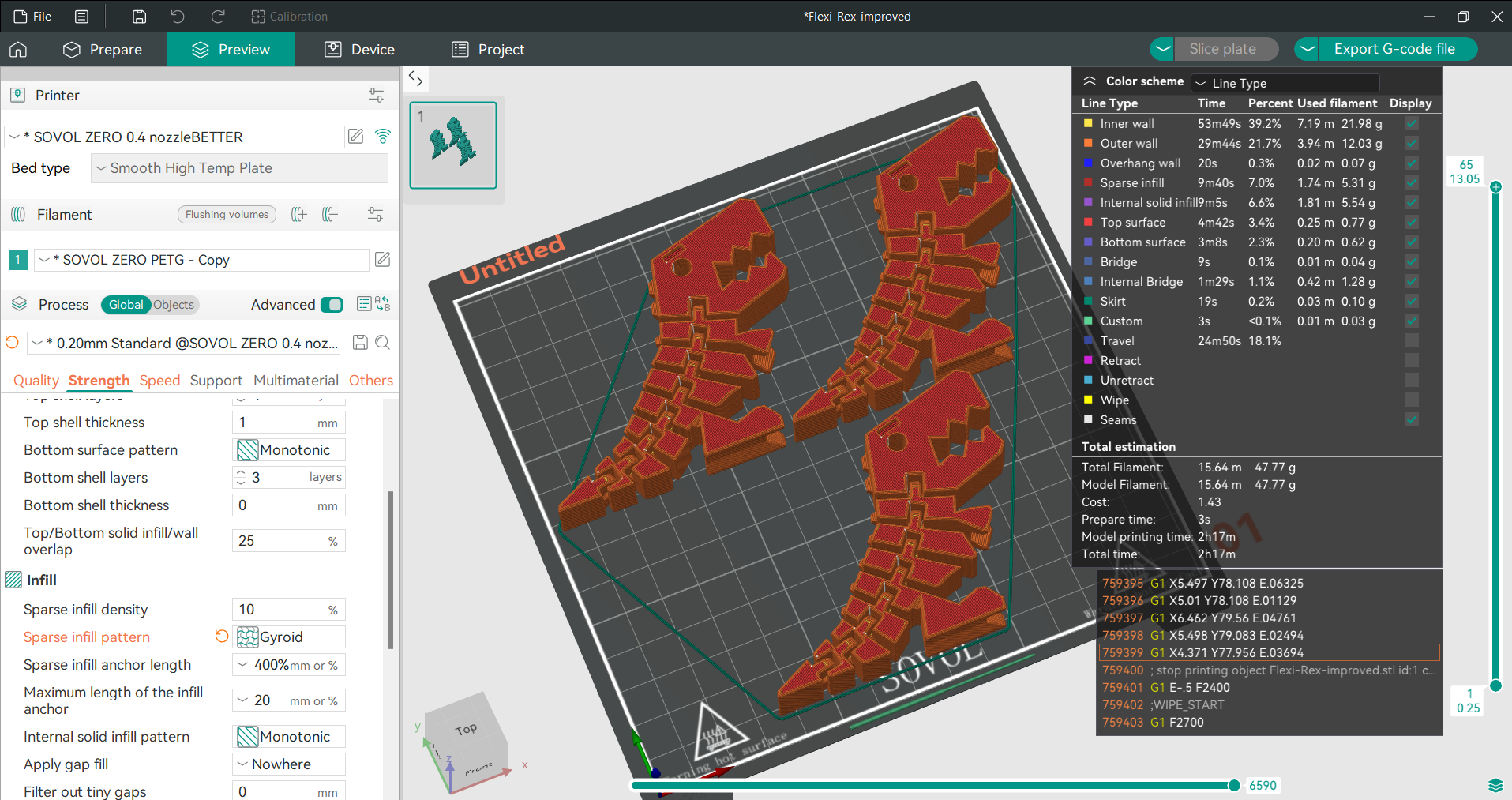

Sovol included a copy of OrcaSlicer, a free third-party slicer based on the open-source PrusaSlicer and Bambu Studio. The included profile worked great for everything except TPU, which required some tweaking.

Printing on the Sovol Zero

The Sovol Zero comes with a sample coil of white PLA, which I didn’t bother using. If you want more colors and materials like silks and multicolor filaments, you should check out our guide to the best filaments for 3D printing for suggestions.

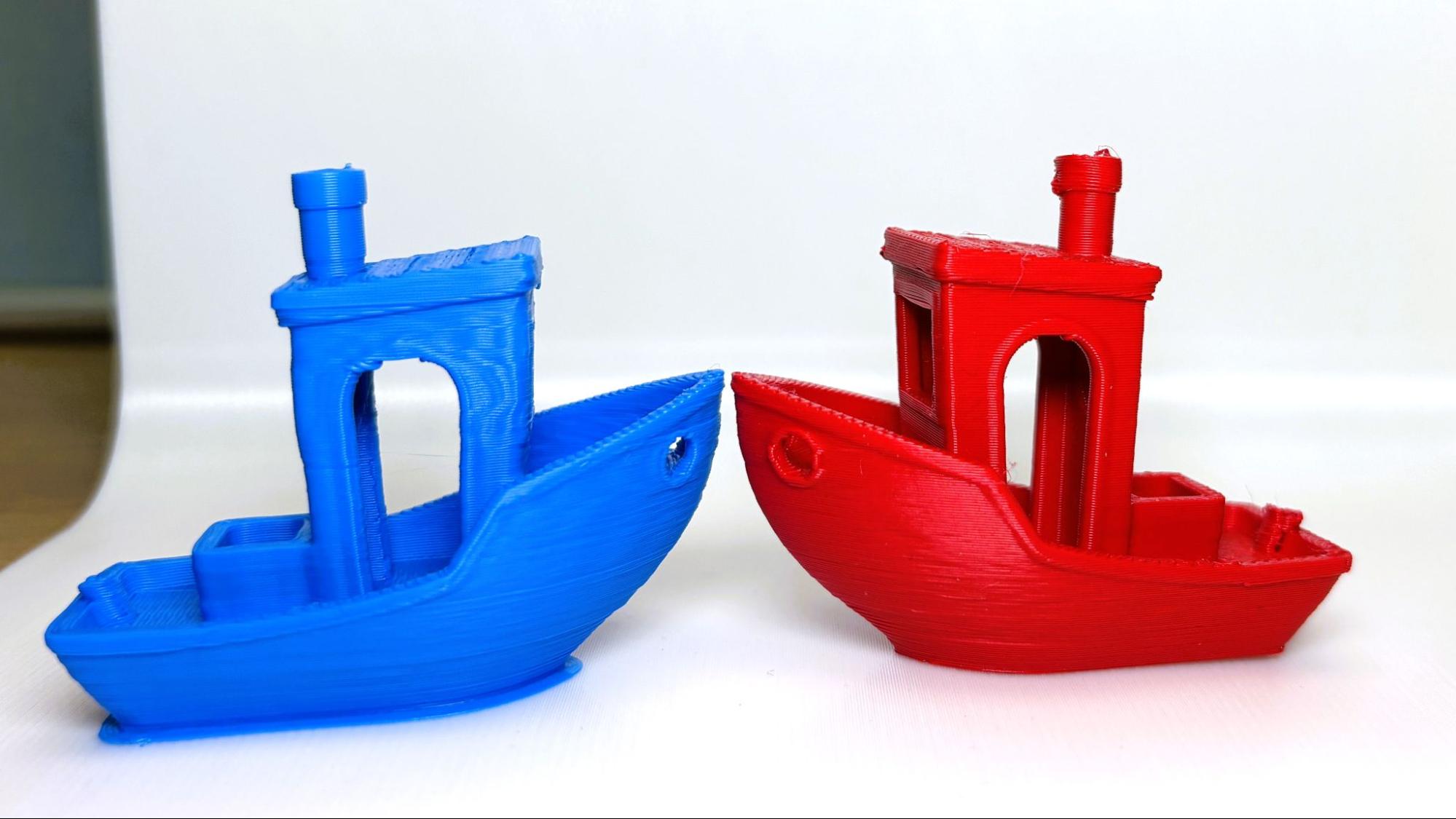

Speed Benchies are tricky, as you’re often judging someone’s slicer skills rather than the printer. The Zero came with a presliced and absolutely eye-popping eight minute and 27 second Benchy that printed a bit wobbly, but quite well for an under 10 minute print. When I tried to replicate it, I got a decent looking 14 minute and 49 second boat with slightly rough layers in the middle. There was no sign of ringing and just a few stray wisps. My boat was printed in Polymaker Red PLA, and the Sovol sliced boat is in Creality Blue PLA.

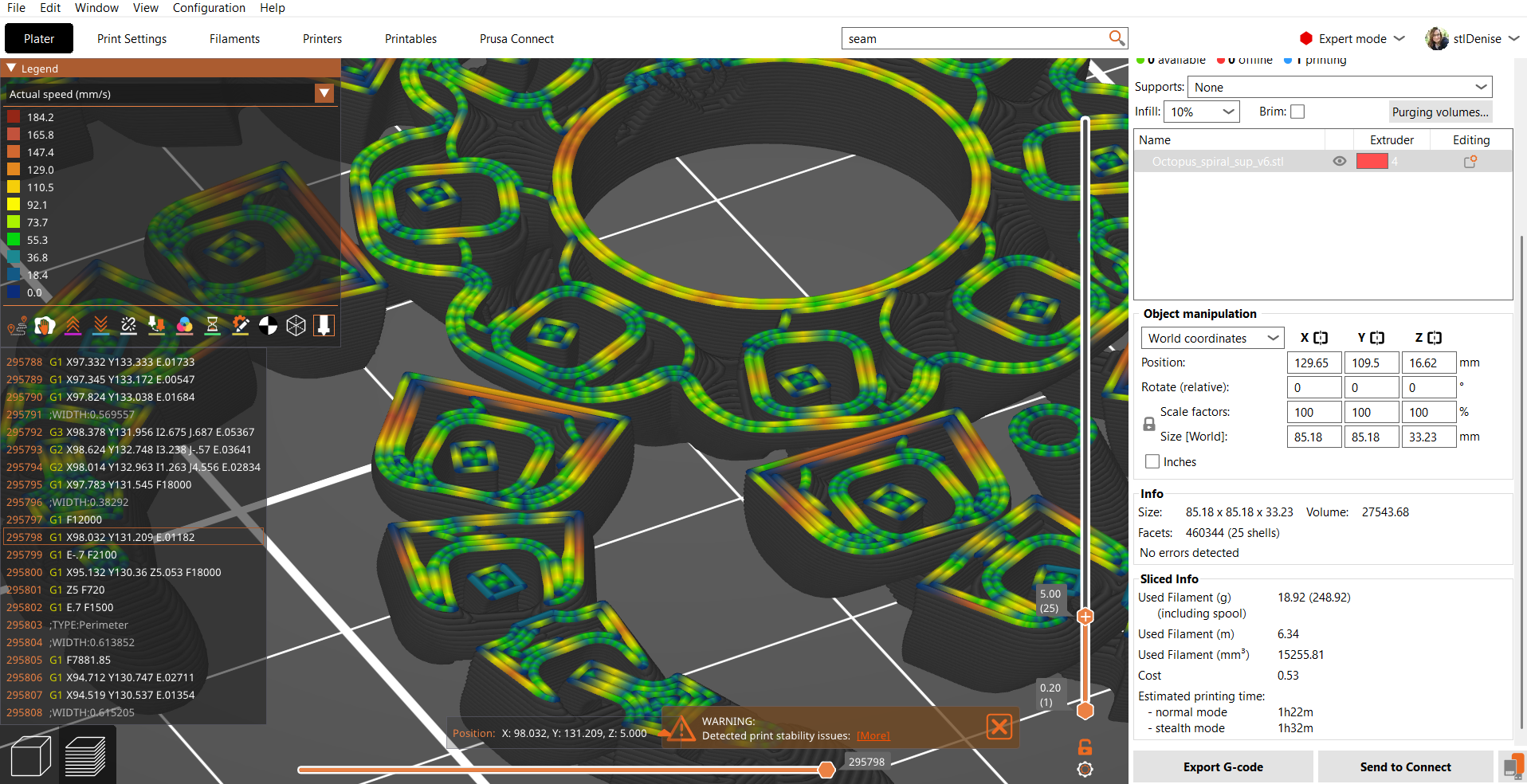

I should briefly touch on the paradox of superfast printers: they are limited by physics. No printer can hit its top speed immediately. It has to accelerate to that speed, then slow down when it has to corner. The flexi Cute Octopus I printed reminded me of that - because no matter how I sliced it, I couldn’t get it to print faster than around 85 minutes.
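The physics can be made concrete with basic constant-acceleration kinematics. The Python sketch below assumes each straight move starts and ends at rest, which is a simplification (real firmware carries some speed through corners), and it uses Sovol's claimed 40,000mm/s² together with the 500mm/s infill limit used for the test prints. The function names are invented for illustration.

```python
import math

def distance_to_reach(speed_mm_s: float, accel_mm_s2: float) -> float:
    """Distance needed to go from rest to the given speed at constant acceleration."""
    return speed_mm_s ** 2 / (2 * accel_mm_s2)

def peak_speed_on_segment(length_mm: float, accel_mm_s2: float,
                          limit_mm_s: float) -> float:
    """Highest speed reached on a straight move that starts and ends at rest."""
    # Accelerate over the first half and brake over the second: v = sqrt(a * d).
    return min(limit_mm_s, math.sqrt(accel_mm_s2 * length_mm))

ACCEL = 40_000.0   # mm/s^2, Sovol's claimed maximum
TOP_SPEED = 500.0  # mm/s, the infill speed limit used in this review

print("ramp-up distance (mm):", distance_to_reach(TOP_SPEED, ACCEL))
for length in (1.0, 2.0, 5.0, 20.0):
    peak = peak_speed_on_segment(length, ACCEL, TOP_SPEED)
    print(f"{length:5.1f} mm segment -> {peak:.1f} mm/s peak")
```

Under these assumptions the head needs a little over 3 mm of straight travel just to reach 500mm/s, and a 1 mm segment tops out near 200mm/s, which is why a model made of tiny features never gets close to the headline speed.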

Looking at the speed charts, it didn’t make any sense. Then, I figured out that the charts showed me the estimated speed, not the actual speed. PrusaSlicer is able to show Actual Speed, so I sliced the Cute Octopus for my MK4, a machine with less than half the acceleration rates, and found that it could print it in 82 minutes. Below is the “actual speed” and you can see the printer is constantly revving up and slowing down.

The flexi Cute Octopus is an excellent print to test bed adhesion, plus I can give them away to kids when I travel to festivals. I was able to fit two Cute Octopi on the bed, and they printed in two hours and 58 minutes. I used a 0.2 mm layer height and PLA default speed settings, with a max speed of 500mm/s on the infill that it never reached. This was printed in Polymaker Red PLA.

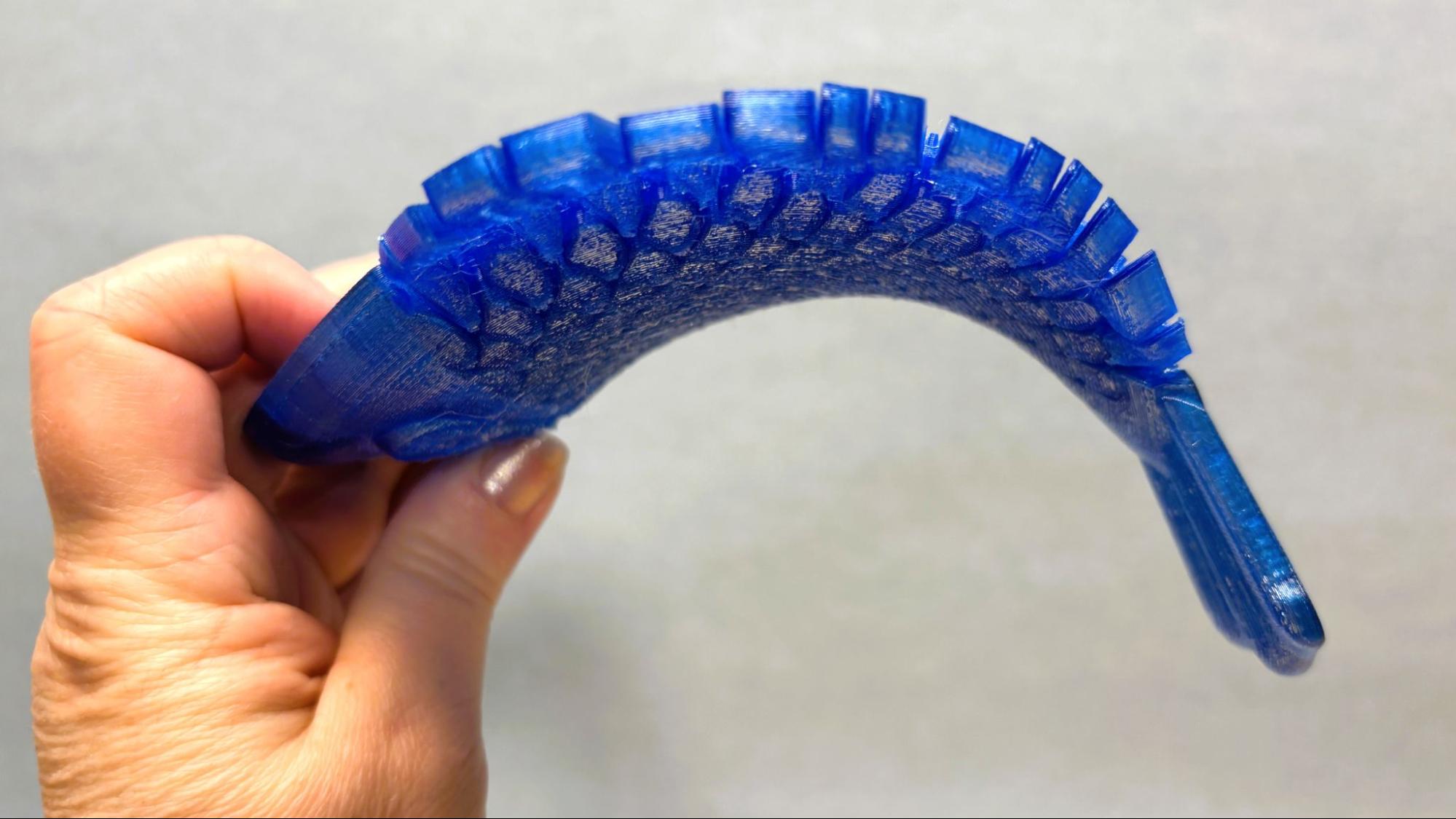

To test out the printer’s maximum build size, I printed this Floppy Flounder in PETG. It took five hours and 30 minutes, using a 0.2mm layer height, 3 walls and 10% infill. It printed great and was completely flexible with very little stringing. The top surface is a little rough, but that can be tuned by adjusting the flow or top speed settings. This was printed in Translucent Blue PETG from ProtoMaker, a company that sadly is no longer in business.

The Zero did a good job with flexible filament. I gave it a barrel-shaped can holder that printed fairly well. It could have used some support around the bottom, but that’s more a model problem than a printer issue. The layers are smooth and even, though there are a few rough spots that look like a clog was trying to happen. This could easily be addressed by tuning the TPU profile. This printed in six hours and 13 minutes, using a 0.2 mm layer height and OrcaSlicer’s default settings. The material is Polymaker Red TPU.

Since the Sovol Zero does high temperature materials very well, I printed a handful of cable organizers in Jet Black Prusament ASA. All the clamps fit on one plate, which printed in 48 minutes and 55 seconds. The print is professional looking and strong with no visible layer lines.

This modular ClampDock is one of my favorite things to print. It currently holds my headphones to the edge of my desk. It needs to be strong, so this is printed out of ABS, PC and TPU. It was originally just going to be PC, but the hinge wouldn’t open, so I switched to ABS for that part, which the Zero had an easier time printing. This was printed with a 0.2 mm layer height, 5 walls and a slowed first layer for good adhesion. It took three hours and 10 minutes to print. Printed (mostly) in Polymaker PolyMax PC.

Bottom Line

The Sovol Zero is a compact Core XY 3D printer built like a tank with endless amounts of horsepower. Its acceleration rate of 40,000mm/s² is backed by a nozzle flow rate that goes up to 50mm³/s. This is all very impressive, but you have to remember that physics exists, and 3D printers need time to ramp up to those speeds. The speed of your 3D printer will always be throttled by the size and shape of your model.

Even so, the models we test-printed had excellent quality at speed, even if we didn’t set any records. The Zero’s enclosure worked very well, so high-temperature filaments weren’t a problem.

I’m a big fan of companies that leave Klipper alone and provide a profile to use with regular OrcaSlicer. These tools are more than complete on their own – and it hopefully saved Sovol money in not having to develop their own code and software.

Currently on sale for $429, the Zero is worth the price when you consider the quality of the build. This is a great printer if you want something like a Voron 0, but don’t want to build it yourself. If you want a regular-sized Core XY that’s super affordable, I’d recommend you check out the Elegoo Centauri Carbon. Beginners looking for bargain-priced color printers should check out the Bambu Lab A1 Mini, our favorite pick for beginners who want a little color in their life. It’s only $389 with a four-color AMS unit.


The Shenzhen 8K UHD Video Industry Cooperation Alliance, a group made up of more than 50 Chinese companies, just released a new wired media communication standard called the General Purpose Media Interface or GPMI. This standard was developed to support 8K and reduce the number of cables required to stream data and power from one device to another. According to HKEPC, the GPMI cable comes in two flavors: a Type-B that seems to have a proprietary connector and a Type-C that is compatible with the USB-C standard.

Because 8K has four times as many pixels as 4K and 16 times as many as 1080p, GPMI is built to carry far more data than other current standards. There are other variables that can impact required bandwidth, of course, such as color depth and refresh rate. The GPMI Type-C connector is set to have a maximum bandwidth of 96 Gbps and deliver 240 watts of power. This is more than double the 40 Gbps data limit of USB4 and Thunderbolt 4, allowing you to transmit more data on the cable. However, it has the same power limit as that of the latest USB Type-C connector using the Extended Power Range (EPR) standard.
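A quick back-of-the-envelope calculation shows why 8K strains existing links. The Python sketch below counts pixels and estimates raw, uncompressed video bandwidth; it assumes 10 bits per color channel, three channels, and ignores blanking intervals, link encoding overhead, and compression, so real cable requirements will differ. The function name is invented for this example.

```python
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "4K": (3840, 2160),
    "8K": (7680, 4320),
}

def pixel_count(name: str) -> int:
    """Total pixels per frame for a named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height

def uncompressed_gbps(name: str, refresh_hz: int,
                      bits_per_channel: int = 10, channels: int = 3) -> float:
    """Raw video payload in gigabits per second (no blanking, no compression)."""
    bits_per_frame = pixel_count(name) * bits_per_channel * channels
    return bits_per_frame * refresh_hz / 1e9

print(pixel_count("8K") // pixel_count("4K"))     # pixel ratio, 8K vs 4K
print(pixel_count("8K") // pixel_count("1080p"))  # pixel ratio, 8K vs 1080p
for hz in (60, 120):
    print(f"8K at {hz} Hz: {uncompressed_gbps('8K', hz):.1f} Gbps")
```

Under these assumptions, 8K at 60 Hz already needs close to 60 Gbps of raw payload, well past a 40 Gbps link, and 8K at 120 Hz would exceed even the 96 Gbps Type-C figure without compression.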

GPMI Type-B beats all other cables, though, with its maximum bandwidth of 192 Gbps and power delivery of up to 480 watts. While that is still not enough to power your RTX 5090 gaming PC through your 8K monitor, it’s more than enough for many gaming laptops with high-end discrete graphics. This will simplify the desk setup of people who prefer a portable gaming computer, since you can use one cable for both power and data. Aside from that, the standard also supports a universal control feature similar to HDMI-CEC, meaning you can use one remote control for all appliances that connect via GPMI and use this feature.

The only widely used video transmission standards that also deliver power right now are USB Type-C (Alt DP/Alt HDMI) and Thunderbolt connections. However, this is mostly limited to monitors, with many TVs still using HDMI. If GPMI becomes widely available, we’ll soon be able to use just one cable to build our TV and streaming setup, making things much simpler.

Right now, at Amazon, you can find the 15.6-inch Samsung Galaxy AI Book4 Edge laptop for one of its best prices to date. This Snapdragon X Plus-based laptop usually goes for around $899, but right now it's marked down to just $695. So far, no expiration has been specified for the discount, so we don't know for how long it will be made available at this rate. It is, however, labeled as a limited offer.

We haven't had the opportunity to review the Samsung Galaxy AI Book4 Edge so far, but we're plenty familiar with several Snapdragon-powered Copilot+ machines. Recently, some controversy arose when the Surface Laptop 7s were frequently returned due to compatibility issues. If you're considering this laptop, you might want to research a little and make sure your favorite games and apps are able to run well on Windows-on-Arm systems. On the positive side, once you go Arm, you should enjoy the best battery life available on Windows devices.

Samsung 15-Inch Galaxy AI Book4 Edge: now $695 at Amazon (was $899)

This laptop is built around a Snapdragon X Plus X1P-42-100 processor. It has a 15.6-inch FHD display and relies on a Qualcomm Adreno GPU. It comes with 16GB of LPDDR5X and a 500GB internal SSD for storage.

The main processor driving the Samsung Galaxy AI Book4 Edge is a Snapdragon X Plus X1P-42-100. This CPU has eight cores with a base speed of 3.4 GHz and a single-core boost feature that takes it up to 3.8 GHz. For graphics, it relies on a Qualcomm Adreno GPU which outputs to a 15.6-inch anti-glare display with an FHD resolution of 1,920 x 1,080 pixels.

As far as memory goes, this edition comes with 16GB of LPDDR5X, and a 500GB internal SSD is fitted for storage. It has a couple of 2W speakers integrated for audio output, but you also get a 3.5mm audio jack to take advantage of. It has an HDMI 2.1 port for outputting video to a secondary screen and a handful of USB ports, including one USB 3.2 port and two USB4 ports.

It is also worth noting that this price is cheaper than the current offer over at the official Samsung website. If you want to check out this deal for yourself, head over to the Samsung 15-inch Galaxy AI Book4 Edge product page on Amazon US for more information and purchase options.

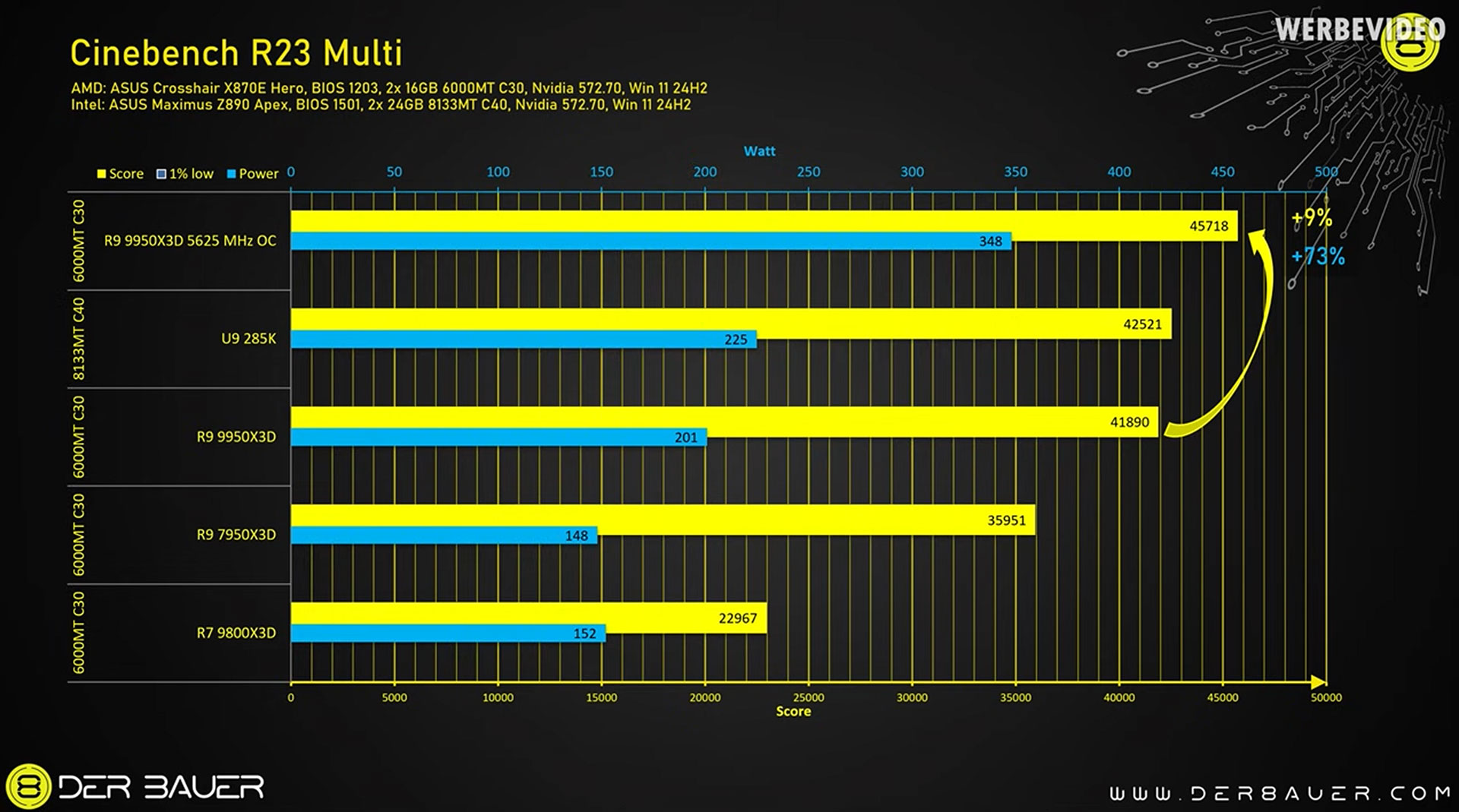

PC overclocking and hardware expert Roman ‘Der8auer’ Hartung has shared his before-and-after AMD Ryzen 9 9950X3D delidding test results. His conclusion: enthusiasts can expect up to 10% higher performance with direct-die cooling, but the cost will be significantly higher power consumption. Alternatively, Der8auer observed that users could run the delidded CPU at stock settings and enjoy far lower temperatures, around 23 degrees Celsius lower in this case, plus improved efficiency. A comfortable compromise might be found between these sampled extremes.

In the video, Der8auer introduces the AMD Ryzen 9 9950X3D, then establishes a baseline performance and thermal profile before the delidding operation. Subsequently, he used the same liquid cooler, settings, and benchmarks to see what benefits the delidding process could deliver.

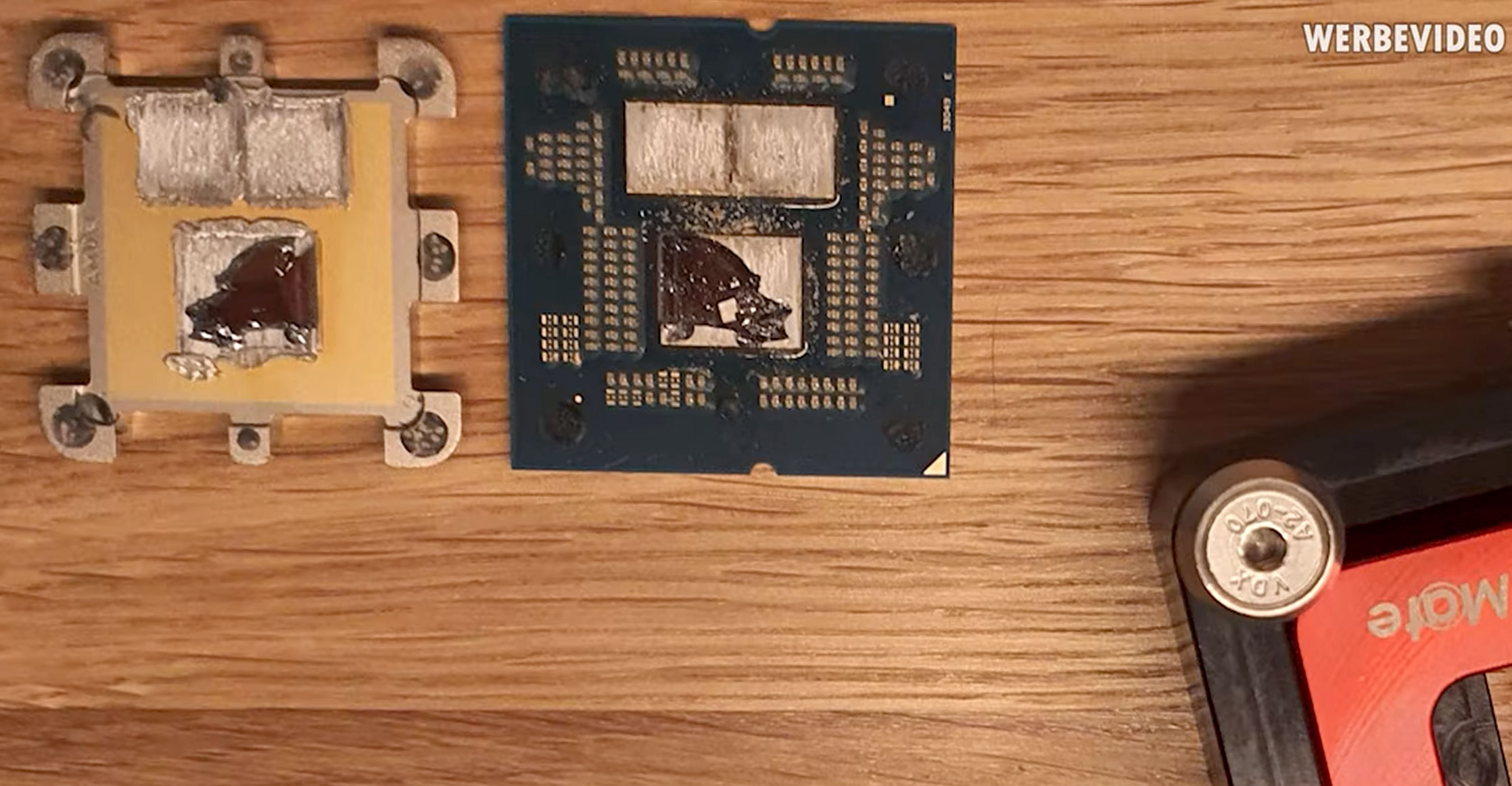

During the delidding process, Der8auer provided some sage advice. He used the still-compatible Delid-Die-Mate Ryzen 7000 device for the 9950X3D. Please take your time ‘wiggling’ the IHS, perhaps up to 100 times, “until it falls off by itself,” pleaded the overclocker. An example of a rush job he shared (reproduced below) should be warning enough for would-be delidders to be patient.

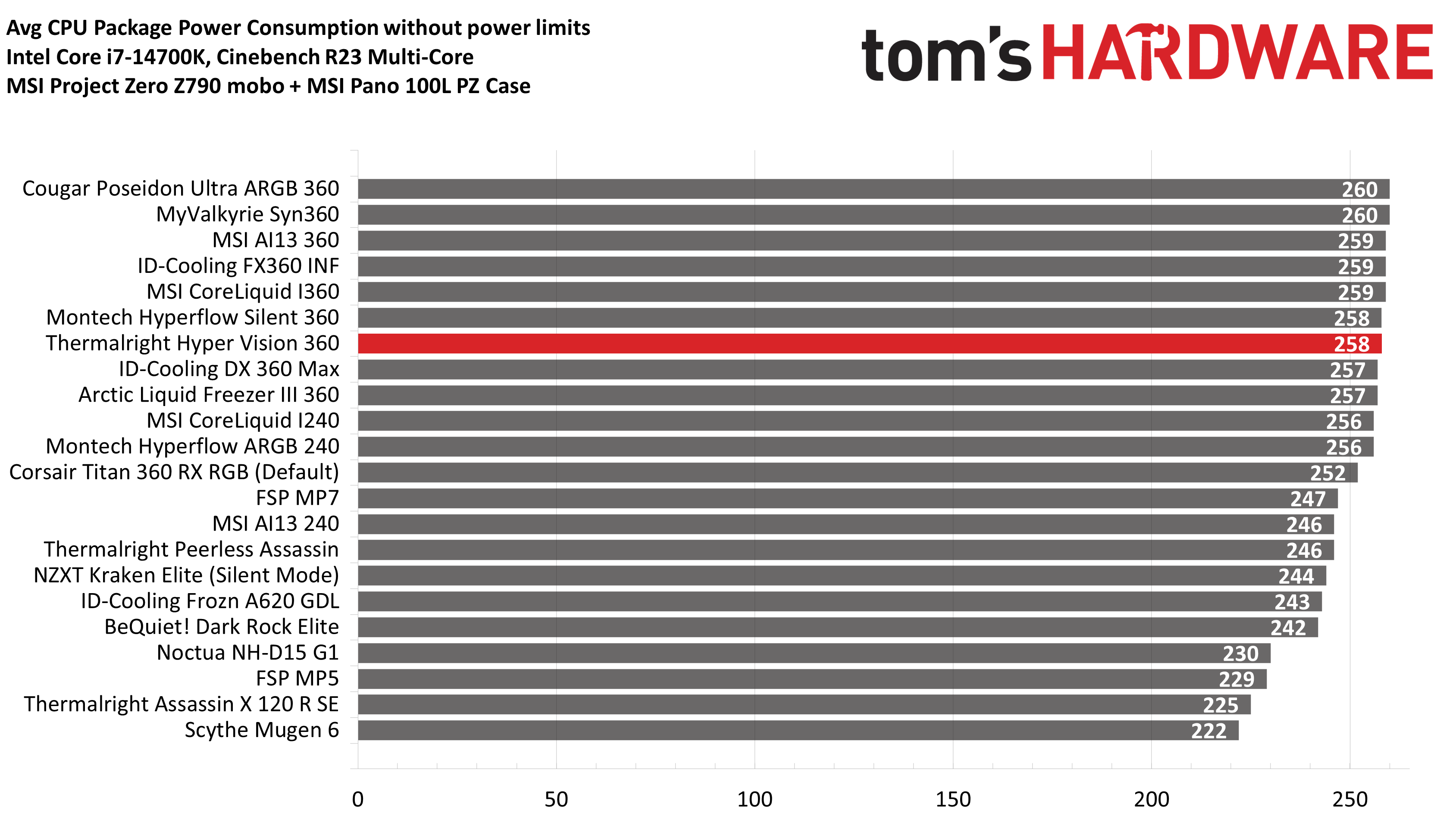

The overclocking expert published two charts showing performance and power consumption before and after delidding. In the Cinebench R23 multi-thread chart, which we embedded below, you can see a key takeaway: the delidded Ryzen X3D chip could deliver up to 9% better performance in this productivity benchmark. However, it is questionable whether the 73% increase in power consumption would be worth it. Der8auer also tested Counter-Strike 2 at 4K during his video, and provided a similar chart.
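To see what that trade-off means for efficiency, the short Python sketch below converts the two percentages quoted above into relative performance per watt. It treats the 9% and 73% figures as applying to the same Cinebench run, which is an assumption, and `relative_efficiency` is a name made up for this example.

```python
def relative_efficiency(perf_gain_pct: float, power_increase_pct: float) -> float:
    """Performance per watt relative to stock (1.0 means unchanged)."""
    perf_ratio = 1 + perf_gain_pct / 100
    power_ratio = 1 + power_increase_pct / 100
    return perf_ratio / power_ratio

# Overclocked, delidded chip: +9% performance for +73% power.
ratio = relative_efficiency(9, 73)
print(f"{ratio:.2f}x stock performance per watt")
```

Under that assumption the overclocked, delidded chip delivers only about 63% of the stock chip's performance per watt, which frames the question of whether the extra speed is worth it.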

If you buy the AMD Ryzen 9 9950X3D but don't feel driven to wring every last ounce of performance out of it, Der8auer notes that the delidded CPU can run much cooler at stock settings. He seemed impressed that his sample could run at 65 degrees Celsius under load, which is a temperature reduction of 23 degrees Celsius compared to the CPU as shipped from AMD with the IHS ‘octopus’ attached. This modded chip also ran at 290W under load, using about 20W less power than the original chip.

If you are interested in more 9950X3D delidding news, we recently retold the hair-raising tale of an ‘amateur’ delidding one of AMD’s best CPUs for gaming using some fishing line and a clothes iron - plus nerves of steel.

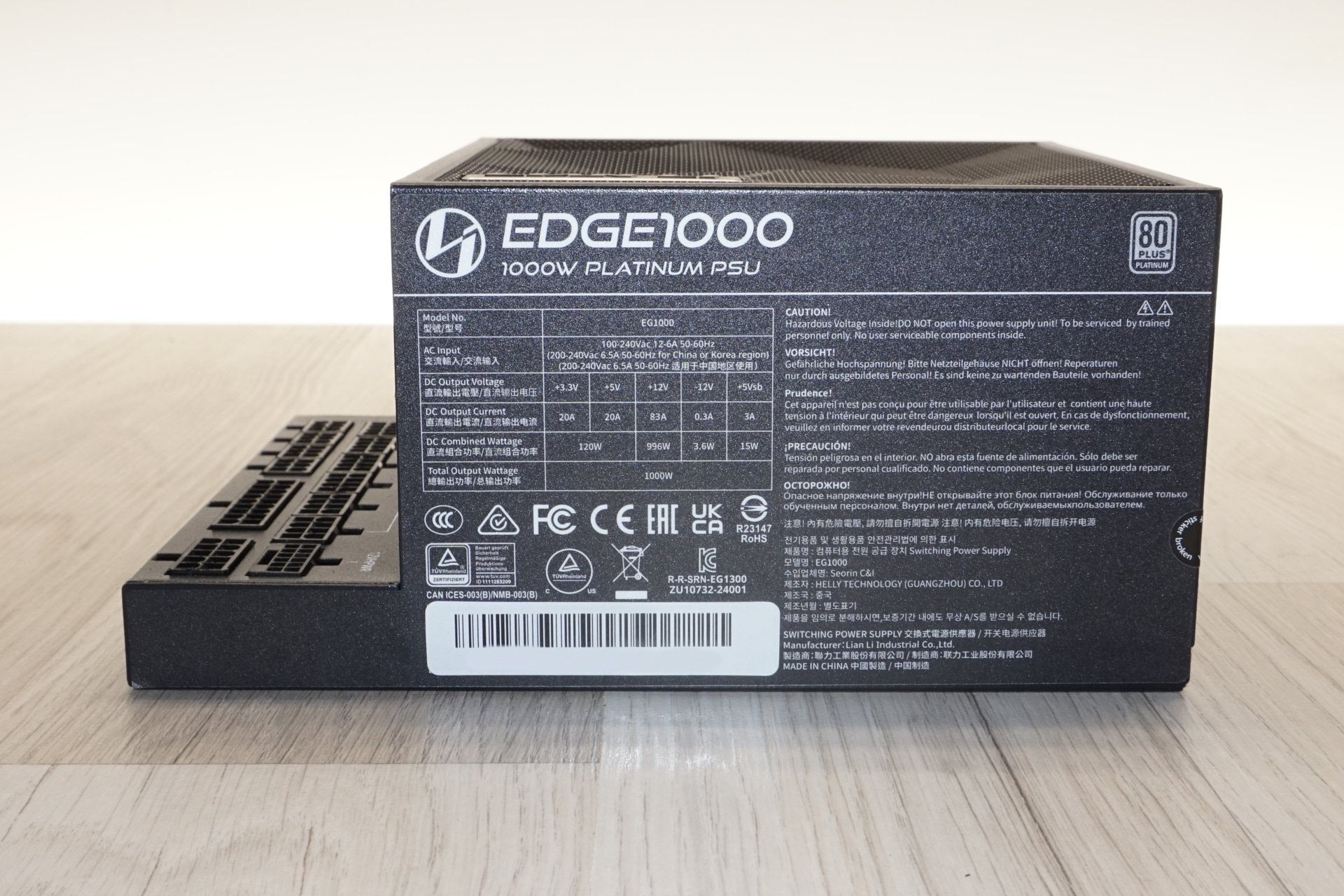

Lian Li Industrial Co. Ltd., established in 1983, is a Taiwanese company specializing in manufacturing computer cases, power supplies, and accessories. It's one of the oldest players in the PC market and is known for its focus on aluminum-based designs. Lian Li produces a range of products aimed at both consumer and industrial markets, with the company's offerings including mid-tower and full-tower cases and more compact cases for smaller builds. Amongst consumers and PC enthusiasts, Lian Li's products are recognized for their build quality, modularity, and innovative features, catering to a diverse set of needs in the PC building community.

We focus on the EG1000 Platinum ATX 3.1 PSU to see whether it deserves a spot in our list of best power supplies. This power supply unit partially complies with the ATX 3.1 design guide (the paragraphs related to electrical quality and performance). It is designed to meet the demanding requirements of modern gaming PCs, with its specifications indicating good efficiency and robust power delivery. Featuring fully modular cables with individually sleeved wires, dynamic fan control for optimal cooling, and advanced internal topologies, the EG1000 Platinum aims to provide both reliability and performance. However, behind its long list of features, the highlight of the EG1000 Platinum is the shape of the chassis itself, which forgoes the ATX cuboid shape and standard length.

Specifications and Design

In the Box

The Lian Li EG1000 Platinum ATX 3.1 PSU comes in straightforward, effective packaging. The outer box is L-shaped, designed to hint at the PSU's unique shape, while the unit itself is protected during shipping by a nylon pouch and dense packaging foam, ensuring it arrives in pristine condition.

The bundle goes slightly beyond the essentials, including mounting screws, the necessary AC power cable, and a basic manual, as well as a few cable ties and a PCI slot cover for routing external cables.

This power supply features a fully modular design, allowing for the removal of all DC power cables, including the 24-pin ATX connector. The cables are all-black, from connectors to wires, and each one is individually sleeved, contributing to the unit's premium aesthetic and improving cable management options. The 12V-2x6 cable is a minor exception, with the tips of the connectors being blue. There is also a relatively short (300 mm) SATA cable with four consecutive SATA connectors, which should be very useful in smaller cases with arrays of drives. Each cable is held together by its own reusable strap.

The ATX, 12V CPU, and PCI Express cables have four preinstalled wire combs. The wire combs can be easily moved to any position across the cable or removed completely should the user wish to.

External Appearance

The Lian Li EG1000 Platinum ATX 3.1 PSU is housed in a unique chassis that measures 182 mm long, considerably longer than the standard ATX dimensions. This increased length is due to the L-shaped design, where the cable connectors are placed horizontally, making the body deeper. The extended length and design might require careful consideration of cable paths in standard cases, as the unit is primarily designed with dual chamber cases in mind.

The EG1000 Platinum ATX 3.1 PSU's aesthetic is minimalist, featuring a smooth and simple matte black paint finish. The left and right sides are entirely covered by large stickers, with the left side displaying purely decorative elements and the right side providing detailed electrical specifications and certifications. The bottom of the unit is equipped with a unique mesh fan finger guard that spans its entire surface, with a badge bearing the company's logo on the lower left corner, contributing to the sleek appearance.

The front side of the PSU is home to the standard on/off switch and AC cable receptacle. The rear is more complex, featuring an internal USB connector hub that allows users to connect multiple devices requiring an internal USB header. This is particularly useful for those with motherboards that have limited USB headers. The modular cable connectors are clearly organized and labelled for easy and error-free connections, although they are not colour-coded.

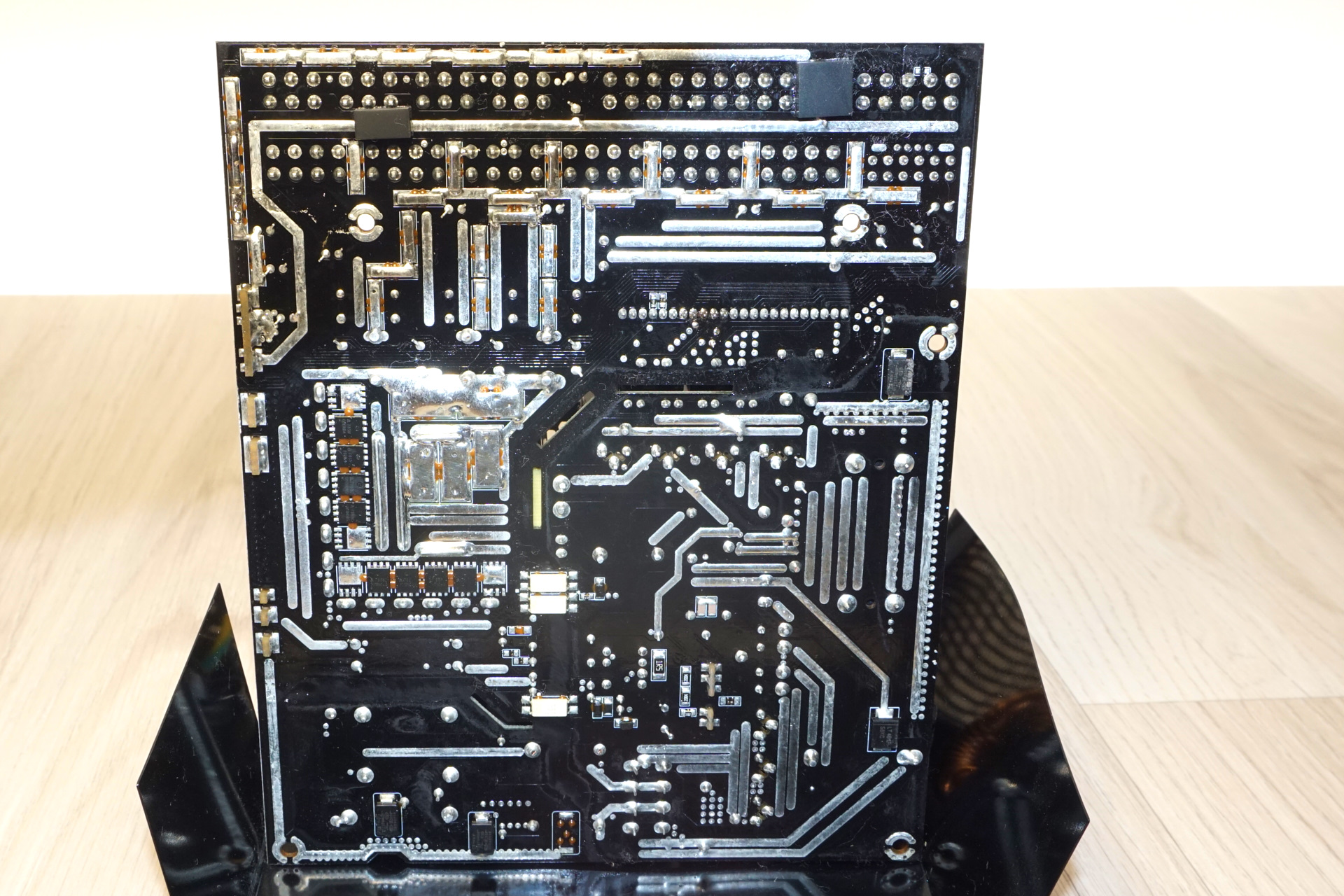

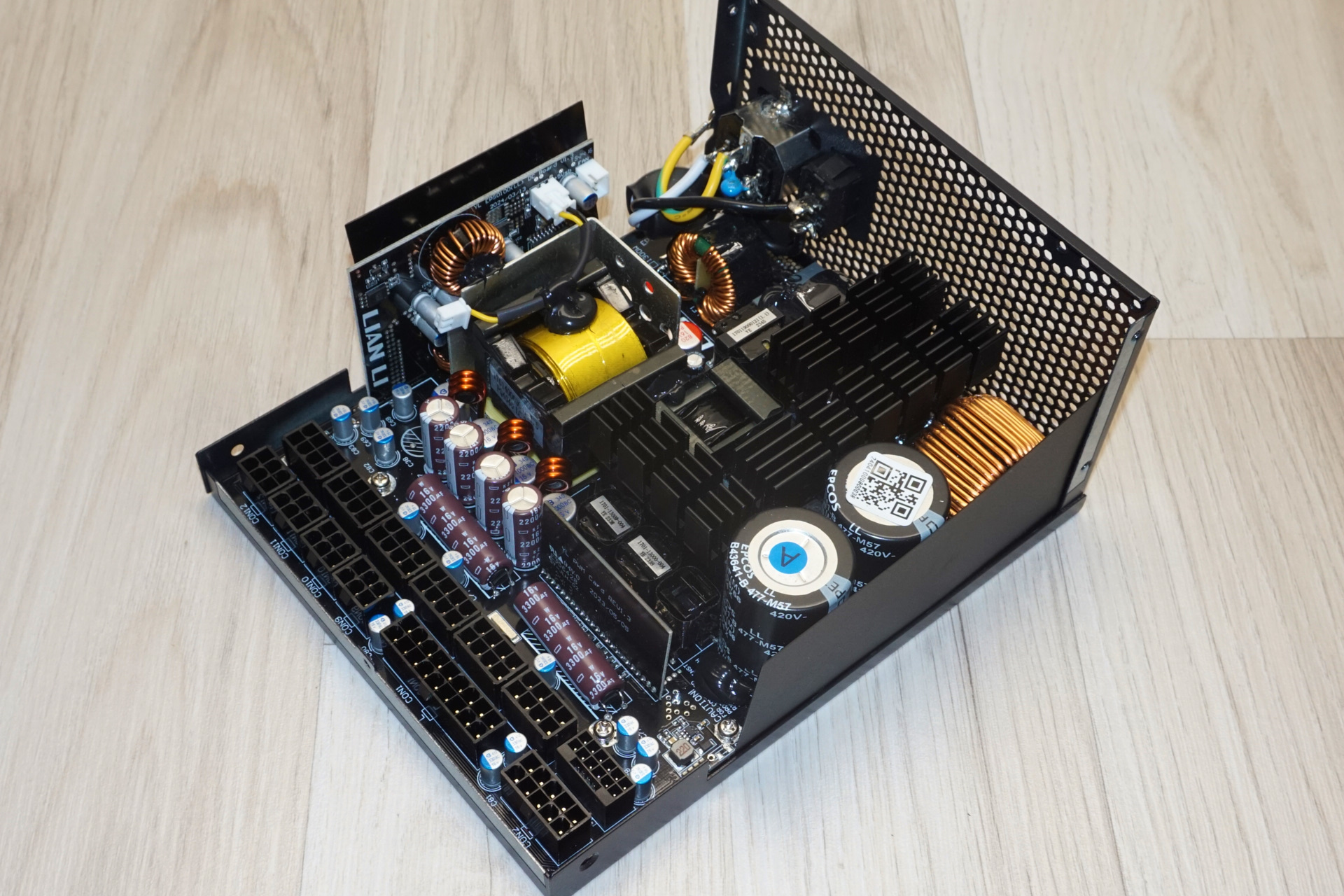

Internal Design

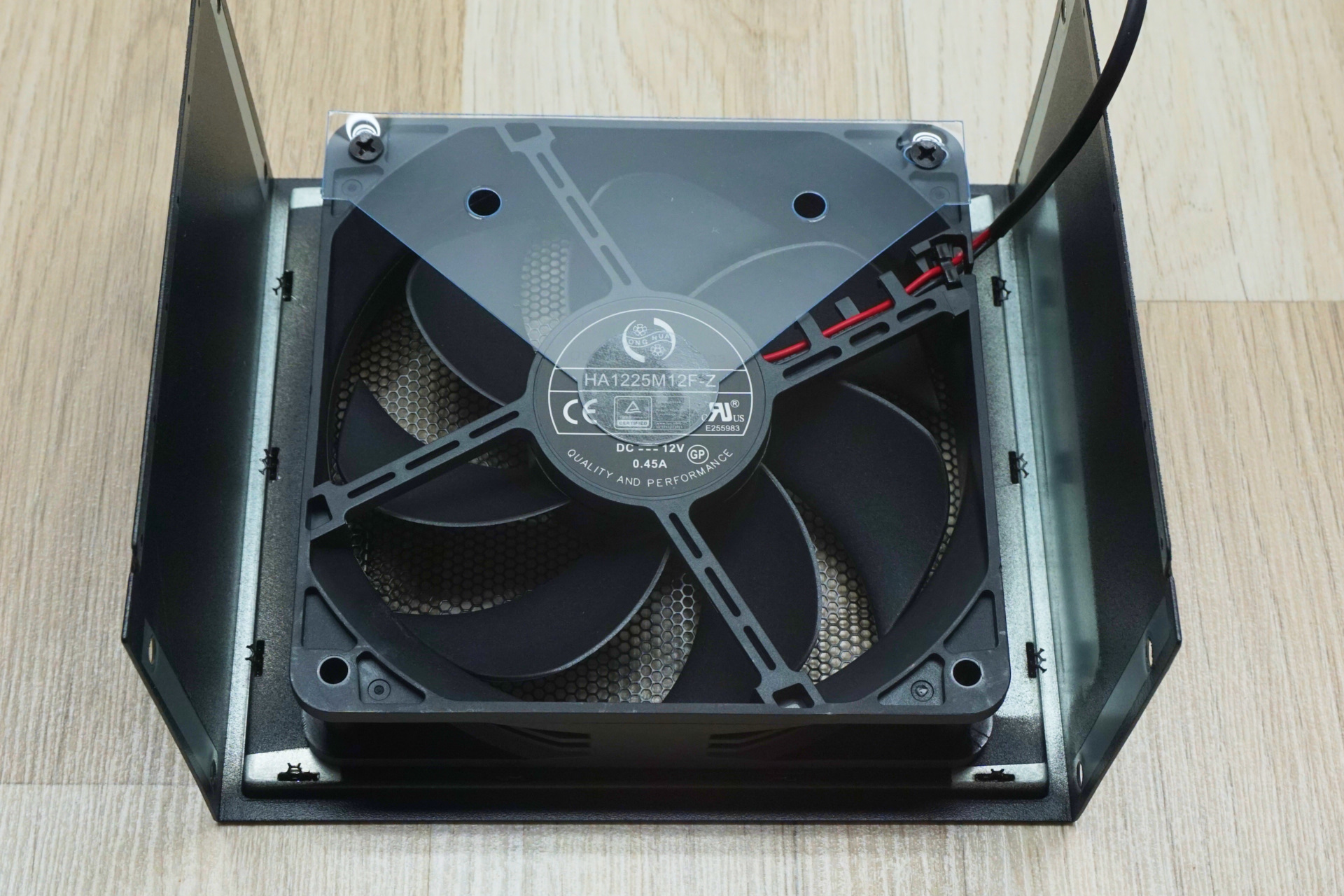

The Lian Li EG1000 Platinum ATX 3.1 PSU is equipped with a Hong Hua HA1225M12F-Z 120 mm fan, which features a Fluid Dynamic Bearing (FDB) engine. This type of fan is well-regarded for its quality and longevity and is commonly found in high-end power supplies. It has a modest maximum speed of 2000 RPM, which should be sufficient considering the unit's high efficiency.

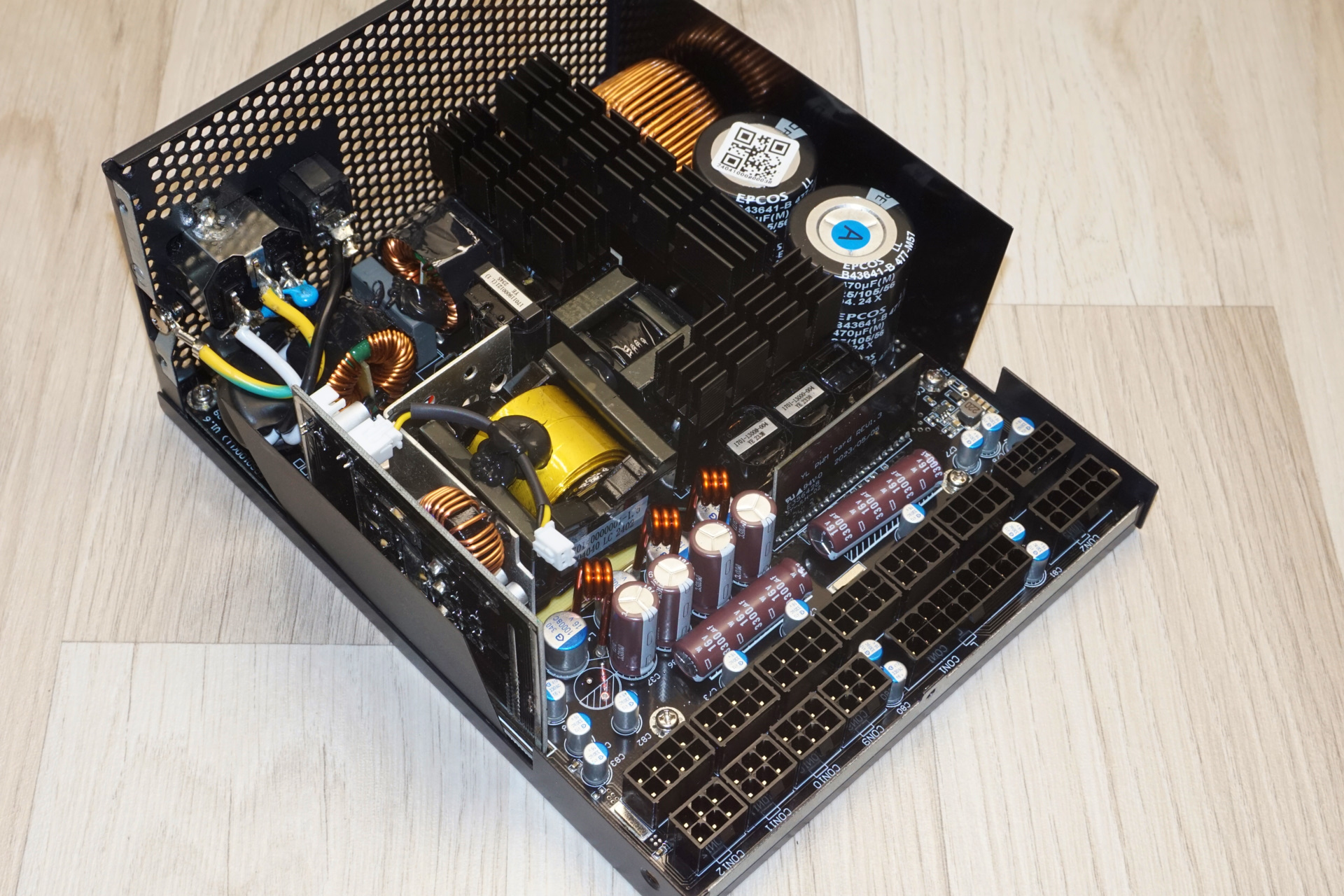

The Lian Li EG1000 Platinum ATX 3.1 PSU is manufactured by Helly Technology, a relatively young but reputable OEM founded in 2008 in China. Despite its shorter history, Helly Technology has established a solid presence in the power supply market with platforms supporting mid to high-tier products.

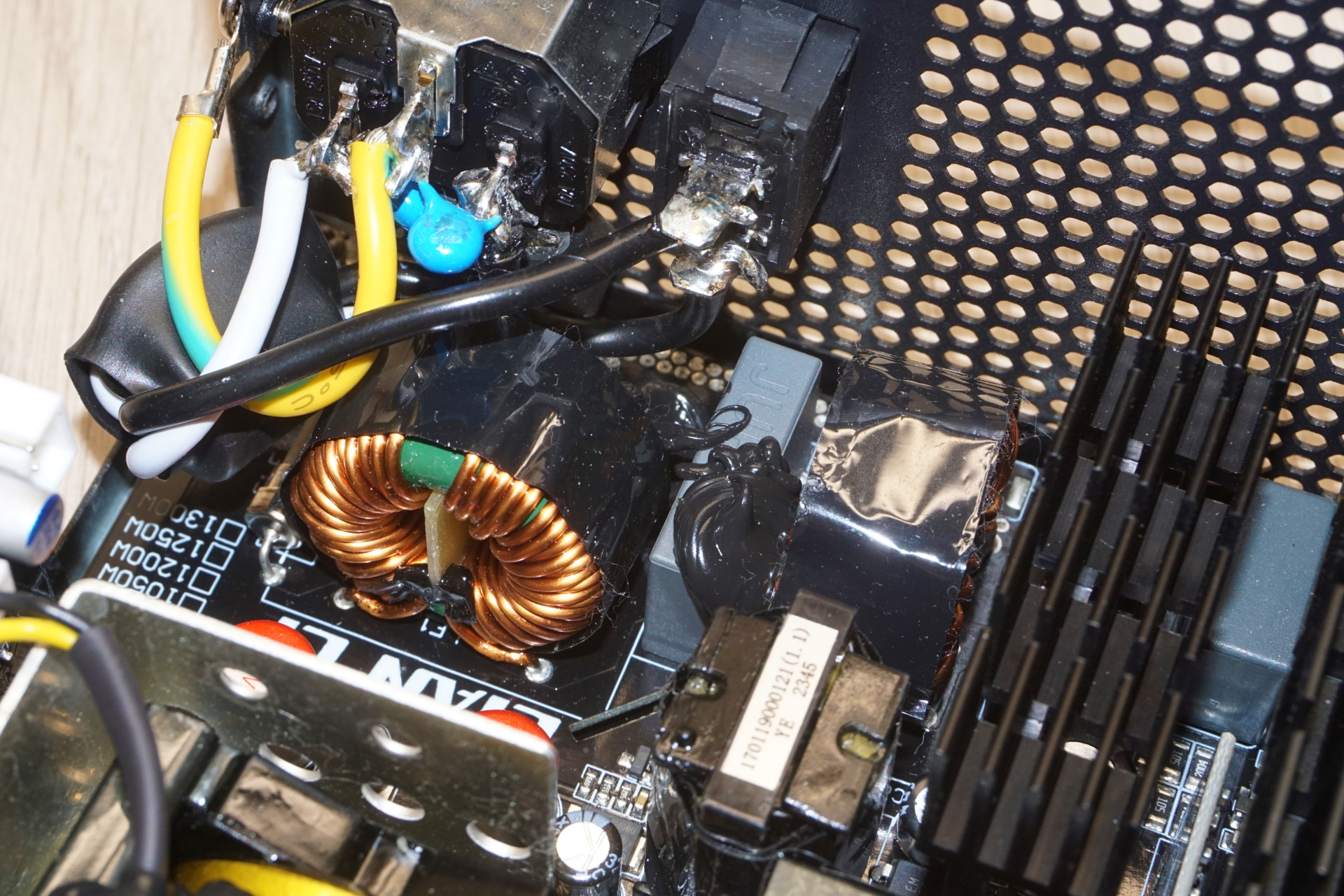

The input stage features a modest transient filter with two Y capacitors, one X capacitor, and two filtering inductors, which is somewhat less effective than those found in other high-tier units. Two rectifying bridges on a dedicated heatsink handle the AC voltage input. The Active Power Factor Correction (APFC) setup includes three MOSFETs (PTA28N50) and a diode on the large heatsink across the edge of the PCB, as well as a large inductor and two EPCOS 470 μF capacitors.

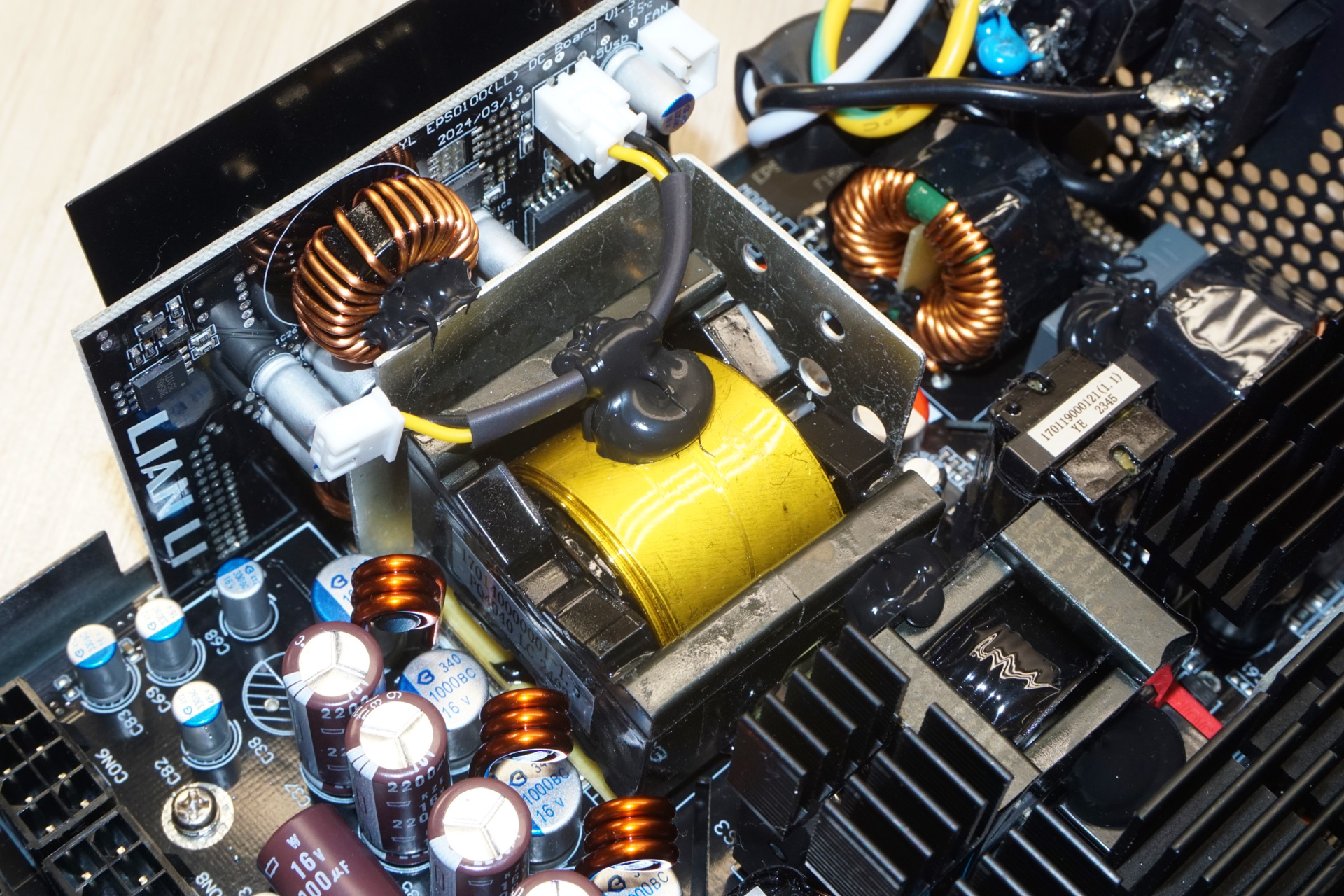

In the primary stage, the Lian Li EG1000 utilizes a full-bridge LLC topology with four OSG55R190F MOSFETs from Oriental Semiconductor mounted on a dedicated heatsink. The secondary stage employs eight G013N04G MOSFETs located on the underside of the main PCB to generate the primary 12V rail, while the 3.3V and 5V rails are produced by DC-to-DC circuits. The secondary side capacitors include a mix of Rubycon and Nippon Chemi-Con products, all from Japanese manufacturers known for their high quality.

Cold Test Results

Cold Test Results (25°C Ambient)

For the testing of PSUs, we are using high precision electronic loads with a maximum power draw of 2700 Watts, a Rigol DS5042M 40 MHz oscilloscope, an Extech 380803 power analyzer, two high precision UNI-T UT-325 digital thermometers, an Extech HD600 SPL meter, a self-designed hotbox and various other bits and parts.

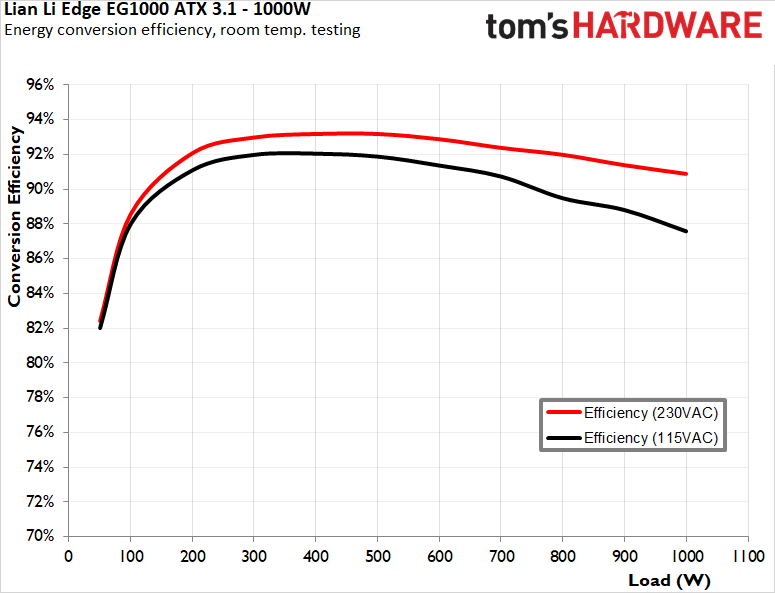

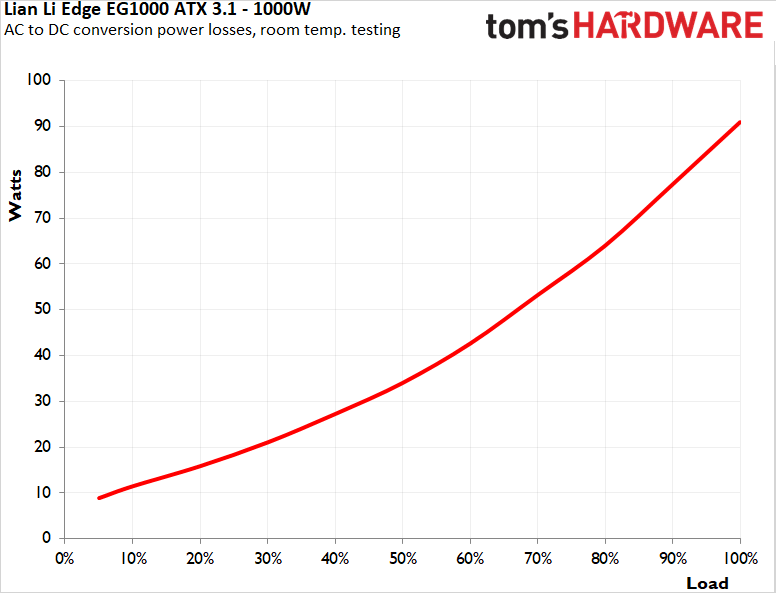

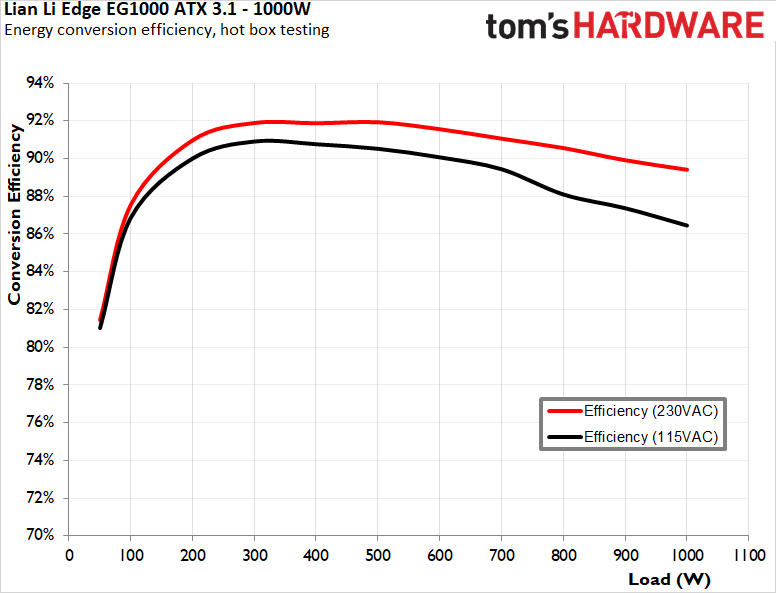

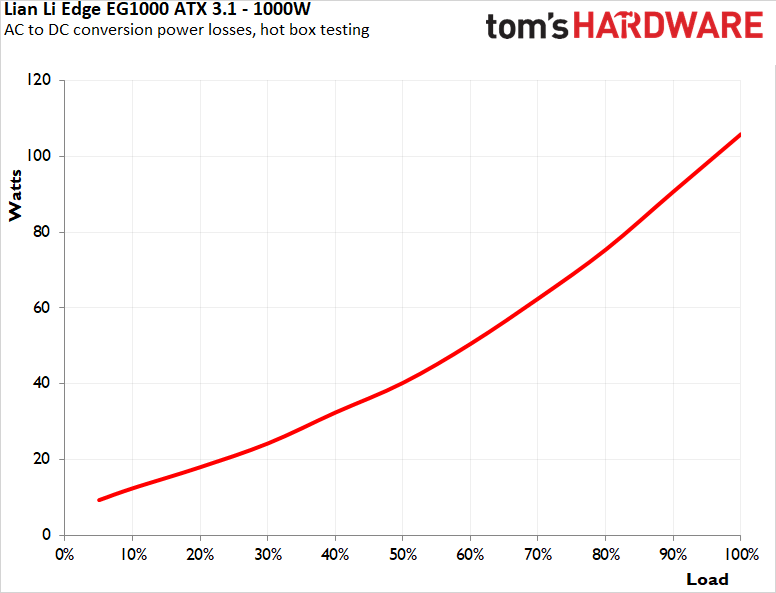

The Lian Li EG1000 Platinum ATX 3.1 PSU meets the 80Plus Platinum certification standards when the input voltage is 115 VAC, even if only barely. When tested with a 115 VAC input, this PSU achieves an average nominal load efficiency of 90.4% across its operational range from 20% to 100% of its capacity, increasing to 92.3% when operated with a 230 VAC input. It would not pass the 80Plus Platinum requirements with an input voltage of 230 VAC as the half-load efficiency is not nearly high enough. The efficiency under very low load conditions is acceptably high.

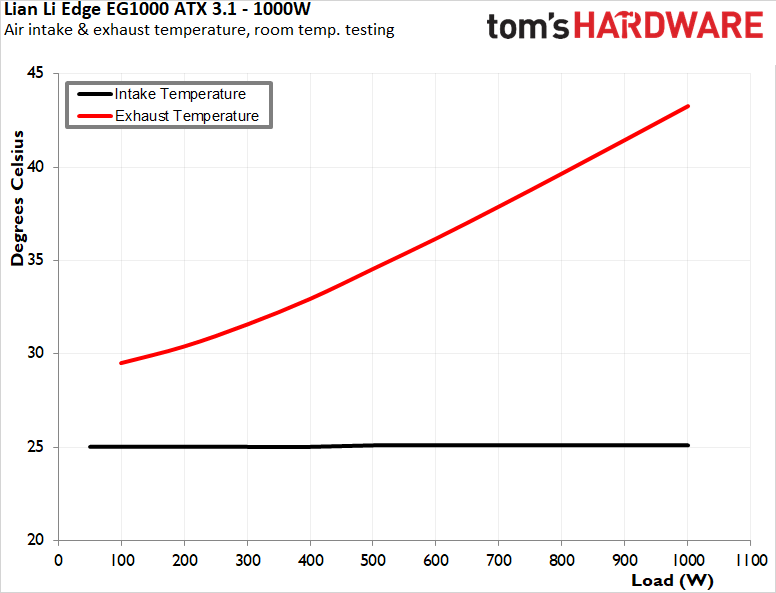

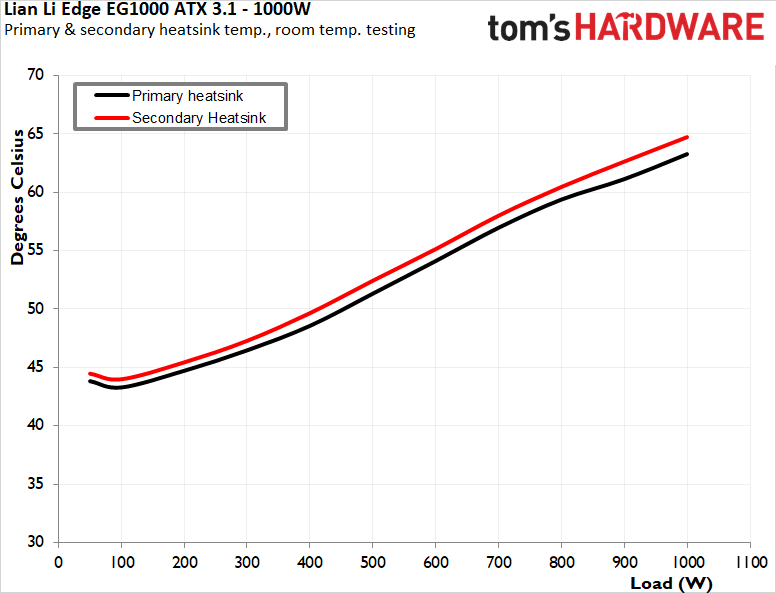

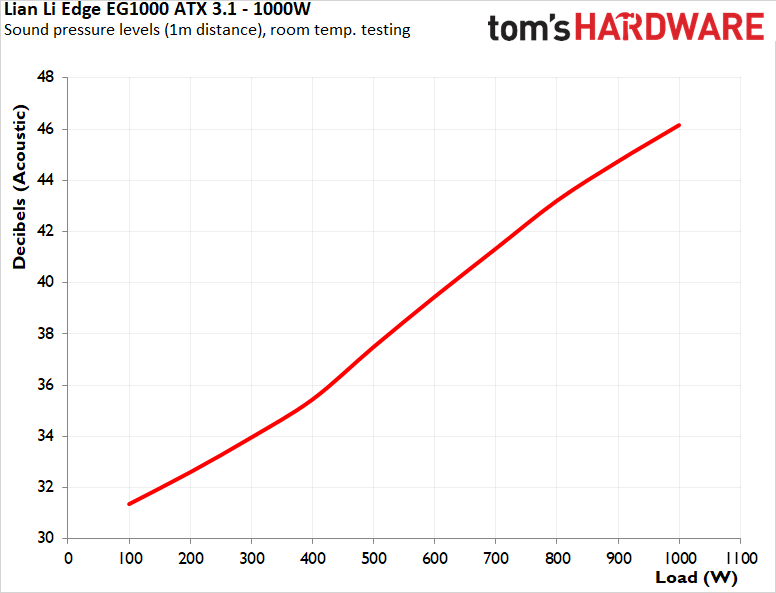

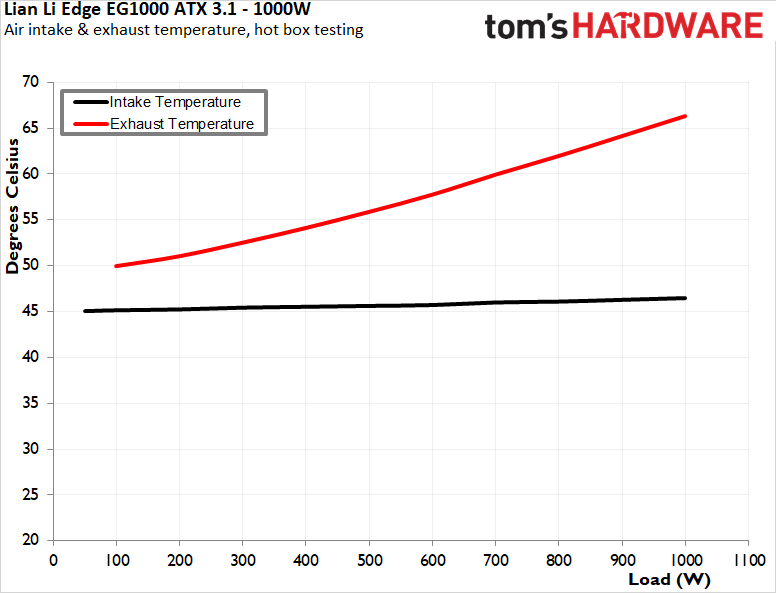

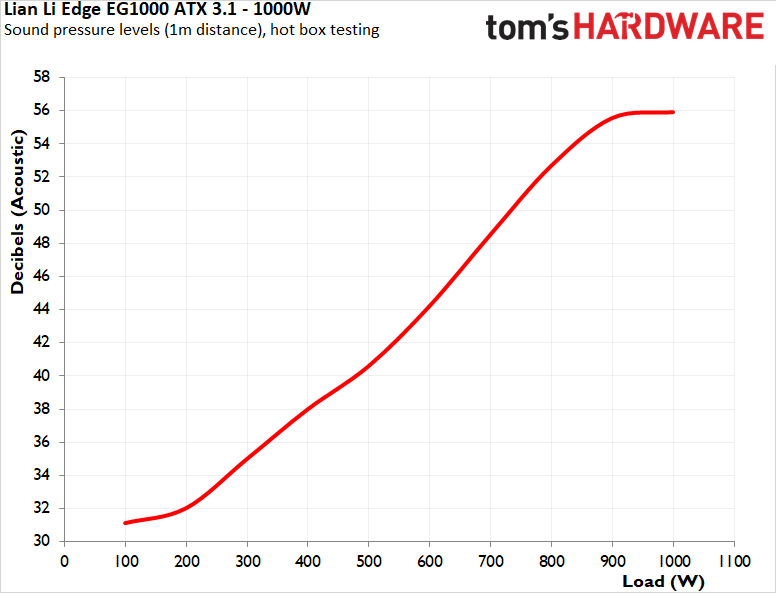

The Lian Li EG1000 Platinum ATX 3.1 PSU demonstrates fair thermal performance and acoustic characteristics during room temperature testing. The fan does not activate immediately but starts when the load is just under 100 Watts. As the load increases, the fan speed rises almost linearly with it. However, due to the PSU's small proportions and 120 mm fan, internal temperatures are slightly higher than other units in its class. Acoustically, the fan operates quietly only at loads below 300 Watts; beyond that it becomes noticeable in a quiet environment and keeps getting louder as the load increases.

Hot Test Results

Hot Test Results (~45°C Ambient)

The Lian Li EG1000 Platinum ATX 3.1 PSU maintains commendable efficiency during hot testing. The unit achieves an average nominal load efficiency of 89.1% with a 115 VAC input and 91% with a 230 VAC input. This indicates some degradation due to the elevated ambient temperature but within reasonable limits. There are signs of thermal stress at maximum load, with greater efficiency degradation.
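To give a sense of what a point or so of efficiency means in practice, the Python sketch below converts an efficiency figure into wall draw and waste heat. It applies the 115 VAC averages from the cold test (90.4%) and the hot test (89.1%) to a full 1000 W load, which is a simplifying assumption because those averages span the whole 20% to 100% load range. The function names are invented for illustration.

```python
def input_power_w(output_w: float, efficiency: float) -> float:
    """Power drawn from the wall for a given DC output and efficiency (0-1)."""
    return output_w / efficiency

def heat_loss_w(output_w: float, efficiency: float) -> float:
    """Power dissipated inside the PSU as heat."""
    return input_power_w(output_w, efficiency) - output_w

LOAD_W = 1000.0  # rated output of the unit
for label, eff in (("cold, 115 VAC", 0.904), ("hot, 115 VAC", 0.891)):
    print(f"{label}: {heat_loss_w(LOAD_W, eff):.1f} W of heat "
          f"at {input_power_w(LOAD_W, eff):.1f} W from the wall")
```

Under that assumption the hot-test figure means roughly 16 W of additional heat inside an already compact chassis, which helps explain why the fan has to work harder.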

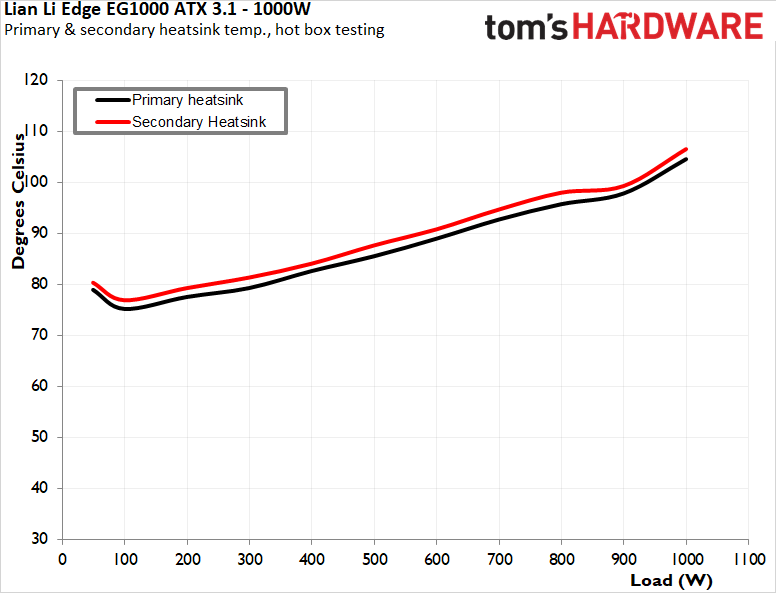

Even though the ambient temperature is significantly higher, the fan once again does not start immediately, engaging only once the load is a little higher than 70 Watts. It maintains its linear speed profile but accelerates more rapidly, reaching its maximum speed just below a 900-watt load. Noise levels stay fairly low when the load is below 300-400 Watts, but the noise output increases rapidly after that point, with the EG1000 becoming very loud at loads above 800 Watts.

The Lian Li EG1000 Platinum's relatively compact size does not do it any favors when it comes to thermal performance. The internal temperatures are on the high side for an 80Plus Platinum-certified unit, although they stay within safe operational limits. There is a small spike at maximum load due to the fan's inability to increase its speed any further, but the final temperatures are far too low to trigger an OTP event.

PSU Quality and Bottom Line

Power Supply Quality

The Lian Li EG1000 Platinum exhibits fairly good voltage filtering, with the 12V rail showing a maximum ripple of 42 mV, the 5V rail at 22 mV, and the 3.3V rail at 24 mV. The overall voltage regulation is within acceptable limits, with the 12V rail at 0.9%, the 5V rail at 1.4%, and the 3.3V rail at 1.1%. These are not record-setting figures, but the power quality is very good, even considering this is a top-tier product.
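For context, the ATX design guide allows up to 120 mV of ripple on the 12V rail and 50 mV on the 5V and 3.3V rails. The minimal sketch below expresses the figures measured above as a share of those limits; the dictionary and function names are our own.

```python
# ATX design guide ripple limits and the maximum ripple measured above (mV).
ATX_RIPPLE_LIMIT_MV = {"12V": 120, "5V": 50, "3.3V": 50}
measured_ripple_mv = {"12V": 42, "5V": 22, "3.3V": 24}


def ripple_share(measured, limits):
    """Return measured ripple as a fraction of the allowed limit for each rail."""
    return {rail: measured[rail] / limits[rail] for rail in limits}


for rail, share in ripple_share(measured_ripple_mv, ATX_RIPPLE_LIMIT_MV).items():
    print(f"{rail}: {share:.0%} of the ATX limit")
```

Every rail stays below half of its allowed ripple, which is consistent with the "very good, but not record-setting" verdict.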

During our thorough assessment, we evaluate the essential protection features of every power supply unit we review, including Over Current Protection (OCP), Over Voltage Protection (OVP), Over Power Protection (OPP), and Short Circuit Protection (SCP). The over-current protection (OCP) results are satisfactory, with the 3.3V and 5V rails reacting at 118%, while the 12V rail is significantly slack at 137%. The over-power protection (OPP) response is a bit lax, kicking in at 132%.

Bottom Line

The Lian Li EG1000 Platinum ATX 3.1 PSU positions itself as a premium power supply unit targeting enthusiasts and high-performance system builders seeking something out of the ordinary and aesthetically superior to most other designs. Its unique L-shaped design caters specifically to dual-chamber chassis, offering a distinctive approach to modularity and cable management. However, this design choice might not resonate with a broader audience, as it deviates from the conventional PSU form factor, potentially limiting its compatibility with standard ATX cases and user acceptance. Its unconventional shape, which extends the PSU's length to 182 mm, could pose installation challenges in some cases. However, the unit is primarily marketed towards cases specifically designed to accommodate this kind of unit.

Build quality and aesthetics are where the EG1000 Platinum stands out from the crowd. Aside from its unique L-shaped chassis, the all-black, fully modular cables featuring individually sleeved wires and pre-installed wire combs are possibly the aesthetic highlight of this unit. The integrated USB hub may be redundant for most users. Still, it can be very useful for PC builders wanting to integrate many devices with a motherboard with only one or two headers available. Lian Li designed the EG1000 Platinum to be elegant and pleasant to look at, not extravagant.

Thermally, the EG1000 Platinum performs adequately but not exceptionally. During both cold and hot testing, the 120 mm Hong Hua fan, while reliable, struggles to maintain lower internal temperatures due to its size and the PSU's compact internal volume. The fan's linear speed profile ensures it ramps up appropriately with increasing load. Still, the unit becomes noticeably loud at higher speeds, which could be a concern for users seeking a quieter system even when heavily loaded. Even with the slight hints of thermal stress at maximum load, the unit manages to stay within safe thermal limits, but it does so at the cost of higher noise levels under heavy loads.

Electrically, the EG1000 Platinum delivers solid performance and power quality. It meets the 80Plus Platinum efficiency requirements, even though it just barely clears the threshold for an input voltage of 115 VAC. The average nominal range efficiency is fairly good but not high for a Platinum-certified unit. Voltage regulation and ripple suppression are both very good, with the unit delivering a stable and good quality power output under any operating conditions.

Overall, the Lian Li EG1000 Platinum ATX 3.1 PSU is a well-built, reliable power supply with a few caveats. Its unique design and premium components are offset by potential installation challenges and noise issues under heavy load. While it offers good electrical performance and modularity, its appeal may be limited to users with specific chassis requirements. For a price of $175, it provides fair value but may not be the best choice for every PC builder.

MORE: Best Power Supplies

MORE: How We Test Power Supplies

MORE: All Power Supply Content

Nvidia's PhysX and Flow SDKs are now completely open-source under the permissive BSD-3 license. As members of the developer community will know, these libraries have been open-source since late 2018, except for the key GPU simulation kernels. Releasing the source code for these kernels paves the way for game developers to integrate custom and highly optimized variations of PhysX and Flow, while the modding community might see this as an opportunity to run legacy PhysX code on unsupported RTX 50 GPUs through compatibility layers.

PhysX is a real-time physics engine that offloads complex calculations to your GPU, capitalizing on its parallel processing, and powered under the hood by CUDA. This technology has been employed in a handful of older titles from the late 2000s and early 2010s, notable examples of which include Mirror’s Edge, Batman: Arkham Asylum, Metro 2033, and Borderlands 2.

The fact that most of these games relied on a 32-bit PhysX implementation, combined with Nvidia's decision to discontinue 32-bit CUDA on their Blackwell GPUs, causes the intricate physics simulations that are designed and optimized for parallel computing to fall back to the CPU, crippling performance. Flow is more specialized and serves to power fluid simulation mechanics. Think of fire, gas, and smoke effects.

With PhysX 4.0, Nvidia made public the CPU-side simulation source code of PhysX, but the GPU-side kernels were still proprietary. Limited to the binaries only, understanding the system's internals and customizing it for specific needs was almost impossible. However, with Nvidia's special GPU acceleration sauce now out in the open, anyone can see, study, modify, and build on these existing libraries.

We won't be surprised if modders now work to create a 32-bit to 64-bit compatibility layer to enable PhysX support for older titles on Blackwell GPUs. With access to the source code, it's technically possible to decouple PhysX and Flow from CUDA and port the technology to hardware-agnostic platforms like OpenCL/Vulkan to enable support for AMD and Intel processors, but that's much easier said than done.

For the most part, PhysX is a dead technology for games and has been superseded by alternatives; for example, Unreal Engine 5 uses the new Chaos Physics engine. However, access to PhysX's GPU kernels and the shader simulation code for Flow is likely to have a far-reaching impact on graphics engineering, robotics, architecture and design, animation, and more.

Another Blackwell GPU bites the dust, as the meltdown reaper has reportedly struck a Redditor's MSI GeForce RTX 5090 Gaming Trio OC, with the impact tragically extending to the power supply as well. Ironically, the user avoided third-party cables and specifically used the original power connector, the one that was supplied with the PSU, yet both sides of the connector melted anyway.

Nvidia's GeForce RTX 50 series GPUs face an inherent design flaw where all six 12V pins are internally tied together. The GPU has no way of knowing if all cables are seated properly, preventing it from balancing the power load. In the worst-case scenario, five of the six pins may lose contact, resulting in almost 500W (41A) being drawn from a single pin. Given that PCI-SIG originally rated these pins for a maximum of 9.5A, this is a textbook fire/meltdown risk.
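The arithmetic behind that worst case is simple enough to sketch. The snippet below assumes a nominal 12 V rail and uses the 9.5A per-pin rating mentioned above; the function name and the 600W healthy-case example are our own choices for illustration.

```python
RAIL_VOLTAGE = 12.0  # nominal rail voltage, in volts
PIN_RATING_A = 9.5   # per-pin rating cited above, in amps


def current_per_pin(power_w, pins_in_contact):
    """Current through each pin, assuming the load splits evenly across them."""
    return power_w / RAIL_VOLTAGE / pins_in_contact


healthy = current_per_pin(600, 6)     # all six 12V pins sharing a 600W load
worst_case = current_per_pin(500, 1)  # nearly 500W forced through one pin

print(f"healthy: {healthy:.1f} A per pin")
print(f"worst case: {worst_case:.1f} A, {worst_case / PIN_RATING_A:.1f}x the rating")
```

Even the healthy case leaves little margin below the rating, which is why a single poorly seated pin can push its neighbors over the limit.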

The GPU we're looking at today is the MSI RTX 5090 Gaming Trio OC, which, on purchase, set the Redditor back a hefty $2,900. That's still a lot better than the average price of an RTX 5090 from sites like eBay, currently sitting around $4,000. Despite using Corsair's first-party 600W 12VHPWR cable, the user was left with a melted GPU-side connector, a fate which extended to the PSU.

MSI 5090 Gaming trio OC melted cable (repost with pics) from r/nvidia

The damage, in the form of a charred contact point, is quite visible and clearly looks as if excess current was drawn from one specific pin, corresponding to the same design flaw mentioned above. The user is weighing an RMA for their GPU and PSU, but a GPU replacement is quite unpredictable due to persistent RTX 50 series shortages. Sadly, these incidents are still rampant despite Nvidia's assurances before launch.

With the onset of enablement drivers (R570) for Blackwell, both RTX 50 and RTX 40 series GPUs began suffering from instability and crashes. Despite multiple patches from Nvidia, RTX 40 series owners haven't seen a resolution and are still reliant on reverting to older 560-series drivers. Moreover, Nvidia's decision to discontinue 32-bit OpenCL and PhysX support with RTX 50 series GPUs has left the fate of many legacy applications and games in limbo.

As of now, the only foolproof method to secure your RTX 50 series GPU is to ensure optimal current draw through each pin. You might want to consider Asus' ROG Astral GPUs as they can provide per-pin current readings, a feature that's absent in reference RTX 5090 models. Alternatively, if feeling adventurous, maybe develop your own power connector with built-in safety measures and per-pin sensing capabilities?

AMD processors were instrumental in achieving a new world record during a recent Ansys Fluent computational fluid dynamics (CFD) simulation run on the Frontier supercomputer at the Oak Ridge National Laboratory (ORNL). According to a press release by Ansys, it ran a 2.2-billion-cell axial turbine simulation for Baker Hughes, an energy technology company, testing its next-generation gas turbines aimed at increasing efficiency. The simulation previously took 38.5 hours to complete on 3,700 CPU cores. By using 1,024 AMD Instinct MI250X accelerators paired with AMD EPYC CPUs in Frontier, the simulation time was slashed to 1.5 hours. This is more than 25 times faster, allowing the company to see the impact of the changes it makes on designs much more quickly.
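The speedup follows directly from the two run times quoted above. A minimal sketch, with function names of our own choosing:

```python
def speedup(old_hours, new_hours):
    """How many times faster the new run is."""
    return old_hours / new_hours


def runtime_reduction(old_hours, new_hours):
    """Fraction of the original run time that was eliminated."""
    return 1 - new_hours / old_hours


print(f"{speedup(38.5, 1.5):.1f}x faster")                   # 25.7x faster
print(f"{runtime_reduction(38.5, 1.5):.1%} less run time")   # 96.1% less run time
```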

A new supercomputing record has been set! Ansys, @bakerhughesco, and @ORNL have run the largest-ever commercial #CFD simulation using 2.2 billion cells and 1,024 @AMD Instinct GPUs on the world’s first exascale supercomputer. The result? A 96% reduction in simulation run… (April 4, 2025)

Frontier was once the fastest supercomputer in the world, and it was also the first one to break into exascale performance. It replaced the Summit supercomputer, which was decommissioned in November 2024. However, the El Capitan supercomputer, located at the Lawrence Livermore National Laboratory, broke Frontier’s record at around the same time. Both Frontier and El Capitan are powered by AMD GPUs, with the former boasting 9,408 AMD EPYC processors and 37,632 AMD Instinct MI250X accelerators. On the other hand, the latter uses 44,544 AMD Instinct MI300A accelerators.

Given those numbers, the Ansys Fluent CFD simulator apparently only used a fraction of the power available on Frontier. That means it has the potential to run even faster if it can utilize all the available accelerators on the supercomputer. It also shows that, despite Nvidia’s market dominance in AI GPUs, AMD remains a formidable competitor, with its CPUs and GPUs serving as the brains of some of the fastest supercomputers on Earth.
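How small a fraction? The quick calculation below uses the accelerator counts quoted above. It says nothing about how well the solver would actually scale to the full machine, which is an open question.

```python
ACCELERATORS_USED = 1_024       # MI250X accelerators used for the record run
FRONTIER_ACCELERATORS = 37_632  # MI250X accelerators installed in Frontier

fraction_used = ACCELERATORS_USED / FRONTIER_ACCELERATORS
print(f"{fraction_used:.1%} of Frontier's accelerators")  # 2.7% of Frontier's accelerators
```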

“By scaling high-fidelity CFD simulation software to unprecedented levels with the power of AMD Instinct GPUs, this collaboration demonstrates how cutting-edge supercomputing can solve some of the toughest engineering challenges, enabling breakthroughs in efficiency, sustainability, and innovation,” said Brad McCredie, AMD Senior Vice President for Data Center Engineering.

Even though AMD can deliver top-tier performance at a much cheaper price than Nvidia, many AI data centers prefer Team Green because of software issues with AMD’s hardware.

One high-profile example was Tiny Corp’s TinyBox system, which had instability problems with its AMD Radeon RX 7900 XTX graphics cards. The problem was so bad that Dr. Lisa Su had to step in to fix the issues. And even though it was purportedly fixed, the company still released two versions of the TinyBox AI accelerator, one powered by AMD and the other by Nvidia. Tiny Corp also recommended the more expensive Team Green version, with its six RTX 4090 GPUs, because of its driver quality.

If Team Red can fix the software support on its great hardware, then it could likely get more customers for its chips and get a more even footing with Nvidia in the AI GPU market.

Seasonic, a longstanding leader in power supply technology, is recognized for its expertise in designing and manufacturing high-performance power supply units (PSUs). With a reputation built on delivering durable, efficient, and reliable products, the company caters to a diverse range of users, including professionals with demanding workloads and gaming enthusiasts requiring stable power delivery. Seasonic's distinction lies in its in-house design and manufacturing of PSU platforms, with a strong emphasis on premium components and engineering excellence.

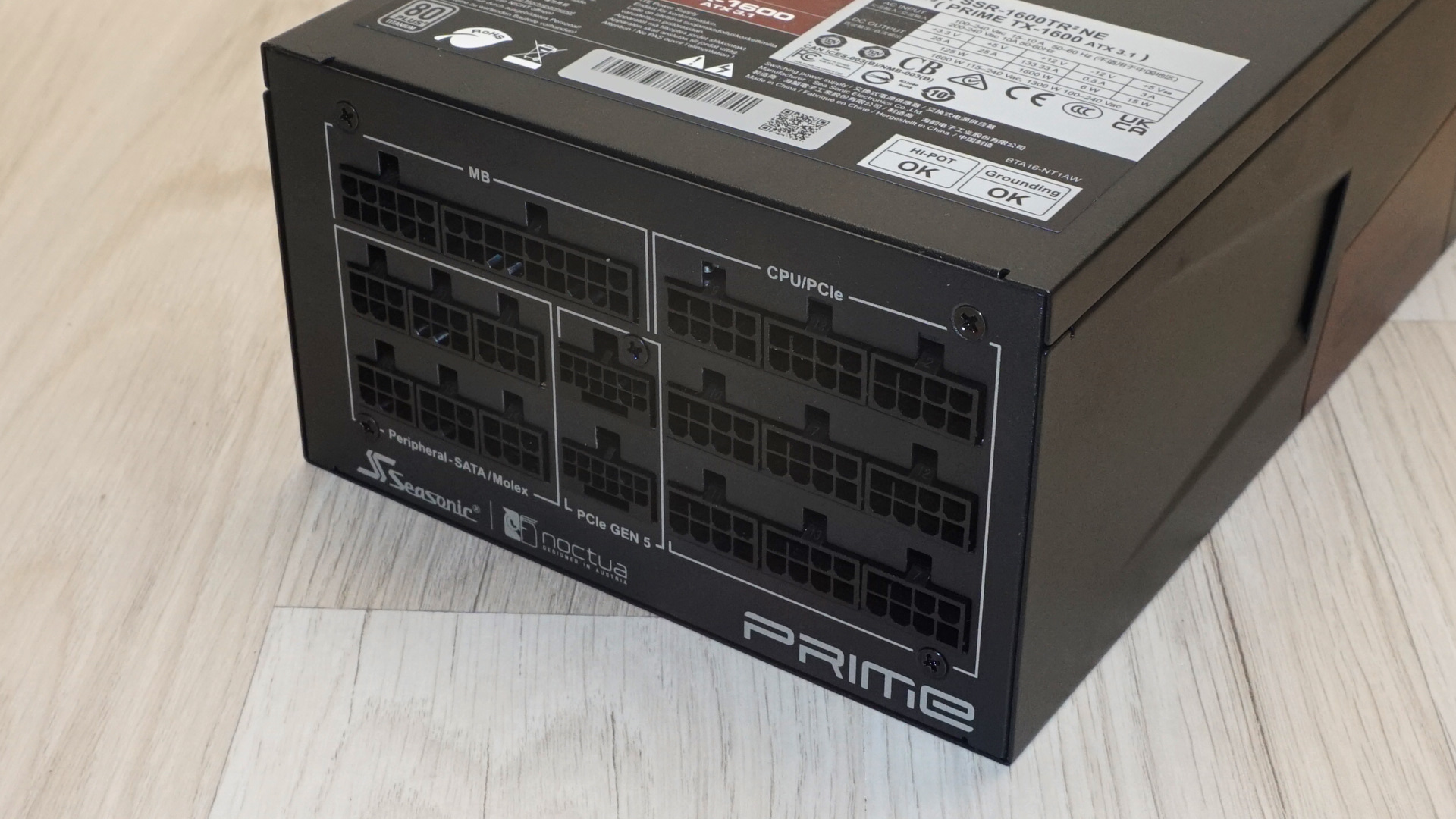

This review takes a close look at the Prime TX-1600 Noctua Edition, a collaborative effort between Seasonic and Noctua. This flagship model in Seasonic’s Prime series combines Seasonic’s proficiency in power supply design with Noctua’s renowned cooling expertise. The unit features titanium-level efficiency combined with very impressive specifications and a power rating of 1600W at 50°C, targeting insatiable power users who are building systems with extreme power demands. Its standout feature is Noctua’s NF-A12x25 HS-PWM fan, included to deliver superior cooling performance. The power supply also complies with ATX 3.1 and PCIe 5.1 standards, ensuring compatibility with the latest hardware, including GPUs requiring high transient power. The collaboration is further reflected in the PSU’s design, with Noctua’s brown color found throughout the product. It is an expensive product, but it sets the performance standard for top-tier PC PSUs, making it one of the best power supplies on the market.

Specifications and Design

In the Box

The Seasonic Prime TX-1600 Noctua Edition comes packaged in a durable, very long cardboard box featuring a black-and-brown theme. This color scheme departs from Seasonic's usual black-and-silver design for Titanium-rated units, aligning with Noctua’s signature aesthetic. Inside, the PSU is securely enclosed in a nylon pouch and further protected by dense foam inserts to ensure safe transport.

The included bundle is extensive, featuring mounting screws, a 90-degree ATX adapter that doubles as a jump-start tester, an AC IEC C19 power cable, cable ties, five cable straps, cable combs, and a metallic case badge. It is important to note that users must avoid jump-starting the PSU with the adapter connected to a motherboard.

The Prime TX-1600 features unique cables with individually sleeved wires in black and brown, complementing the unit’s aesthetic theme. These cables include two PCIe 5.1 12V-2x6 connectors for modern GPUs, six 6+2 pin PCI Express connectors, and 18 SATA connectors. Notably, two of the SATA connectors are 3.3 V cables, which may be incompatible with older devices and disable hot-swapping functionality.

External Appearance

The Seasonic Prime TX-1600 Noctua Edition features an understated and robust design. Its chassis is coated with self-cleaning satin black paint that resists fingerprints, with decorative embossments adorning the left and right sides, adding texture without compromising the semi-minimalist aesthetic. The PSU also prominently features Noctua’s signature brown in its fan guard plate, which covers most of the unit’s bottom.

The PSU deviates from standard ATX dimensions, with a length of 210mm—considerably longer than the ATX guide limit of 140mm. This extended size necessitates compatibility checks with cases to ensure proper fitment. The fan grille design prioritizes minimizing turbulence noise over aesthetics. Regardless, it seamlessly integrates into the PSU’s overall design.

The rear panel houses the IEC C20 AC power inlet, an on/off switch, and a hybrid fan mode button. Enabling hybrid fan mode allows the PSU to operate passively under lower loads, while disabling it forces the fan to spin at minimal speeds. The front side is filled with cable connectors, labeled for straightforward installation.

Internal Design

Internally, the Prime TX-1600 Noctua Edition is equipped with a Noctua NF-A12x25 HS-PWM 120mm fan. This fan incorporates advanced features such as an SSO2 bearing and Sterrox liquid-crystal polymer blades, which provide excellent thermal performance and reduced noise levels. There is no official datasheet for this particular model but our measurements revealed a maximum speed of about 2400 RPM.

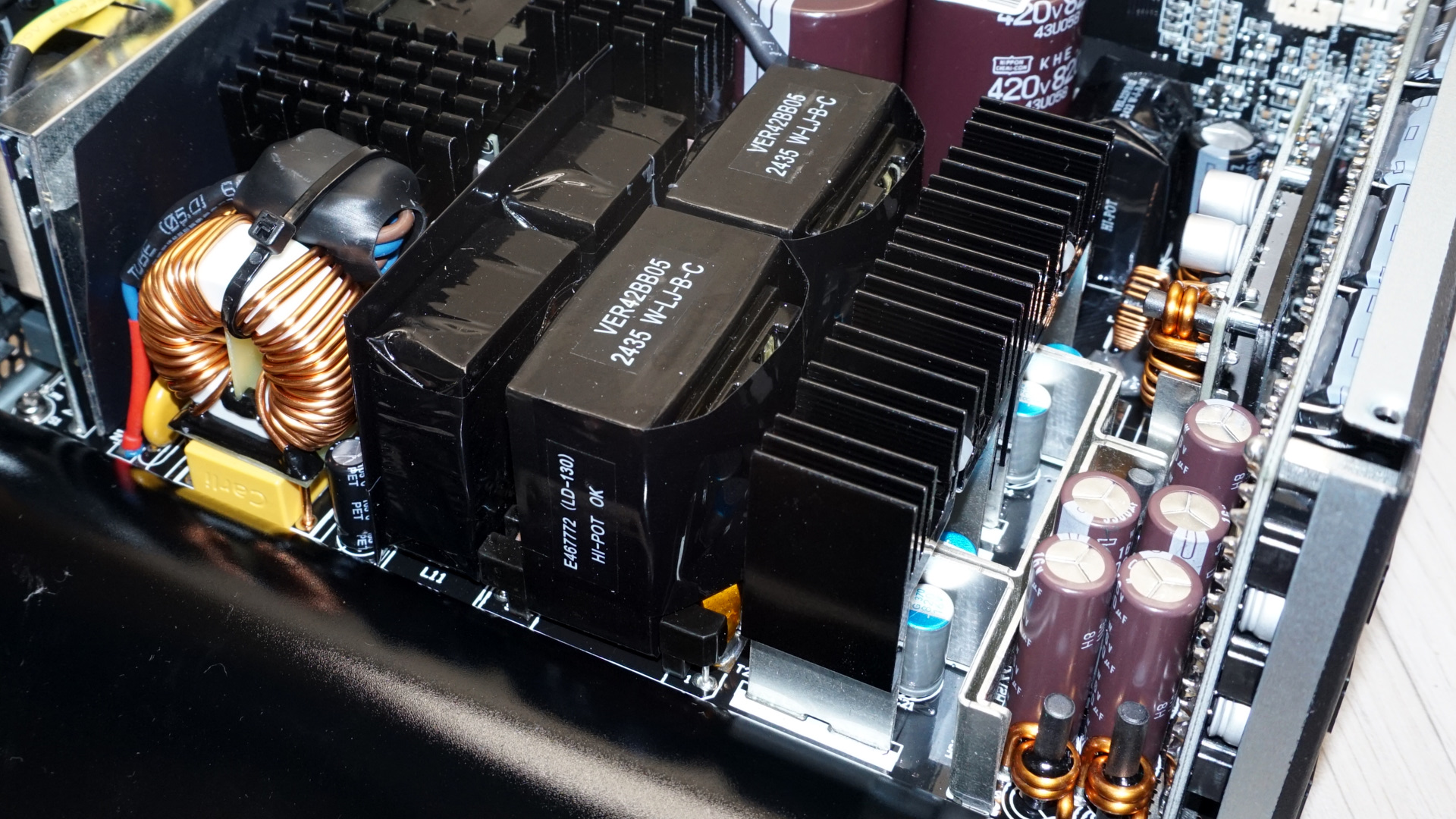

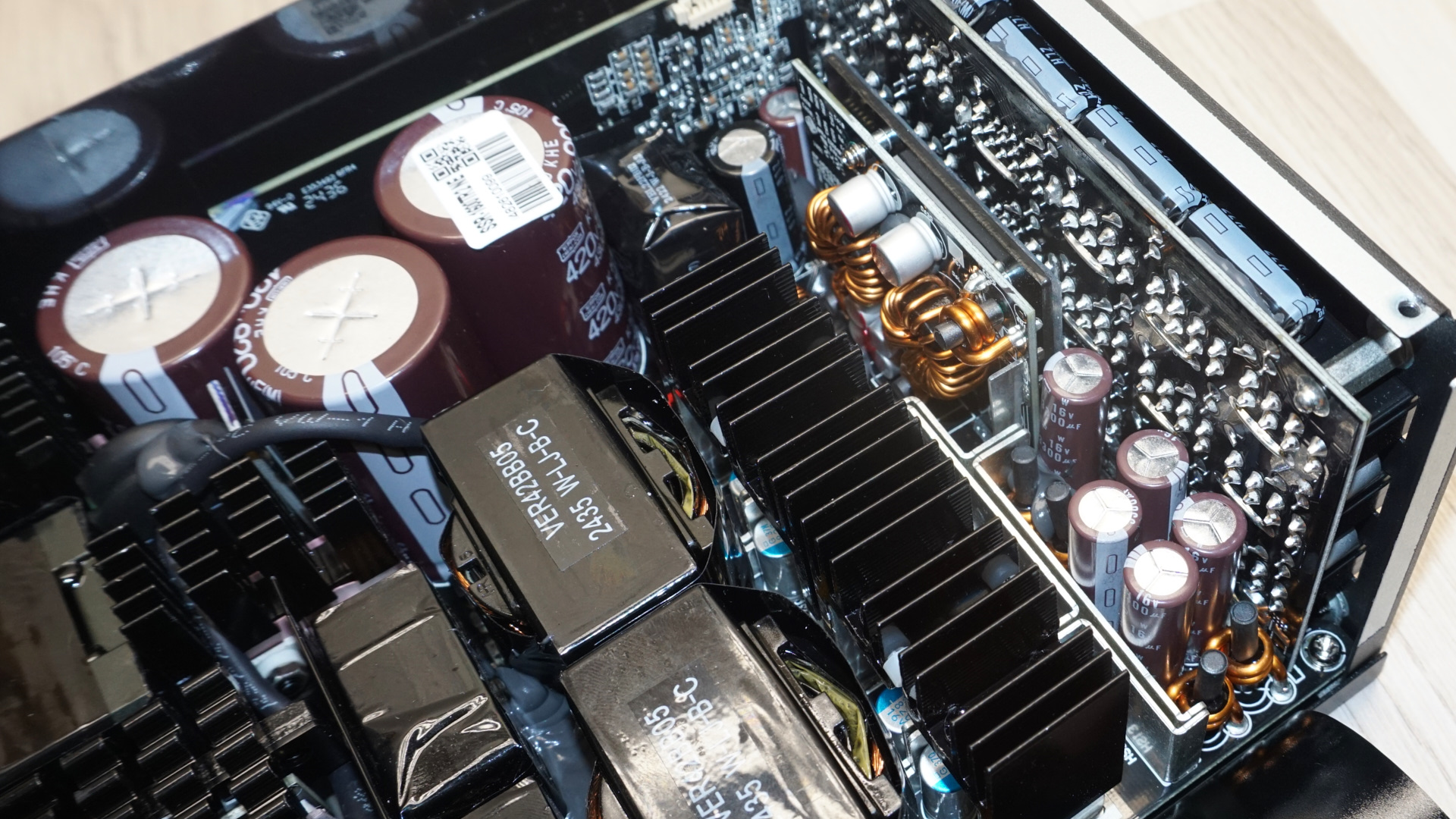

The PSU’s design is entirely in-house, reflecting Seasonic’s expertise in power supply development. Its internal layout is very dense, with heatsinks that would be massive for any PC PSU, let alone a design with such high efficiency. The input filtering stage is robust, featuring six Y capacitors, four X capacitors, and two oversized inductors for enhanced EMI suppression.

The Active Power Factor Correction (APFC) stage eliminates rectifying bridges in favor of four rectifier MOSFETs mounted on large heatsinks, improving efficiency. The APFC includes four additional MOSFETs, two diodes, and three enormous Nippon Chemi-Con 820μF capacitors, forming an interleaved topology for maximum reliability and efficiency.

The primary inversion stage utilizes four Infineon 60R080P7 MOSFETs in a full-bridge LLC topology. These are mounted on dedicated heatsinks just before the dual main transformers. On the secondary side, 16 MOSFETs split across two arrays generate the primary 12V rail. The DC-to-DC circuits sit on a vertical daughterboard parallel to the connector board and produce the 3.3V and 5V rails. All capacitors, including secondary-stage components, are supplied by Nippon Chemi-Con, currently one of the most reputable manufacturers. Despite the dense layout, the build quality is immaculate.

Cold Test Results

Cold Test Results (25°C Ambient)

For the testing of PSUs, we are using high precision electronic loads with a maximum power draw of 2700 Watts, a Rigol DS5042M 40 MHz oscilloscope, an Extech 380803 power analyzer, two high precision UNI-T UT-325 digital thermometers, an Extech HD600 SPL meter, a self-designed hotbox and various other bits and parts.

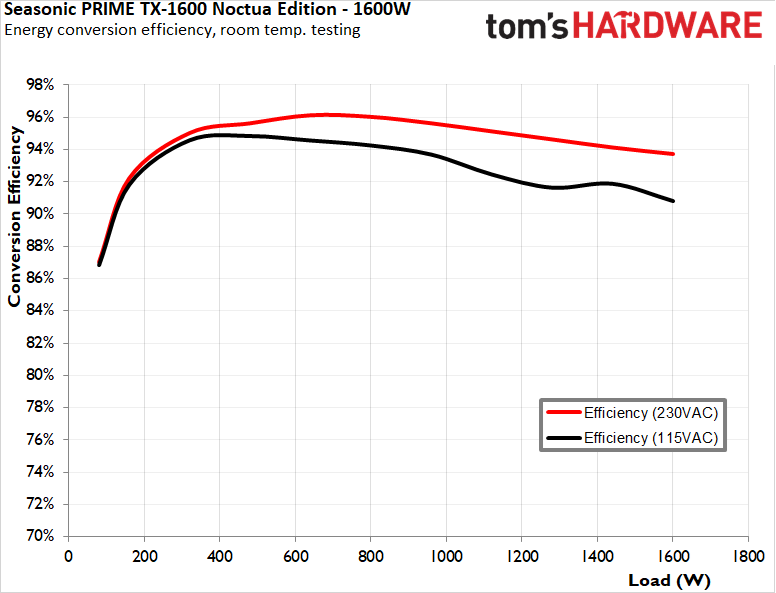

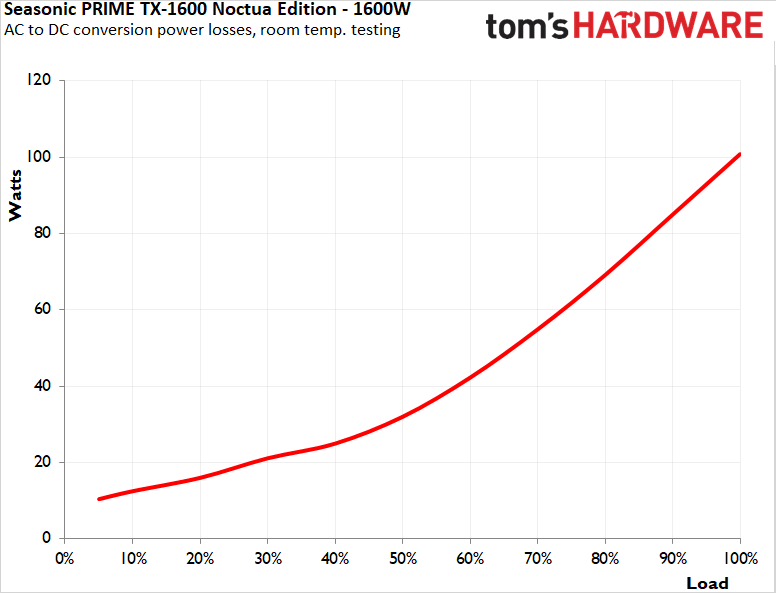

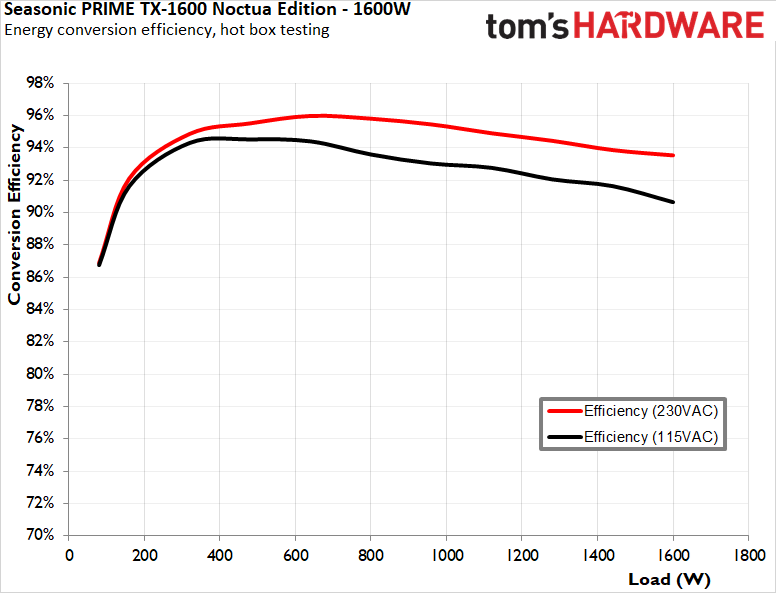

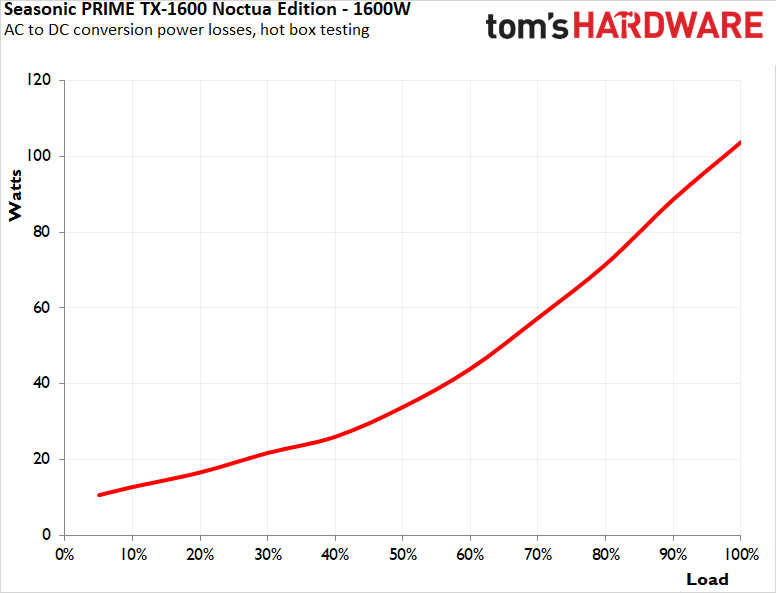

During cold testing, the Seasonic Prime TX-1600 Noctua Edition demonstrated spectacularly high efficiency, meeting both the 80Plus Titanium and Cybenetics Titanium certification requirements with a 115 VAC input, missing the 80Plus Titanium certification requirements with an input voltage of 230 VAC by a hair at maximum load. It achieved an average nominal load efficiency of 93.1% at 115 VAC and 94.8% at 230 VAC. The efficiency peaked at approximately 40% load and remained fairly stable across the entire nominal load range (10–100%). At very low loads, the PSU maintained excellent efficiency levels, surpassing typical standards for advanced PC PSUs.

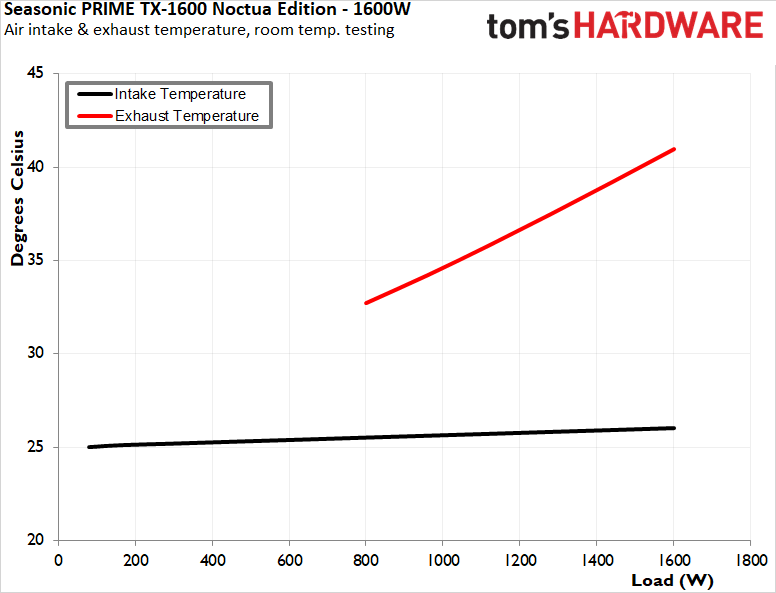

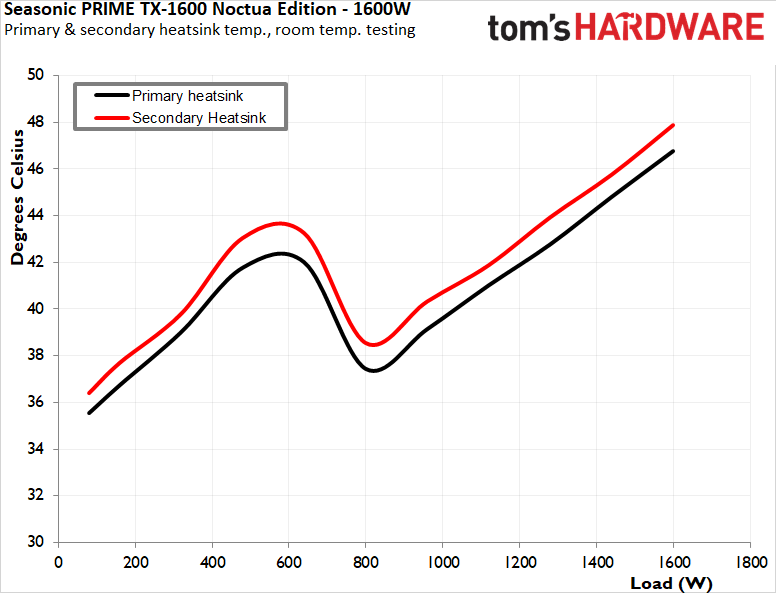

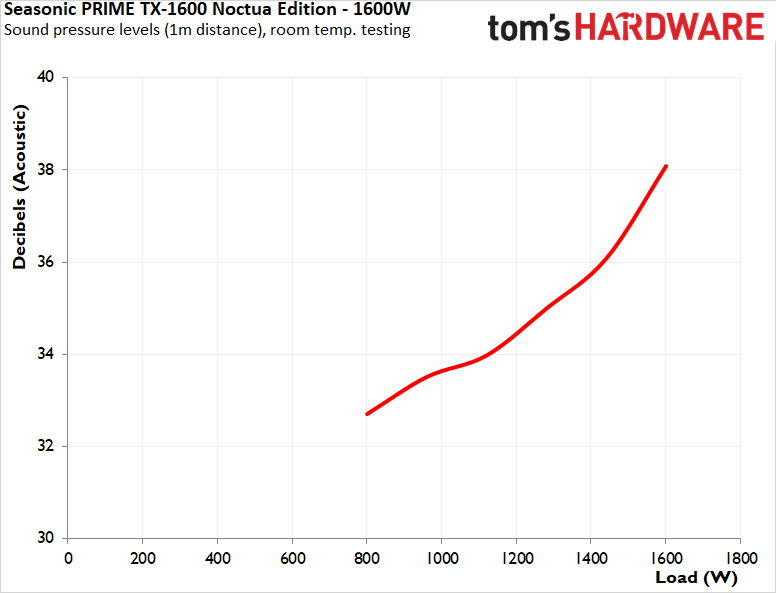

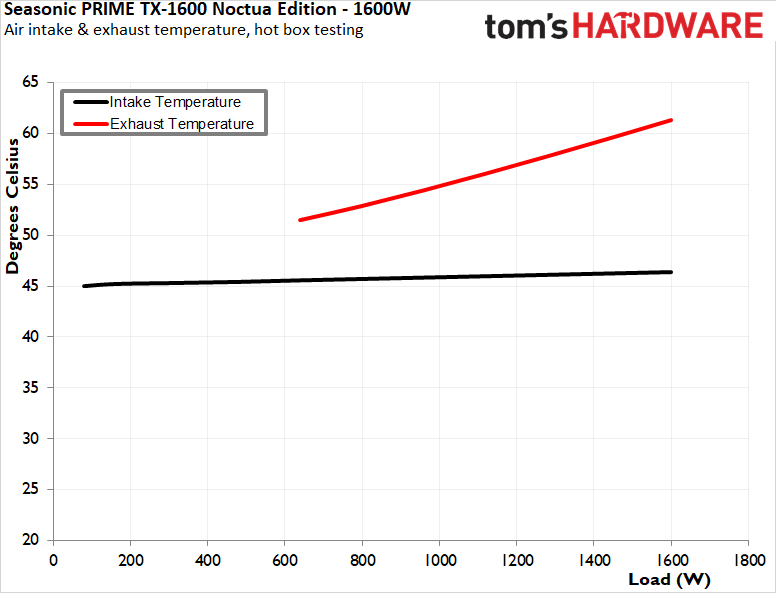

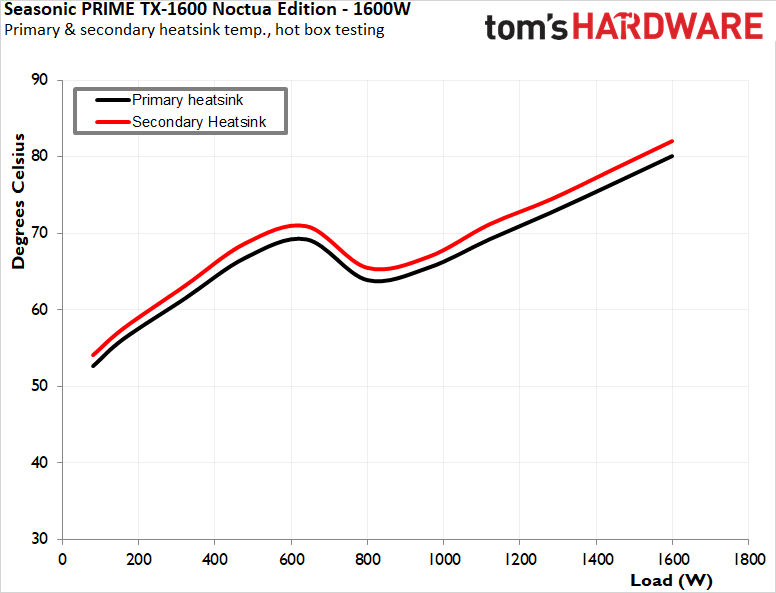

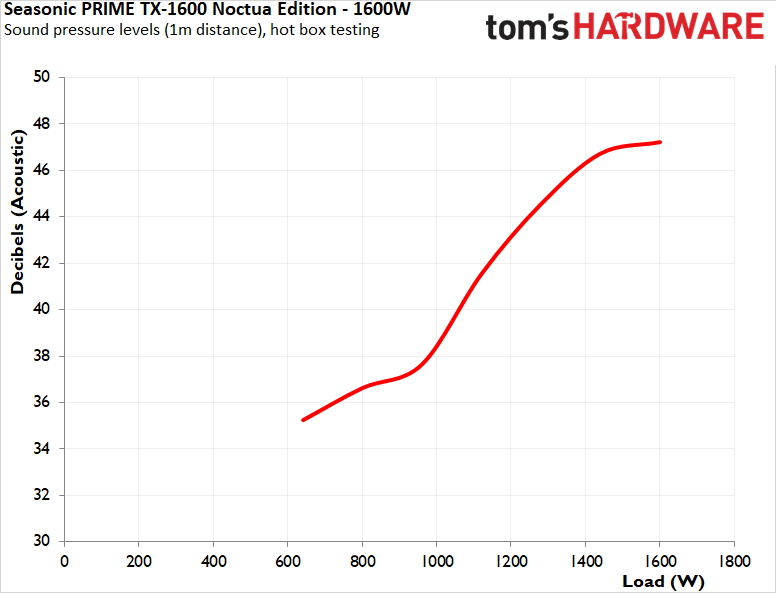

The Noctua NF-A12x25 HS-PWM fan remained inactive until the load exceeded 800 watts, allowing the PSU to operate passively under lower power conditions. When the fan did engage, it spun at low speeds and generated minimal noise. Even under maximum load, the fan did not go anywhere near its full rotational speed, maintaining fairly quiet operation. Thermal performance during cold testing was notable, with internal temperatures staying very low despite the PSU’s high power output. The effects of the designer’s choice of heatsinks and the inclusion of Noctua’s advanced fan are evident in the thermal and acoustic performance of this unit.

Hot Test Results

Hot Test Results (~45°C Ambient)

During hot testing, the Seasonic Prime TX-1600 Noctua Edition exhibited a marginal drop in efficiency compared to cold conditions. The unit achieved an average nominal load efficiency of 92.9% at 115 VAC and 94.6% at 230 VAC, a slight decrease of 0.2 percentage points from the cold testing results. This efficiency drop is minimal, with the PSU virtually unfazed by the >20 degree Celsius temperature increase.

The Noctua NF-A12x25 HS-PWM fan activated slightly earlier during hot testing, starting operation at approximately 650 watts compared to 800 watts in room temperature conditions. The fan speed increased progressively with load, but even then it never reached its maximum rotational speed. Acoustic performance was outstanding, with noise levels remaining controlled and within a tolerable range throughout testing. Thermal performance was exceptional, with operating temperatures remaining extremely low for a PSU with such a high power output. The internal components were kept well within safe limits, with no indication of thermal stress.

PSU Quality and Bottom Line

Power Supply Quality

The Seasonic Prime TX-1600 Noctua Edition exhibits otherworldly electrical stability and power quality, setting the standard for a top-tier PC PSU. The 12V rail maintains regulation at 0.4%, while the 5V and 3.3V rails hold at 0.8% and 0.7%, respectively. Ripple suppression is particularly noteworthy, with maximum ripple levels measured at 20 mV on the 12V rail, 14 mV on the 5V rail, and 14 mV on the 3.3V rail. These would be top-class results for any PC PSU, and the Prime TX-1600 achieves them while outputting 1600 watts with an ambient temperature above 45 degrees Celsius.

During our thorough assessment, we evaluate the essential protection features of every power supply unit we review, including Over Current Protection (OCP), Over Voltage Protection (OVP), Over Power Protection (OPP), and Short Circuit Protection (SCP). All protection mechanisms were activated and functioned correctly during testing. The OCP trigger thresholds are set at 128% for the 3.3V rail, 132% for the 5V rail, and 126% for the 12V rail. The OPP is set to engage at 128%, aligning with expectations for an ATX 3.1-compliant power supply.

Bottom Line

The Seasonic Prime TX-1600 Noctua Edition sets itself apart as a meticulously engineered power supply unit, showcasing a unique collaboration between Seasonic and Noctua. The design of the unit reflects this partnership, with a robust black chassis accented by Noctua’s signature brown, creating a distinctive aesthetic that some will love and some will hate. The inclusion of individually sleeved black-and-brown cables further complements the theme. Aesthetics are subjective, but we believe it will be a great visual match if other Noctua products are present in the system. Internally, the PSU features a densely packed layout with oversized heatsinks, premium Nippon Chemi-Con capacitors, and advanced circuit design, ensuring both durability and performance.

In terms of efficiency and electrical performance, the Prime TX-1600 Noctua Edition delivers industry-leading results. The unit meets 80Plus Titanium and Cybenetics Titanium certification requirements, achieving an average nominal load efficiency of 94.8% at 230 VAC during cold testing and maintaining an impressive 94.6% in hot conditions. At 115 VAC, it maintains similarly high efficiency, averaging 93.1% during cold testing and 92.9% in hot conditions. Voltage regulation is exceptional, with deviations of just 0.4% on the 12V rail and a maximum ripple of only 20 mV on that rail, setting a high standard for power quality.

The PSU’s thermal and acoustic performance is equally impressive. Some of that success can be attributed to Noctua’s NF-A12x25 HS-PWM fan and special grill design, but we should not forget about the massive heatsinks and top-tier components either. This advanced cooling design ensures minimal turbulence noise while effectively maintaining very low operating temperatures with no or minimal airflow, even under full load and elevated ambient conditions. When the fan does start, and it takes quite a load to make it start at all, it remains remarkably quiet even at higher loads, never reaching its maximum speed during testing.

At a retail price of $570, the Seasonic Prime TX-1600 Noctua Edition represents a significant investment, but it justifies this cost by setting performance benchmarks in nearly every category. Its combination of cutting-edge design, unparalleled efficiency, superior electrical stability, and remarkable thermal and acoustic characteristics makes it a standout option for users who demand uncompromising quality. For professionals and enthusiasts building systems with extreme power requirements, this PSU is a definitive choice, offering a balance of innovation, performance, and reliability that is difficult to match.


Yesterday, Microsoft unveiled WHAMM, a generative AI model for real-time gaming, as demonstrated in its demo starring the 28-year-old classic Quake II. The interactive demo responds to user inputs via controller or keyboard, though the frame rate barely hangs in the low to mid-teens. Before you grab your pitchforks, Microsoft emphasizes that the focus should be on analyzing the model's quirks and not judging it as a gaming experience.

WHAMM, which stands for World and Human Action MaskGIT Model, is an update to the original WHAM-1.6B model launched in February. It serves as a real-time playable extension with faster visual output. WHAM uses an autoregressive model where each token is predicted sequentially, much like LLMs. To make the experience real-time and seamless, Microsoft transitioned to a MaskGIT-style setup where all tokens for the image can be generated in parallel, decreasing dependency and the number of forward passes required.
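To see why parallel decoding cuts the number of forward passes, consider the toy sketch below. It is not Microsoft's implementation: the "model" is a random stand-in, and the token count, step count, and vocabulary size are hypothetical. It only illustrates the general MaskGIT idea of predicting every masked token at once, keeping the most confident guesses, and re-masking the rest on a cosine schedule.

```python
import math
import random


def autoregressive_passes(num_tokens):
    """Sequential decoding needs one forward pass per generated token."""
    return num_tokens


def maskgit_decode(num_tokens, steps, seed=0):
    """Toy MaskGIT-style decoding; returns (tokens, forward_passes)."""
    rng = random.Random(seed)
    tokens = [None] * num_tokens  # None marks a masked position
    passes = 0
    for step in range(1, steps + 1):
        masked = [i for i, tok in enumerate(tokens) if tok is None]
        if not masked:
            break
        passes += 1
        # Stand-in for one forward pass: a (token, confidence) guess per masked slot.
        guesses = {i: (rng.randrange(1024), rng.random()) for i in masked}
        # Cosine schedule: how many tokens should still be masked after this step.
        keep_masked = math.floor(num_tokens * math.cos(math.pi / 2 * step / steps))
        to_fill = max(1, len(masked) - keep_masked)
        # Commit only the most confident guesses; the rest stay masked.
        ranked = sorted(masked, key=lambda i: guesses[i][1], reverse=True)
        for i in ranked[:to_fill]:
            tokens[i] = guesses[i][0]
    return tokens, passes


tokens, passes = maskgit_decode(num_tokens=256, steps=8)
print(autoregressive_passes(256), "passes sequentially vs", passes, "in parallel")
```

With these made-up settings, the sequential approach needs 256 passes for one image while the parallel one finishes in 8, which is the kind of reduction that makes real-time output plausible.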

WHAMM was trained on just over a week of Quake II data, a dramatic reduction from the seven years of data required for WHAM-1.6B. Likewise, the resolution has been bumped up from a pixel-like 300 x 180 to a slightly less pixel-like 640 x 360. You can try out the demo yourself at Copilot Labs.
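In terms of raw pixel count, the resolution bump is larger than it sounds. A quick check, using only the two resolutions quoted above:

```python
def pixel_count(width, height):
    """Total number of pixels in a frame."""
    return width * height


old_pixels = pixel_count(300, 180)  # WHAM-1.6B output
new_pixels = pixel_count(640, 360)  # WHAMM output

print(old_pixels, new_pixels, f"{new_pixels / old_pixels:.2f}x")  # 54000 230400 4.27x
```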

The model's ability to keep track of the existing environment, apart from the occasional graphical anomaly, while simultaneously adapting to user inputs, is impressive, despite the atrociously bad input lag. You can move, jump, crouch, look around, and even shoot enemies, but ultimately, it's no more than a fancy showcase and can never substitute for the original experience.

As expected, the model isn't perfect. Enemy interactions are described as fuzzy, the context length is limited, the game incorrectly stores vital stats like health and damage, and it is confined to a single level.

This announcement follows OpenAI's latest Ghibli trend, which has garnered a lot of negative attention. While I'm no artist, there's a certain human element to every piece of creative work that AI cannot truly recreate. Yet, at AI's current rate of development, fully AI-generated games and movies could be a reality within the next few years.

The sweet spot lies in AI enhancing, not replacing, creative works, like Nvidia's ACE technology, which can power lifelike NPCs. Parts of this technology are already integrated into the life simulation game inZOI. From a technological point of view, WHAMM still represents a step up from previous attempts, which were often chaotic, incoherent, and teeming with hallucinations.

OctoPrint is software that allows you to control and monitor your 3D printer remotely. You can start and stop prints, adjust 3D printing settings, and even view the live progress of your 3D prints via a camera. The software supports various plugins, each designed to address specific needs, allowing you to tailor it to your requirements. If you own one of the best 3D printers and want to create timelapses, you must install Octolapse.

Some plugins can help you organize print files on OctoPrint and automate routine tasks like bed leveling or filament changes. Others focus on analytics and reporting to offer insights on print time by analyzing the G-code as well as the performance of the 3D printer. We look at the best five plugins for OctoPrint. But before that, let’s look at how to access and install the plugins in OctoPrint.

How to Access and Install Plugins in OctoPrint

Access and Install OctoPrint Plugins

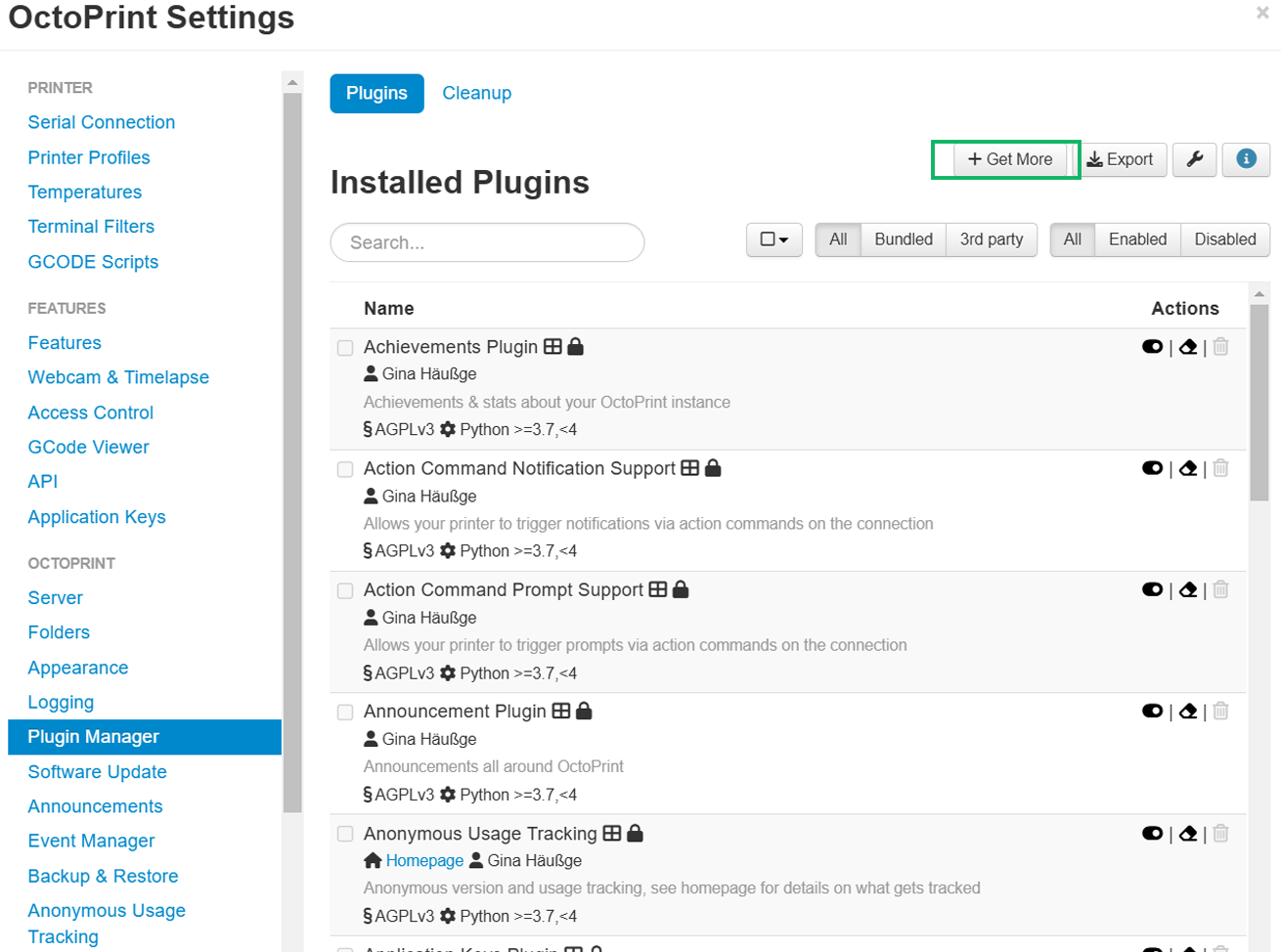

Accessing and installing plugins on OctoPrint is straightforward if you have already managed to set up the software. Follow the steps below.

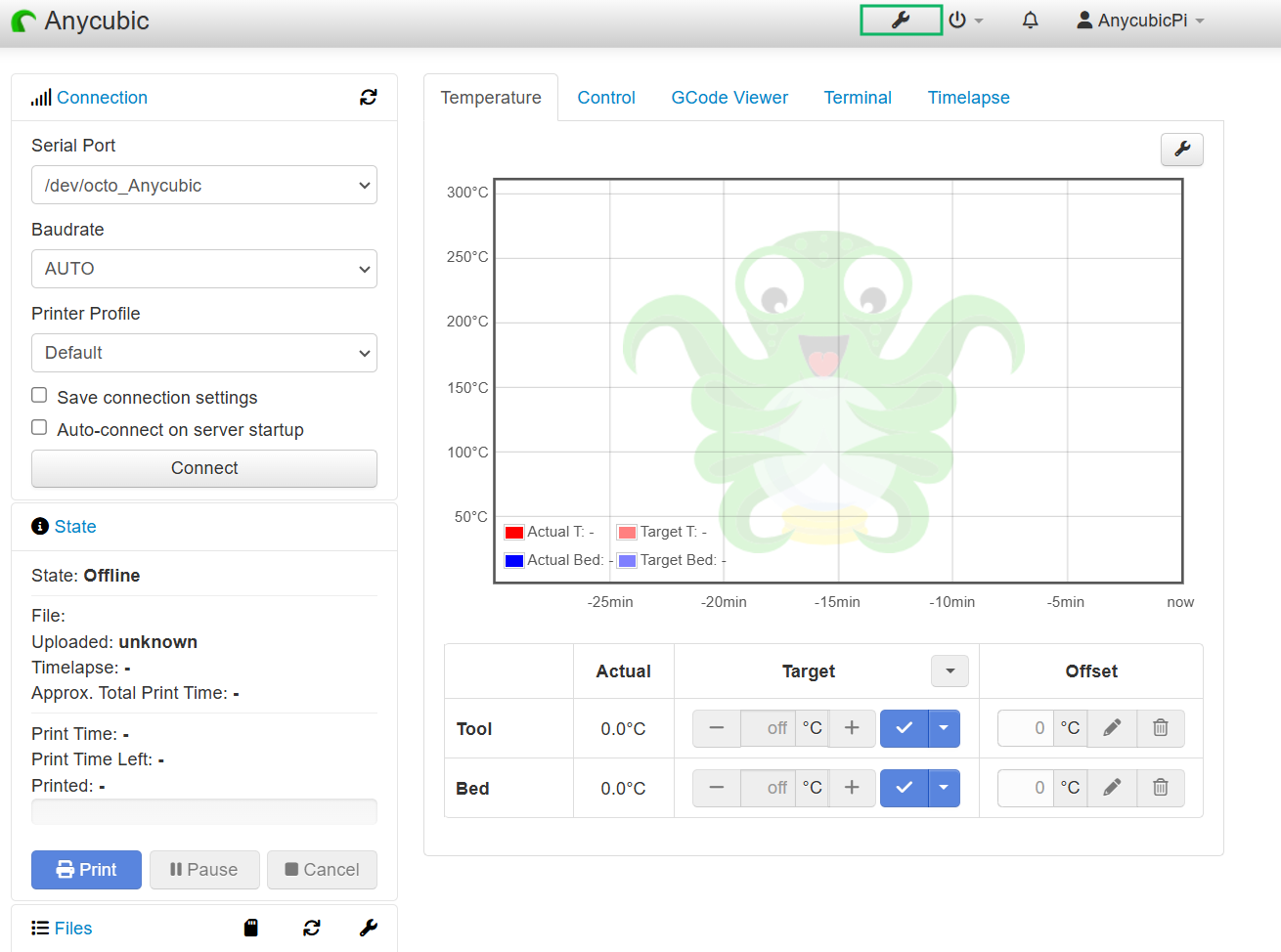

1. Log in to the OctoPrint interface in your browser.

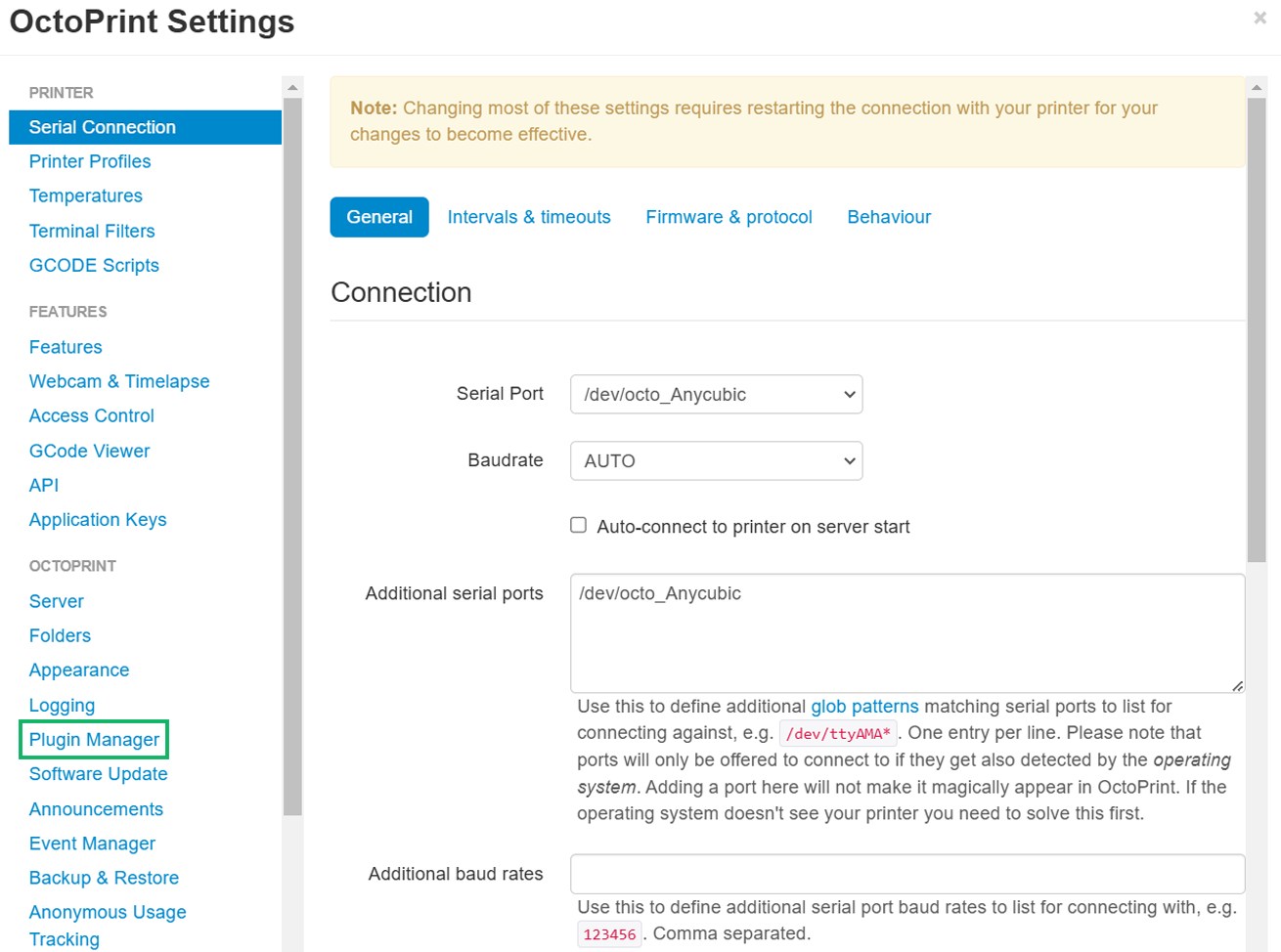

2. Click the wrench icon on the top-right corner to access the settings menu.

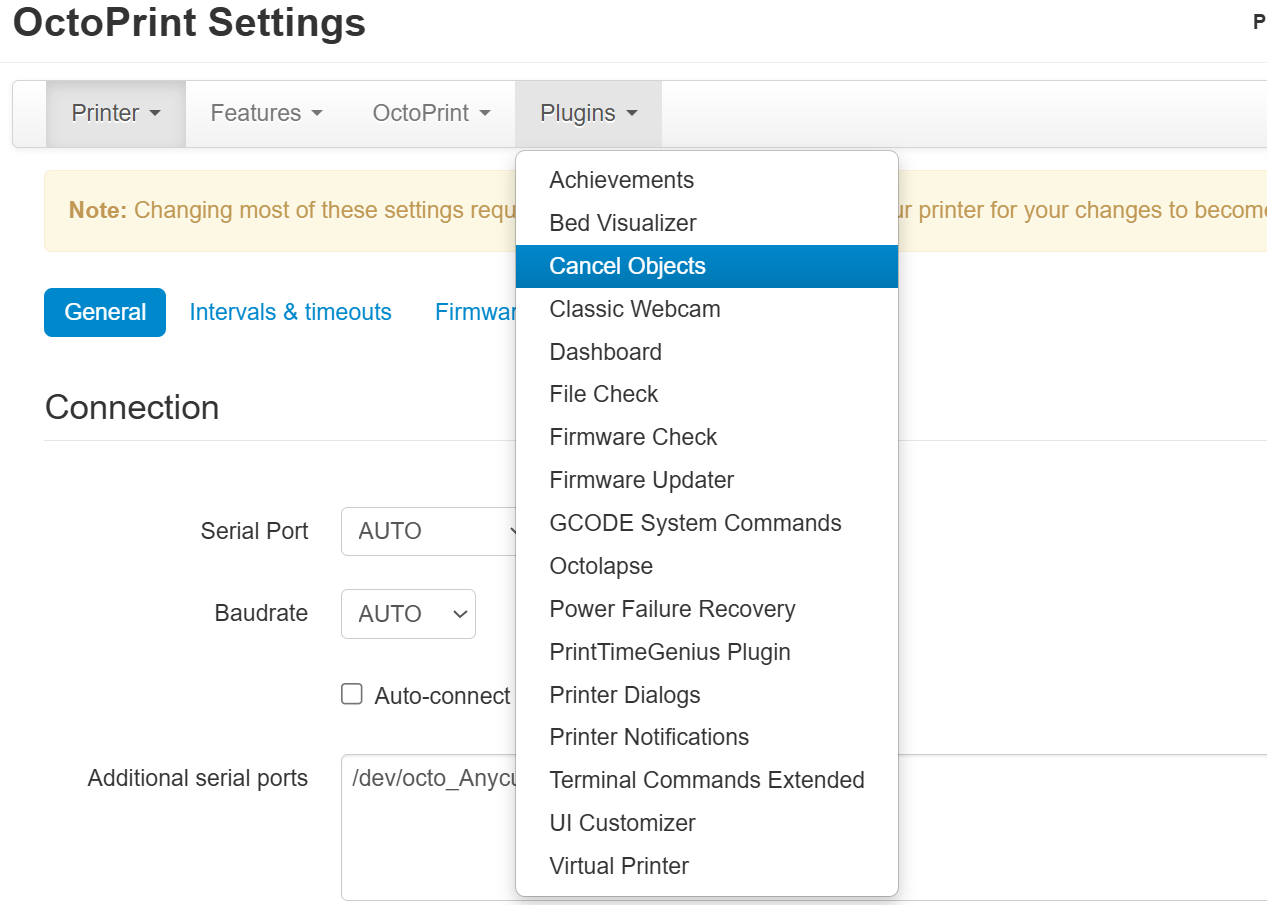

3. Locate and click Plugin Manager under the OCTOPRINT section. This enables you to search, install, and manage plugins.

You will be able to see the plugins already installed. To look for more, click Get More.

Best OctoPrint Plugins

Let’s now have a look at the best OctoPrint plugins to install.

Octolapse

1. Octolapse (Plugin for Creating 3D Printing Timelapses)

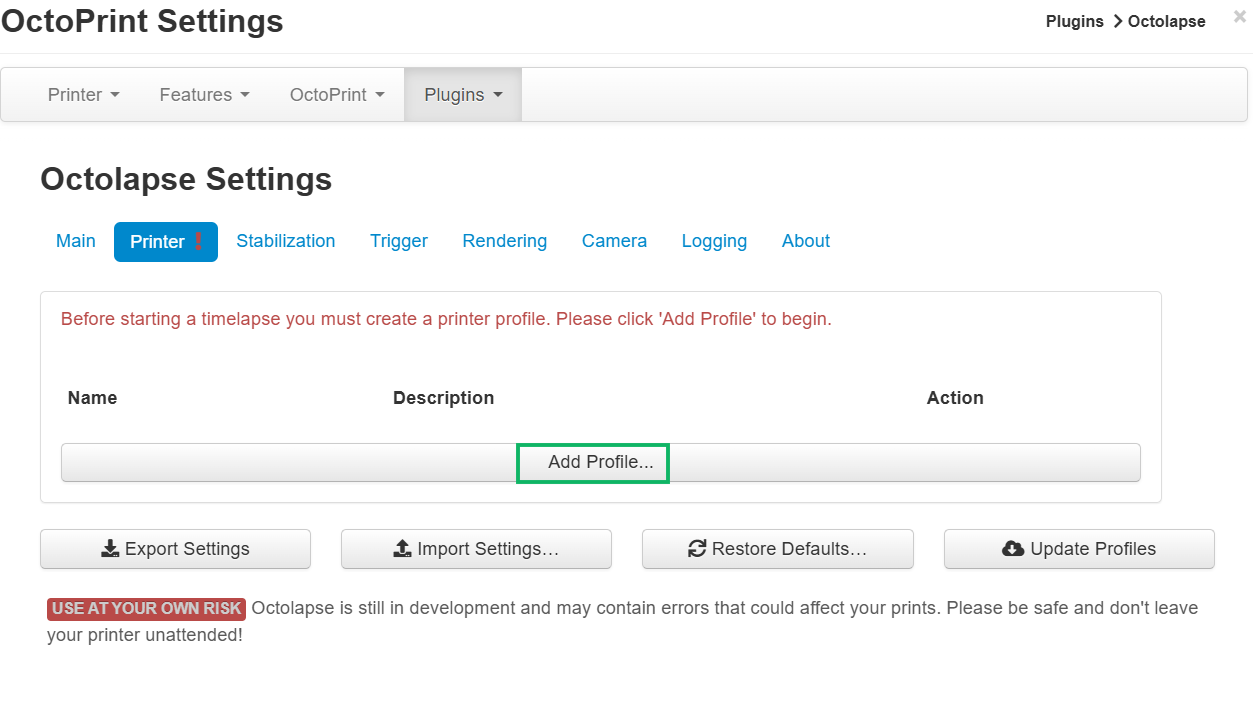

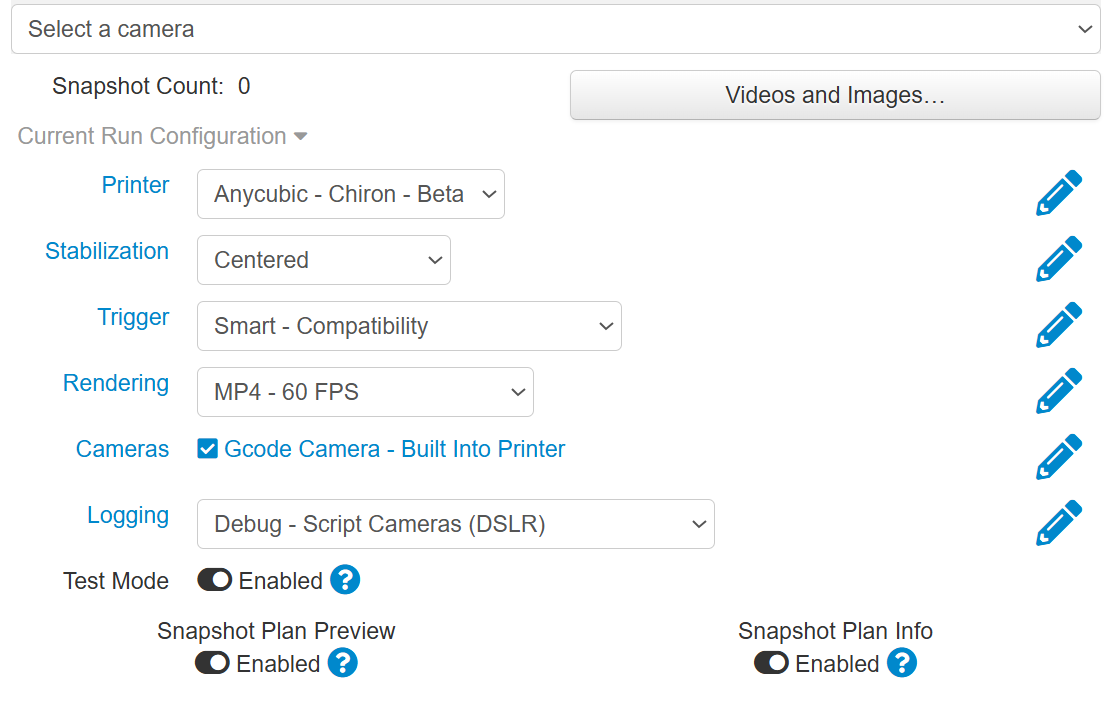

Octolapse is the first plugin on our list. If you have ever come across short, interesting 3D printing time-lapses where the print looks like it’s growing steadily from the print bed, this is the plugin that can be used to create them. It captures snapshots at various stages of the printing process and synchronizes the camera's movement with the print head. Octolapse is completely free, and all you need to have is a Raspberry Pi and a camera, which can be a webcam, Pi camera, or DSLR. Follow the steps below to install and use it.

1. Search for Octolapse on the search bar in the settings section, then install it.

2. Restart OctoPrint, and then add your 3D printer profile using the Add Profile section. Then, connect it to OctoPrint using a USB cable.

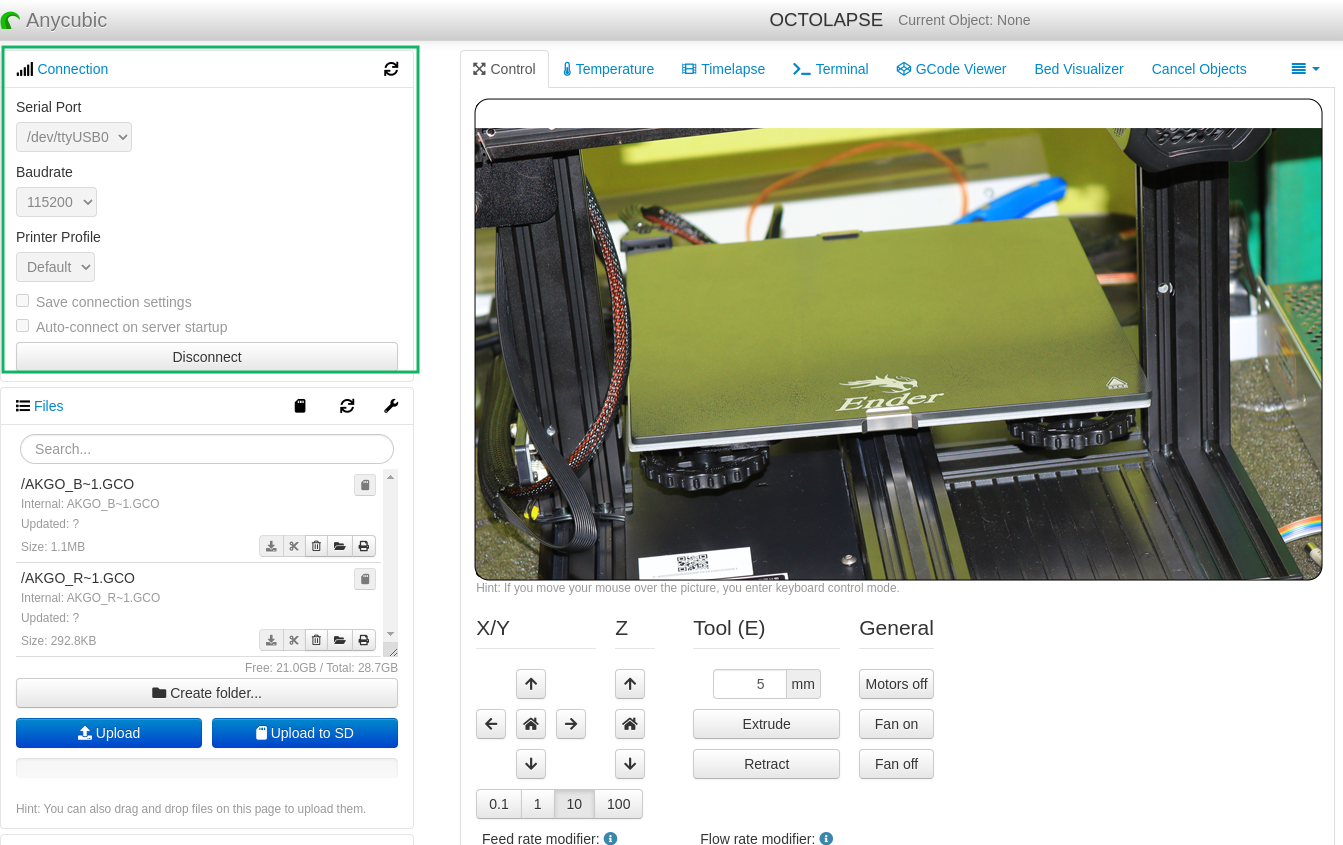

3. Start the 3D printer connection by clicking on Connect on the left section of the interface.

You can then go ahead and set up your camera settings.

When you finish, start your 3D print and you will see the snapshots of your timelapses in the videos and images section. You can choose to download or delete them from there.

Bed Visualizer

2. Bed Visualizer (Bed Adjustment Plugin)

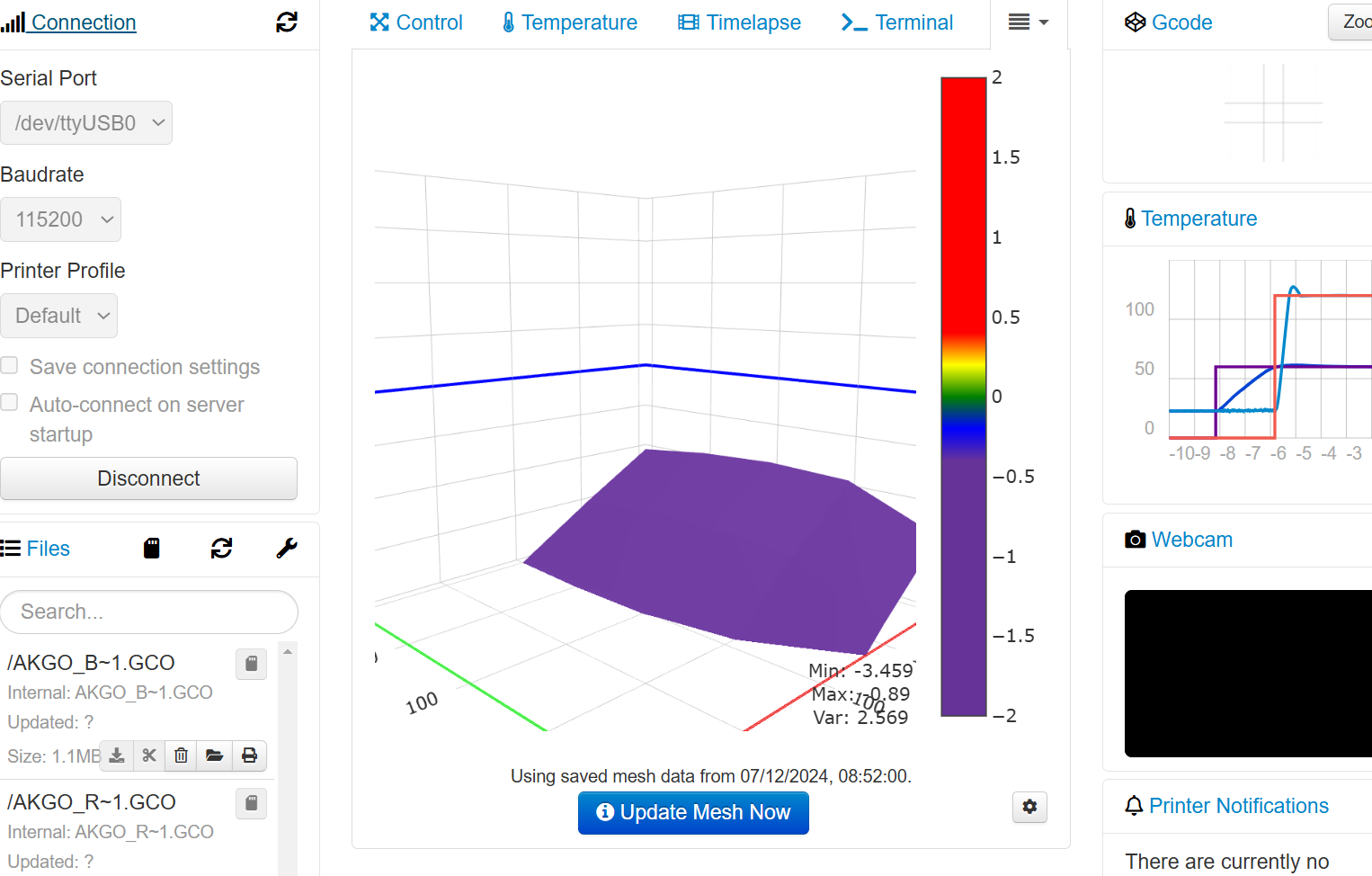

Bed leveling is an important and difficult process, especially if your 3D printer doesn’t have automatic bed leveling features. Even auto-bed leveling features might not be accurate sometimes, and your 3D prints can fail if part of the bed is not well-leveled. The Bed Visualizer plugin is a great tool to help you properly level your bed. It integrates with the 3D printer firmware to gather the details of the bed and then generates a 3D mesh visualization.

The visualization highlights the high and low points across the bed, plotting height (Z) against position (X and Y). This points out the areas that can cause issues so that you can make the necessary adjustments. To set up the plugin, your 3D printer must be running compatible firmware like Marlin, PrusaFirmware, Klipper, or Smoothieware.

Follow the steps below to learn how to use it.

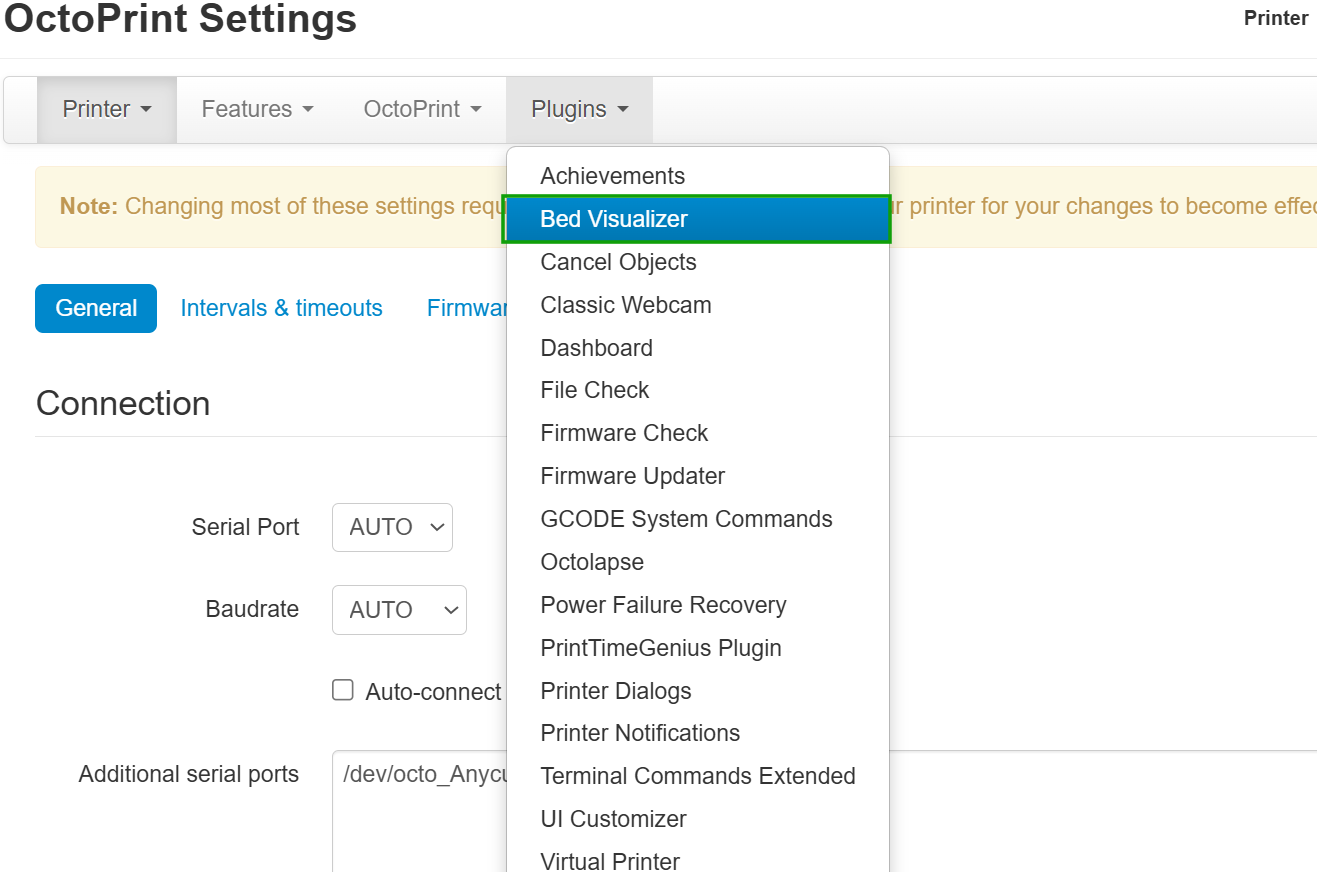

1. Go to the settings section, then Plugins > Bed Visualizer. If you don’t see it, go to Get More, then search for it there.

Sometimes, it might fail to install due to missing system dependencies. If that happens, you will need to SSH to your Raspberry Pi, then run the command sudo apt install libatlas3-base so that the plugin can load.

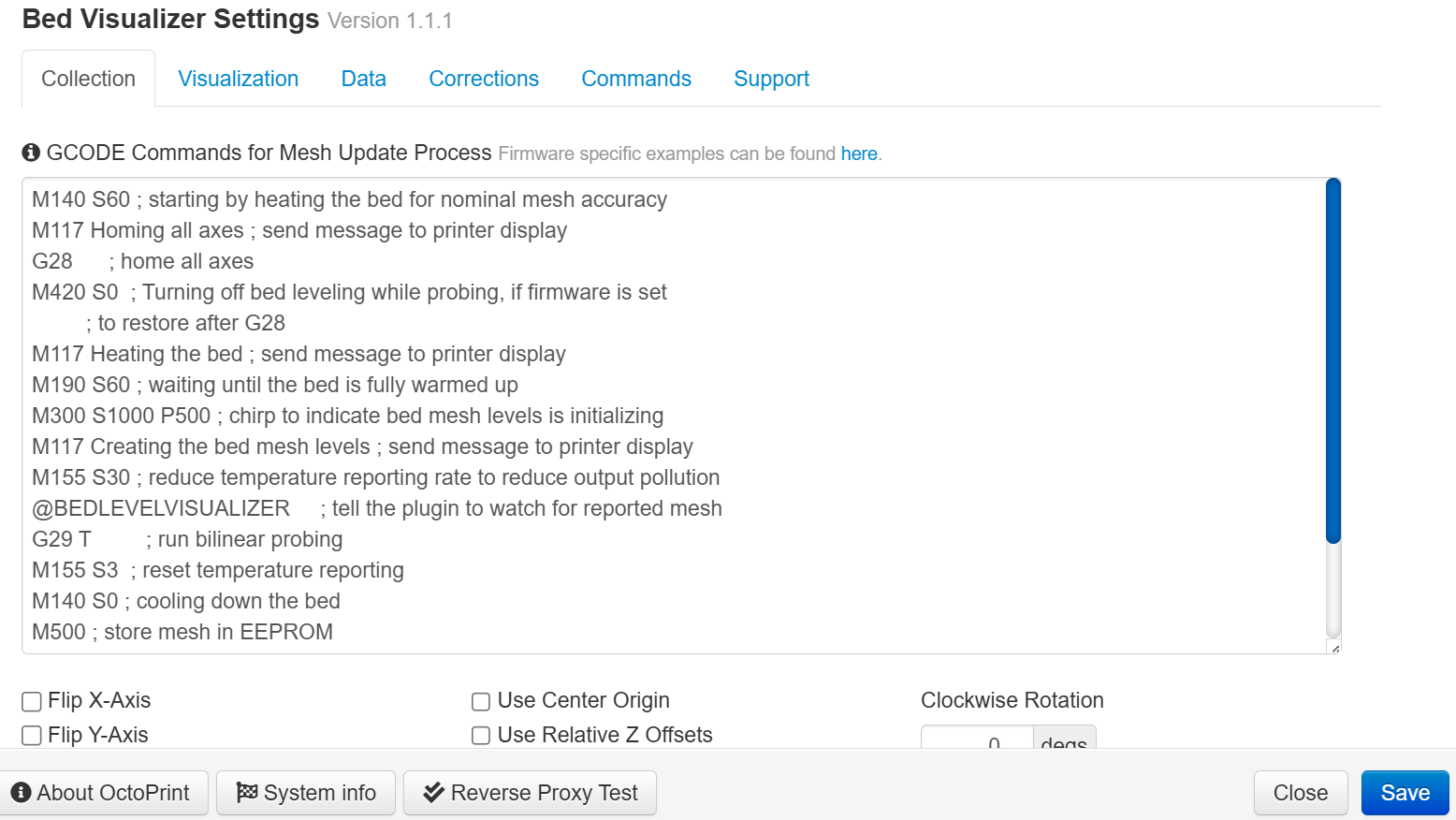

2. Click on the plugin. You will need to set the G-code commands for it to work. You can find firmware-specific examples on GitHub and enter them in the G-code section.

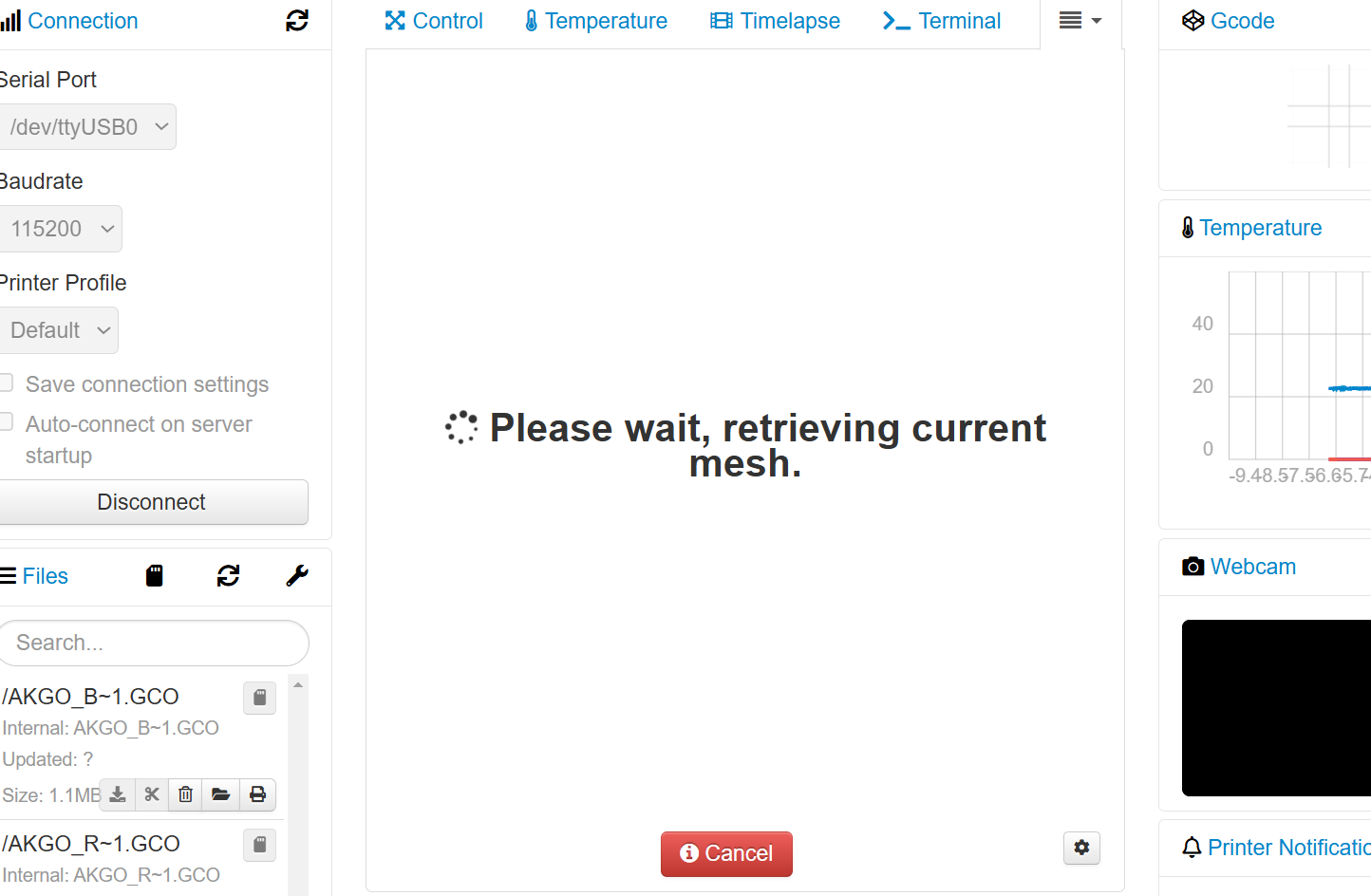

3. When you enter the example, save, and update the Mesh, you will see your 3D printer moving as the plugin retrieves the current mesh, as shown below.

You will see a visualization of your bed when it finishes, as shown below.

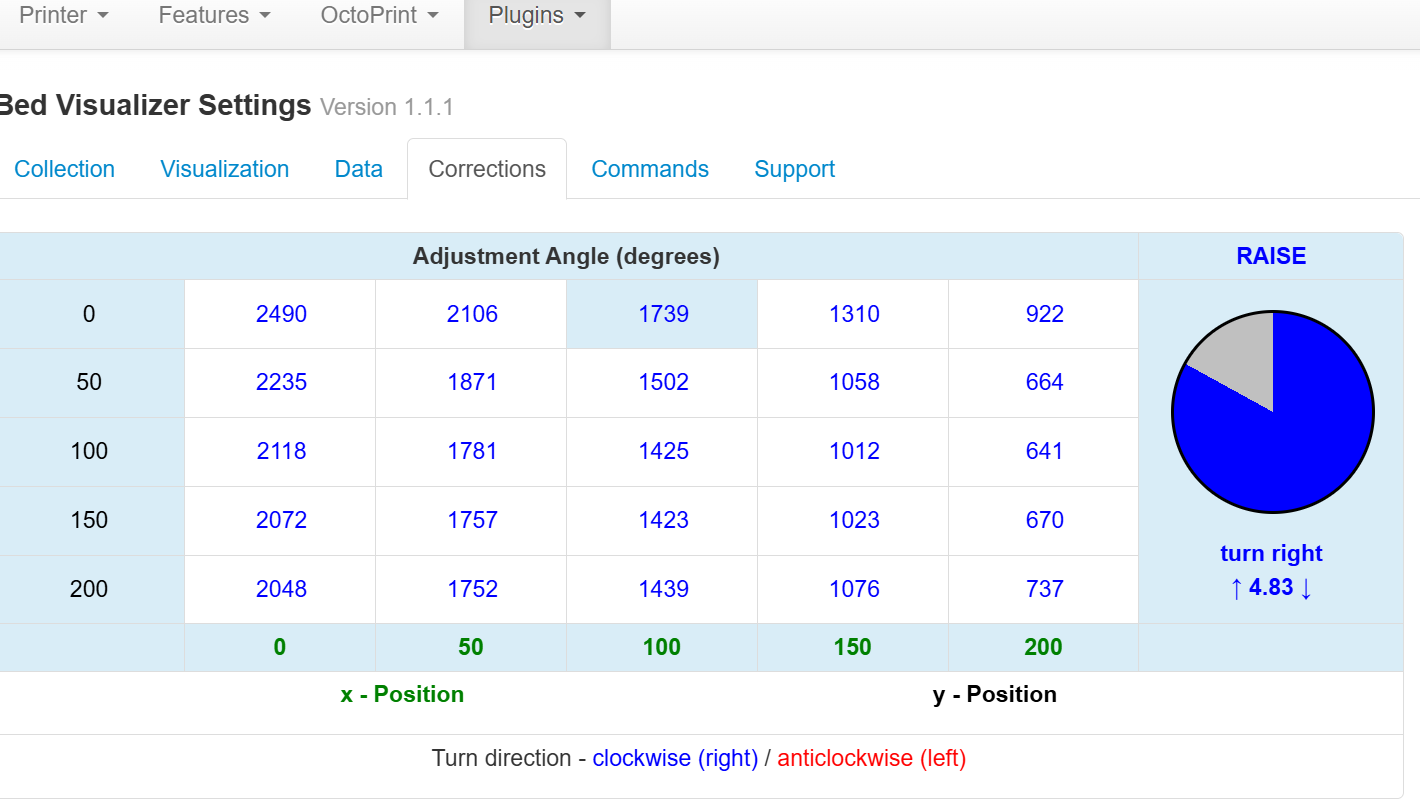

4. Click the settings icon on the right to get more details on your 3D printer bed. When you go to the Corrections section and hover your mouse over the numbers, you will see how much the points need to be adjusted in relation to the point that you click.
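The correction values come down to simple arithmetic on the mesh. Here is a minimal Python sketch of that idea, not the plugin's own code: the `mesh` values are made-up sample heights in millimetres, and `corrections_relative_to` and `extremes` are hypothetical helpers that report how far each probed point sits above or below a chosen reference point.

```python
from typing import List, Tuple

# Hypothetical 3x3 bed mesh: probed Z heights in millimetres (sample values only).
mesh: List[List[float]] = [
    [0.10, 0.05, -0.02],
    [0.04, 0.00, -0.05],
    [-0.03, -0.06, -0.12],
]


def corrections_relative_to(mesh: List[List[float]], row: int, col: int) -> List[List[float]]:
    """Return each point's height minus the height of the reference point."""
    reference = mesh[row][col]
    return [[round(z - reference, 3) for z in line] for line in mesh]


def extremes(mesh: List[List[float]]) -> Tuple[float, float]:
    """Return the (lowest, highest) probed heights across the whole bed."""
    flat = [z for line in mesh for z in line]
    return min(flat), max(flat)


# Use the front-left corner (row 0, column 0) as the reference point.
for line in corrections_relative_to(mesh, 0, 0):
    print(line)

low, high = extremes(mesh)
print(f"Total variation: {round(high - low, 3)} mm")
```

A positive value means the point sits higher than the reference, and a negative value means it sits lower. That is the kind of information you need when deciding which corner to adjust.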

3. PrintTimeGenius (Time Estimate Plugin)

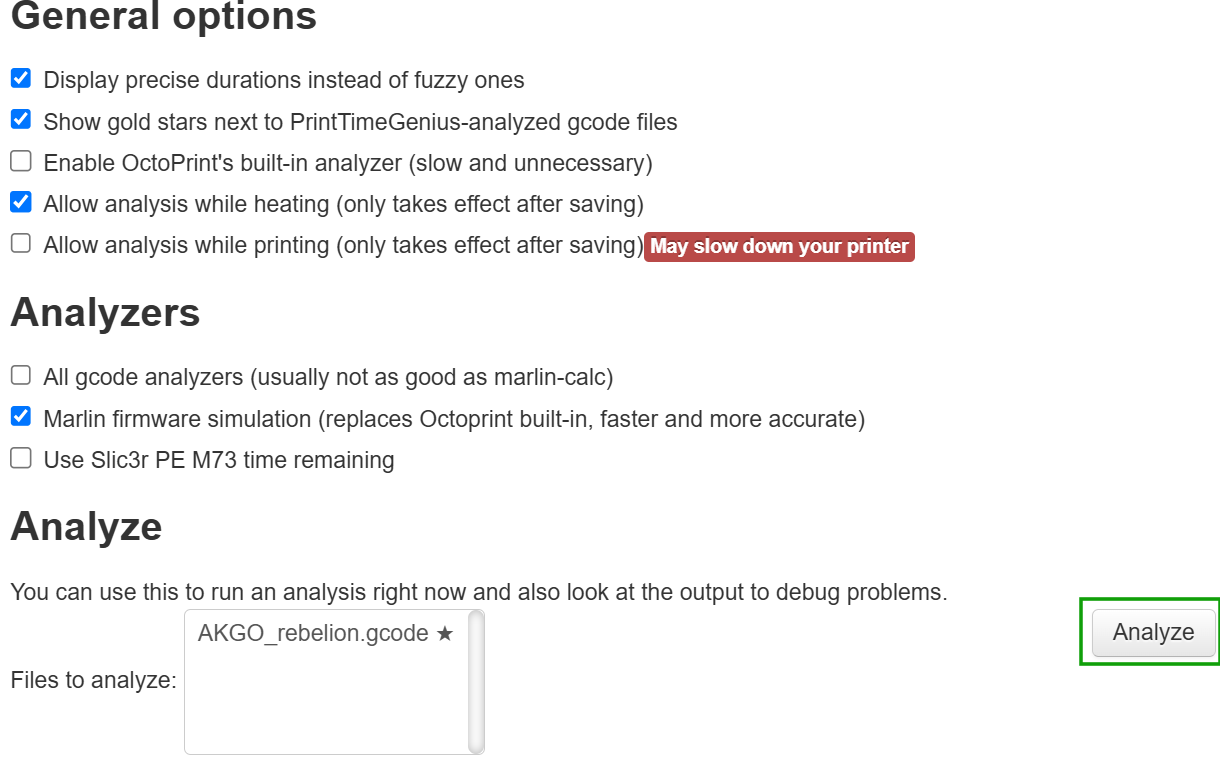

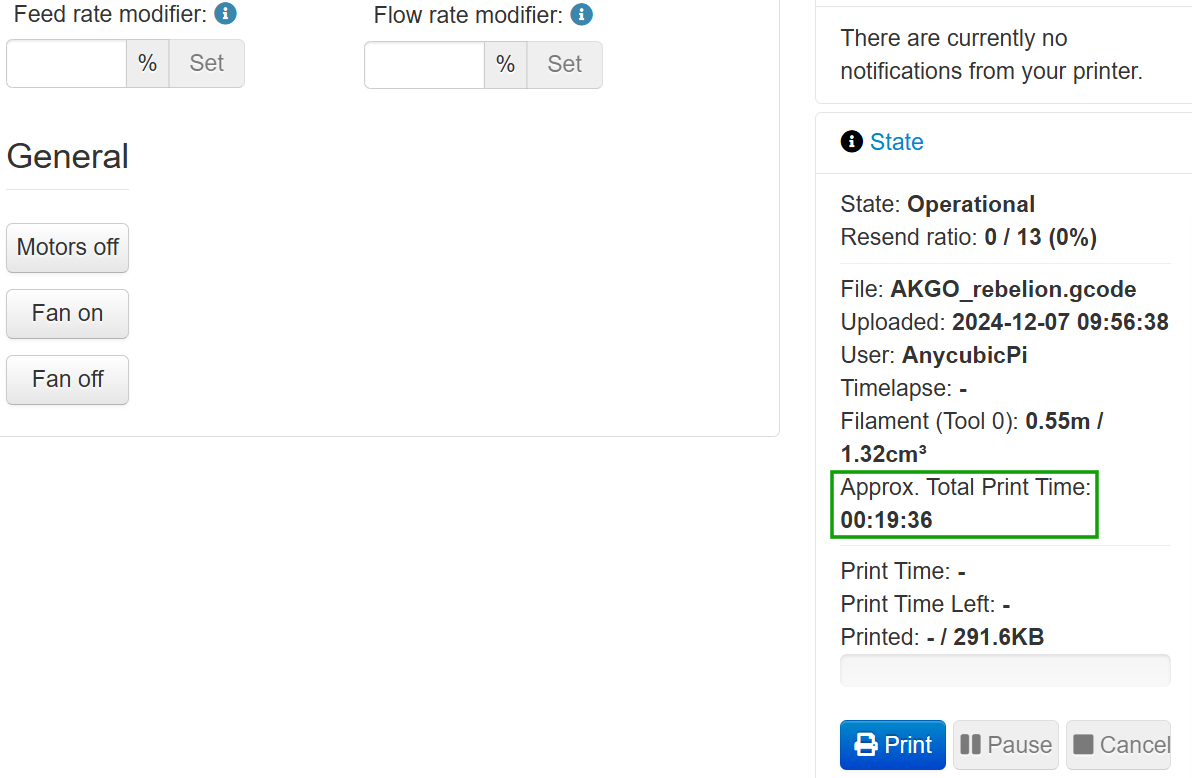

As the name suggests, PrintTimeGenius helps estimate the time it takes to 3D print your file. It analyzes the actual G-code instead of relying on the predictions of the 3D printer, which are not perfect. The good thing about this plugin is that it learns over time by comparing actual print durations with its predictions, which improves the accuracy after each print. You can find it already installed in the plugins section. Follow the steps below to use it.

1. Adjust the settings accordingly after clicking on it. The default settings work well for most people. Click Save.

2. Upload a G-code to OctoPrint, then click the load and print icon just above the upload option.

3. Go to the plugins section, click PrintTimeGenius, then select the G-code and click Analyze.
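The idea of learning from past prints can be illustrated with a simple correction factor. This is only a sketch of the general approach, not PrintTimeGenius's actual algorithm: the `history` numbers are invented, and `correction_factor` and `corrected_estimate` are hypothetical helpers.

```python
from typing import List, Tuple

# Hypothetical history of (estimated_seconds, actual_seconds) for finished prints.
history: List[Tuple[float, float]] = [
    (3600.0, 4000.0),
    (7200.0, 7900.0),
    (1800.0, 2100.0),
]


def correction_factor(history: List[Tuple[float, float]]) -> float:
    """Ratio of total actual time to total estimated time (1.0 with no history)."""
    total_estimated = sum(est for est, _ in history)
    total_actual = sum(act for _, act in history)
    if total_estimated == 0:
        return 1.0
    return total_actual / total_estimated


def corrected_estimate(raw_estimate: float, history: List[Tuple[float, float]]) -> float:
    """Scale a new raw estimate by how far off earlier estimates were."""
    return raw_estimate * correction_factor(history)


new_raw = 5400.0  # raw estimate for the next print, in seconds
print(f"Corrected estimate: {corrected_estimate(new_raw, history):.0f} s")
```

Each finished print adds another pair to the history, so the factor moves closer to how your particular printer actually behaves.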

4. Cancel Objects (Cancel Models on an Individual Basis)

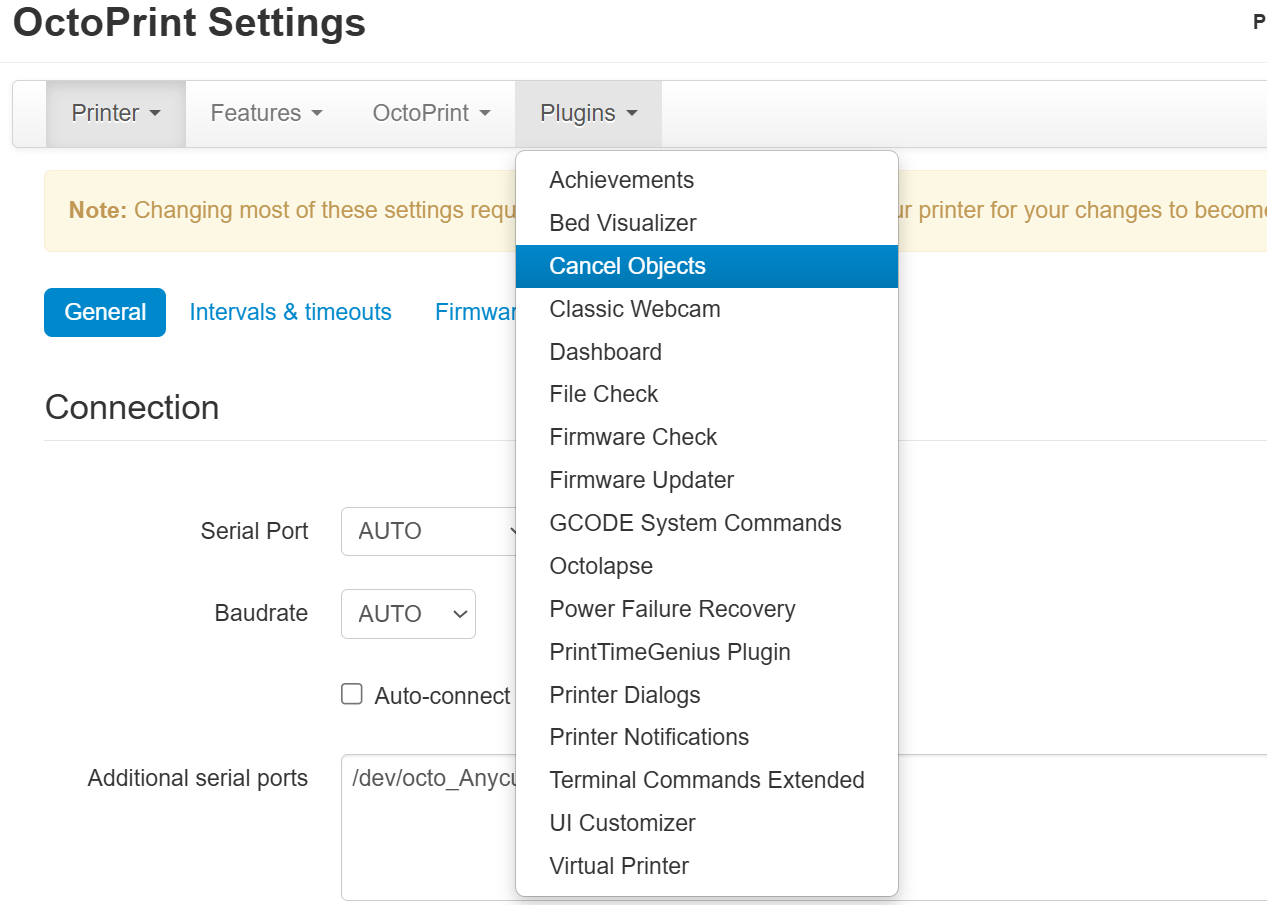

When 3D printing multiple objects at once, if something goes wrong and you would like to cancel one of the objects, it can be hard to do so without disrupting the others. Cancel Objects makes it easier to cancel specific objects mid-print without restarting the entire print job. This is helpful as it prevents the failed objects from interfering with the other objects, saving time and material. To use it, follow the steps below.

1. Go to the plugins section and click Cancel Objects. If it’s not there already, go to Get More and search for it there. You can also get it on GitHub.

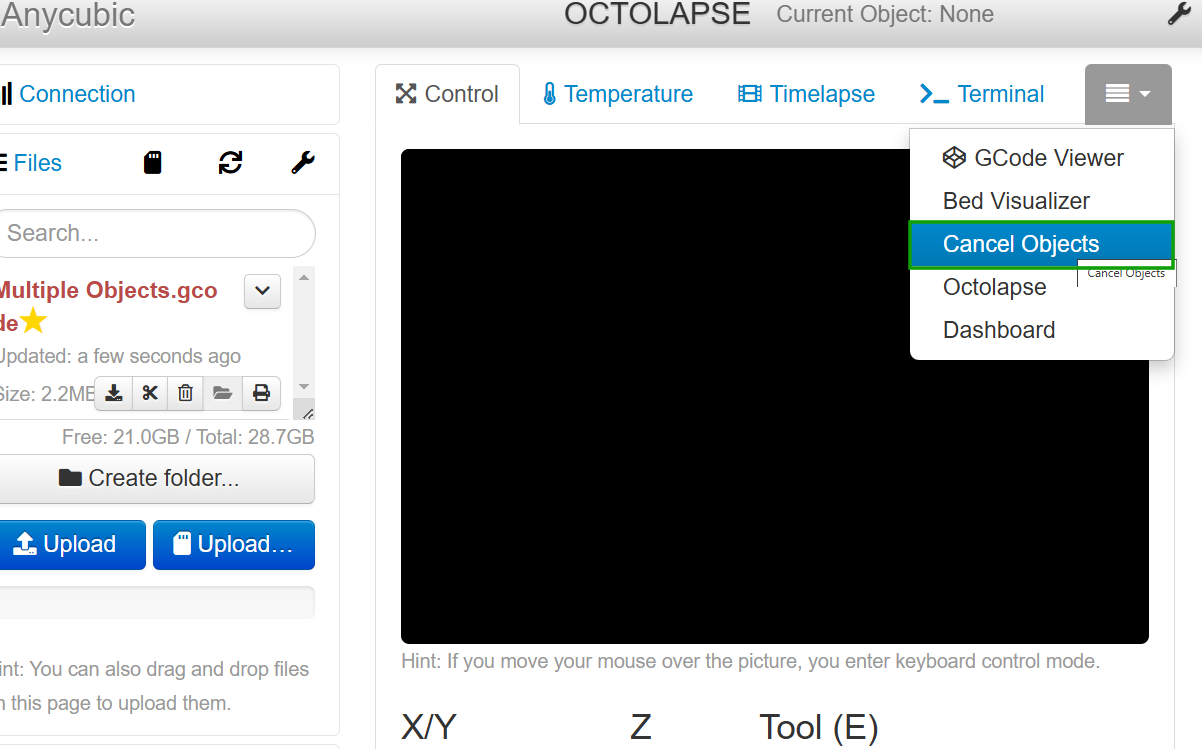

2. Upload multiple objects, then select the Cancel Objects option from the drop-down menu near Terminal.

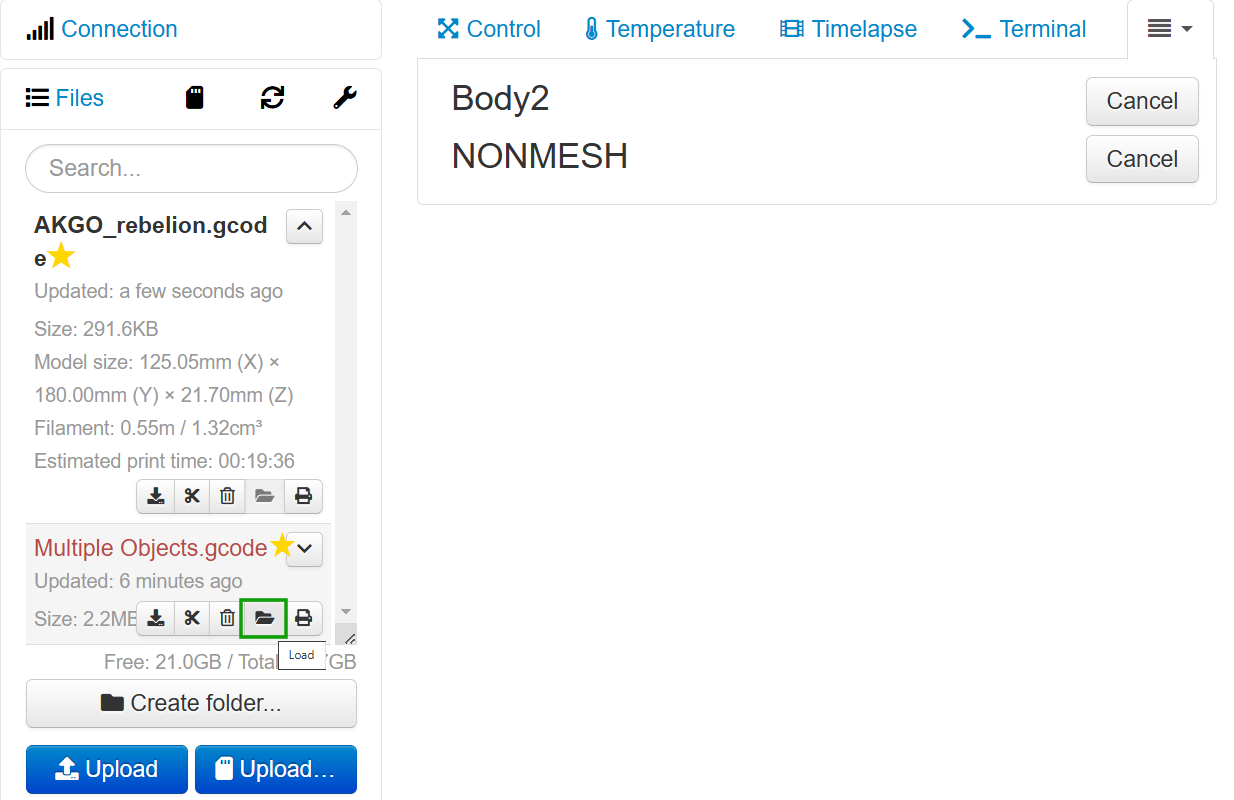

3. Load the files from the left section of the interface. They will appear in the list, each with an option to cancel it.

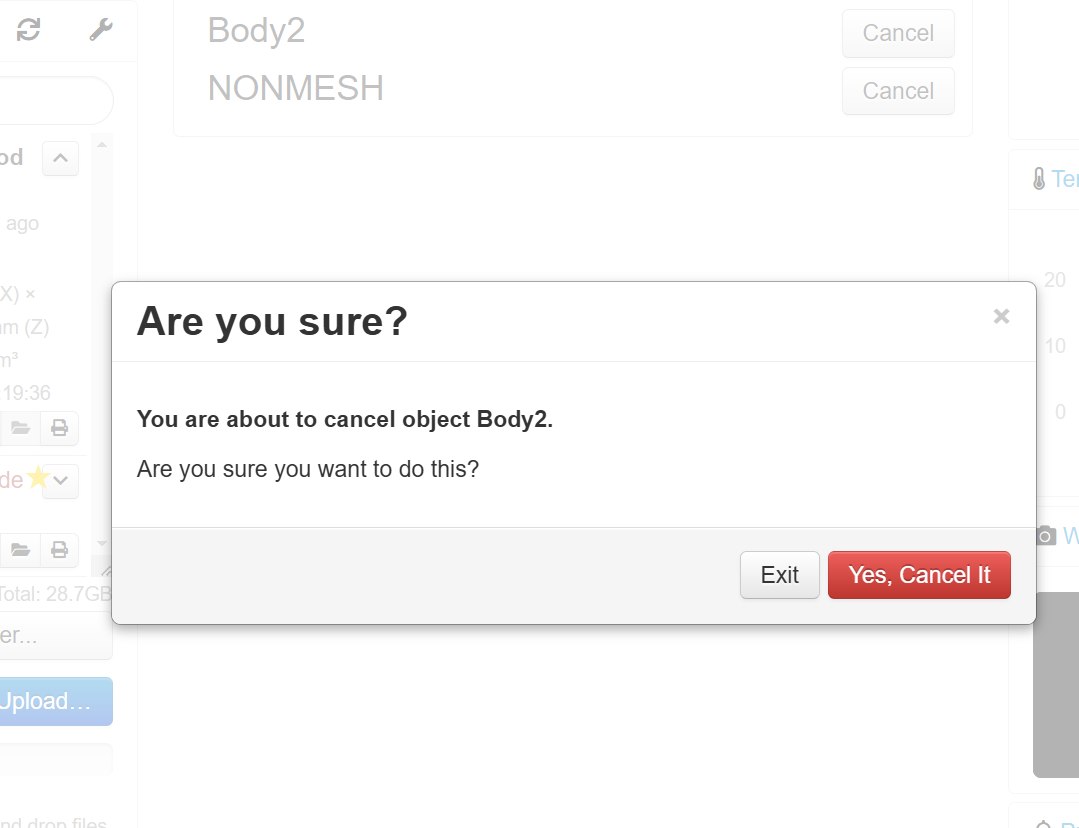

4. When you click cancel on an individual model, a window will launch, asking you to confirm the cancellation. Accept to proceed.

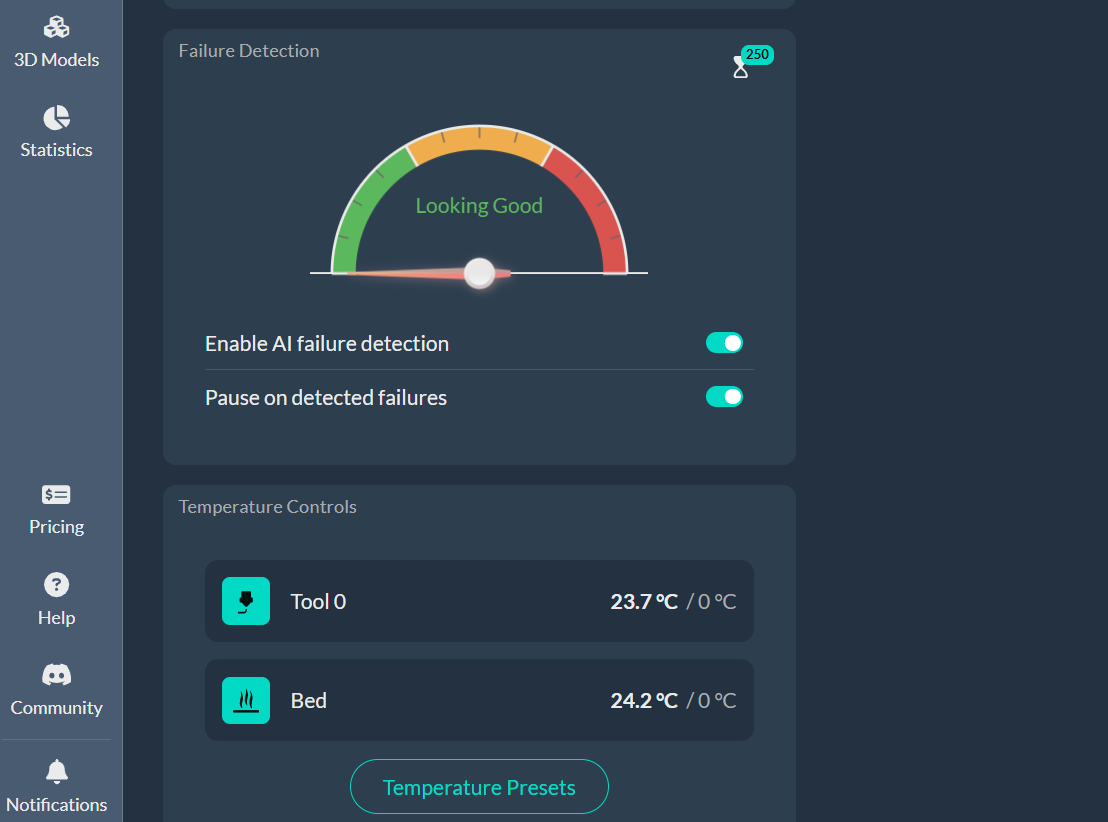

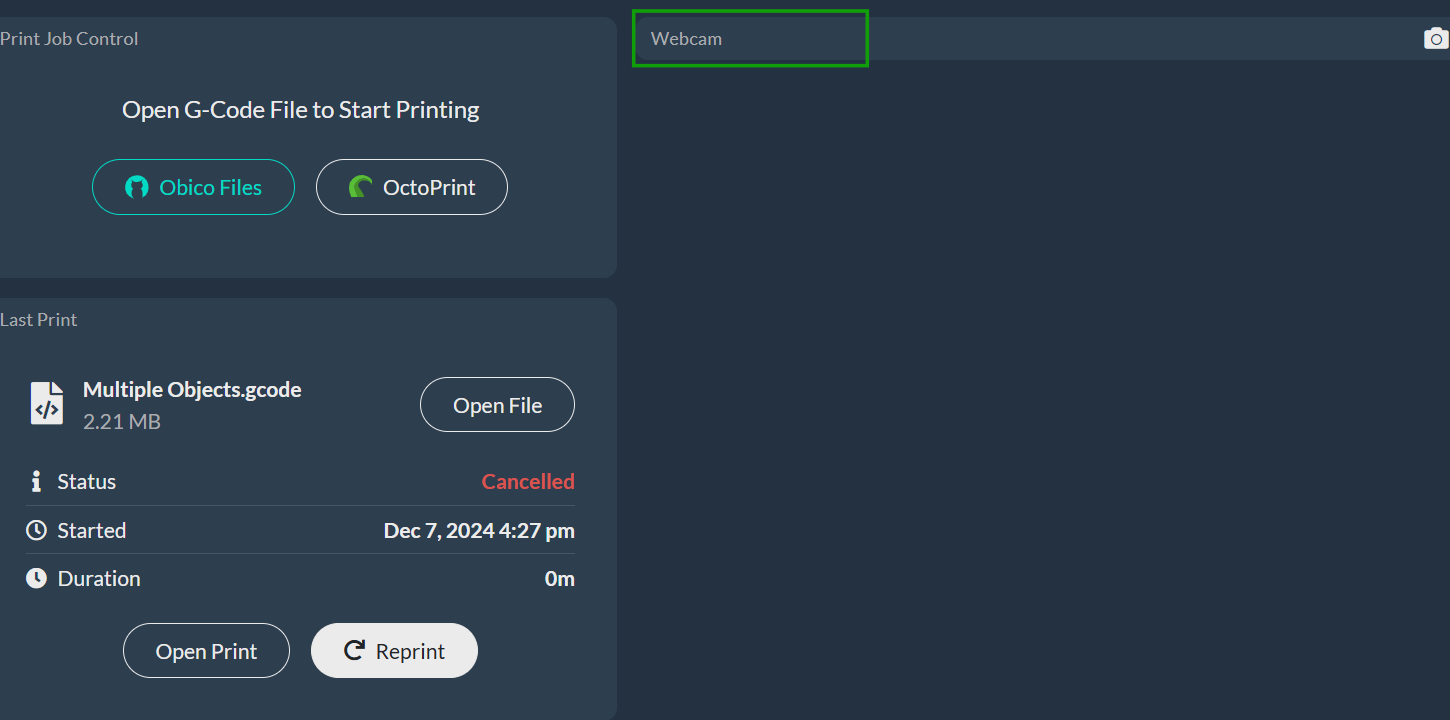
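Conceptually, cancelling one object means skipping the G-code that belongs to it while leaving everything else untouched. The Python sketch below illustrates that idea; it is not the plugin's implementation. The `; printing object NAME` and `; stop printing object NAME` comment markers are an assumption about how a slicer labels objects, and `filter_cancelled` is a hypothetical helper.

```python
from typing import Iterable, List, Set

START = "; printing object "      # assumed slicer marker that opens an object
STOP = "; stop printing object "  # assumed slicer marker that closes an object


def filter_cancelled(lines: Iterable[str], cancelled: Set[str]) -> List[str]:
    """Drop commands that fall inside a cancelled object's marked section."""
    kept: List[str] = []
    skipping = False
    for line in lines:
        text = line.strip()
        if text.startswith(START):
            # Entering an object: skip its commands if it has been cancelled.
            skipping = text[len(START):] in cancelled
            kept.append(line)
        elif text.startswith(STOP):
            # Leaving an object: resume normal output.
            skipping = False
            kept.append(line)
        elif not skipping:
            kept.append(line)
    return kept


gcode = [
    "; printing object cube",
    "G1 X10 Y10 E1.0",
    "; stop printing object cube",
    "; printing object sphere",
    "G1 X50 Y50 E1.0",
    "; stop printing object sphere",
    "G1 Z5",
]

for line in filter_cancelled(gcode, {"sphere"}):
    print(line)
```

A real implementation also has to account for details such as the extruder position after skipped moves, which this sketch ignores.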

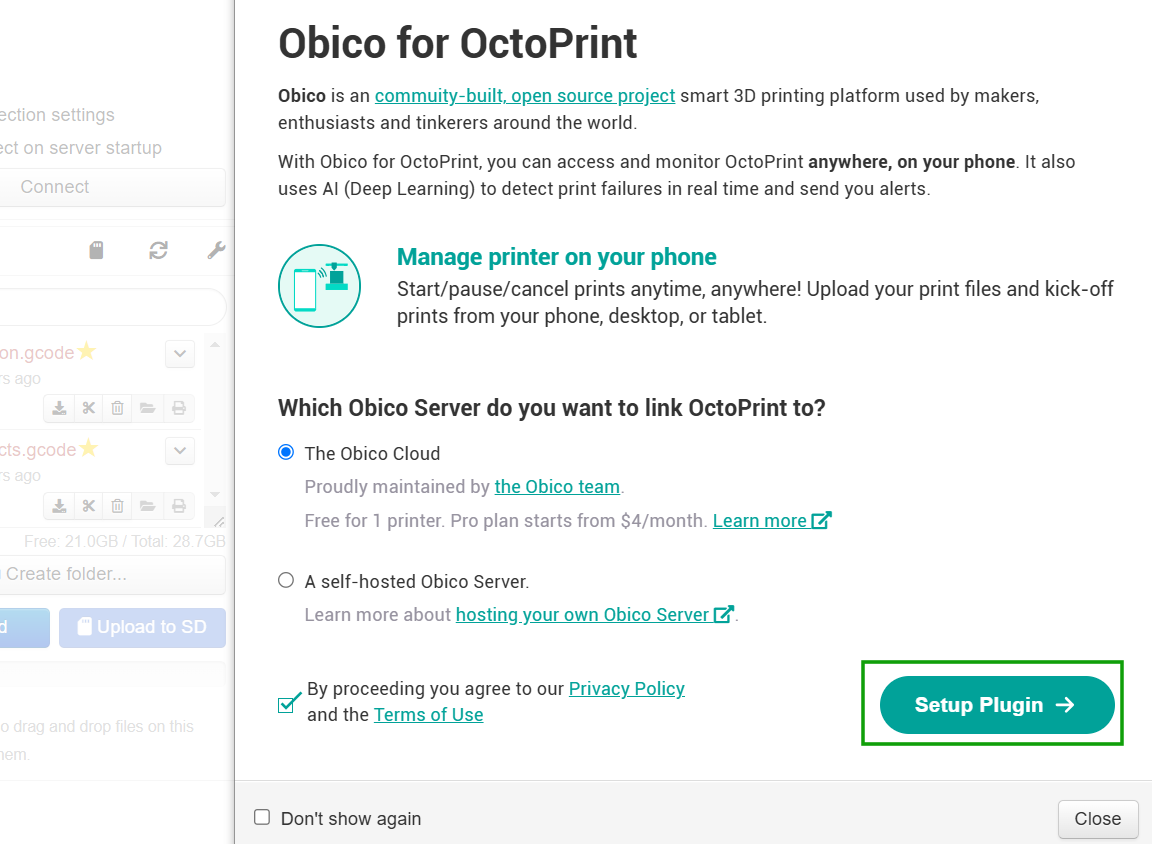

5. Obico (3D Print Failure Detector)

Obico, formerly known as The Spaghetti Detective, uses AI to detect potential print failures in real time. It monitors the progress of the print, identifies issues like spaghetti-like filament tangles, and alerts you. Obico also integrates with webcams, allowing you to visually monitor prints through your phone or computer. It also supports notifications through SMS, email, or push alerts. Get to know how to use it in the steps below.

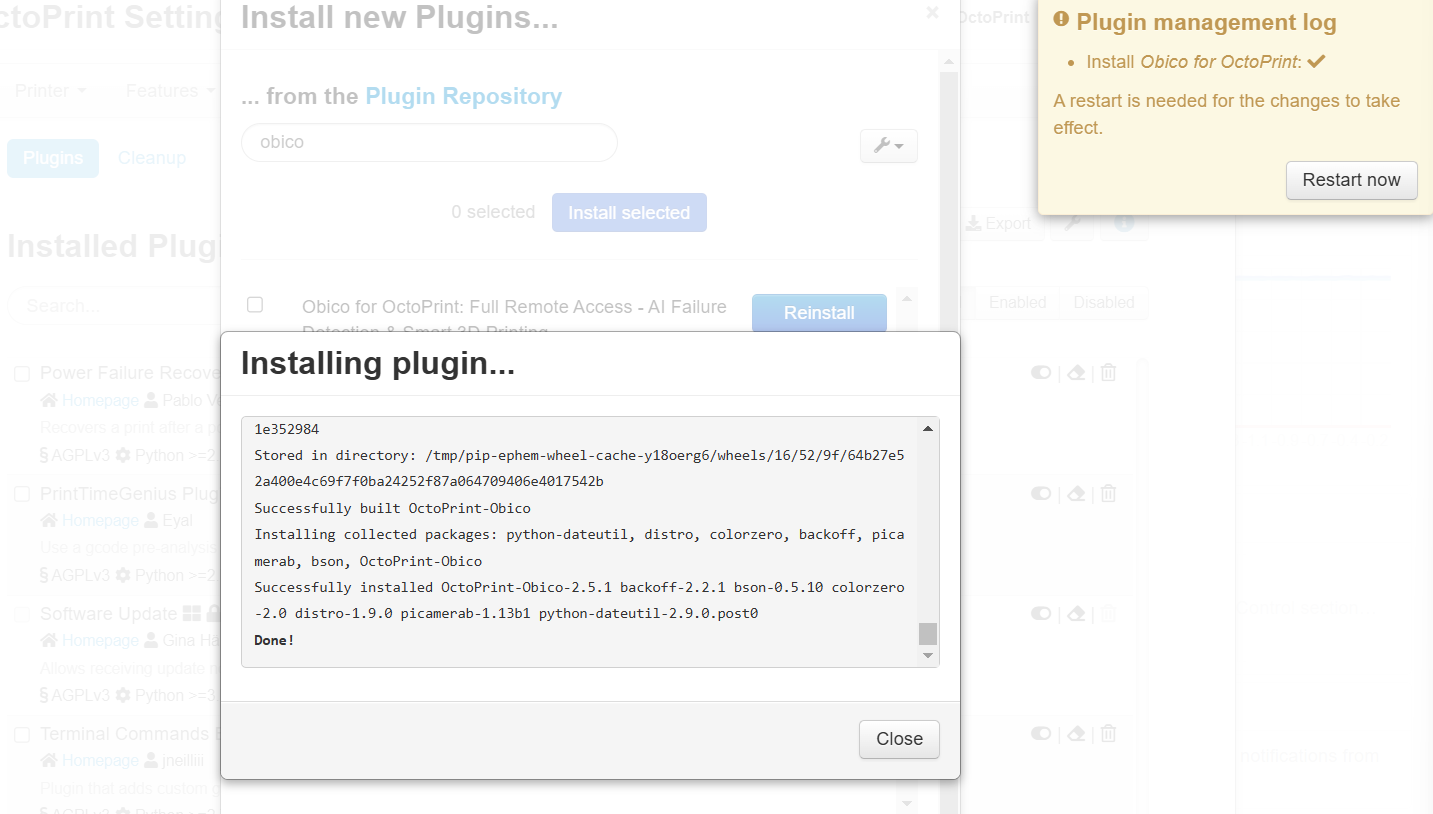

1. Go to Plugin Manager > Get More, search for Obico, and install it.

2. Restart OctoPrint, then reload the page so that the changes take effect.

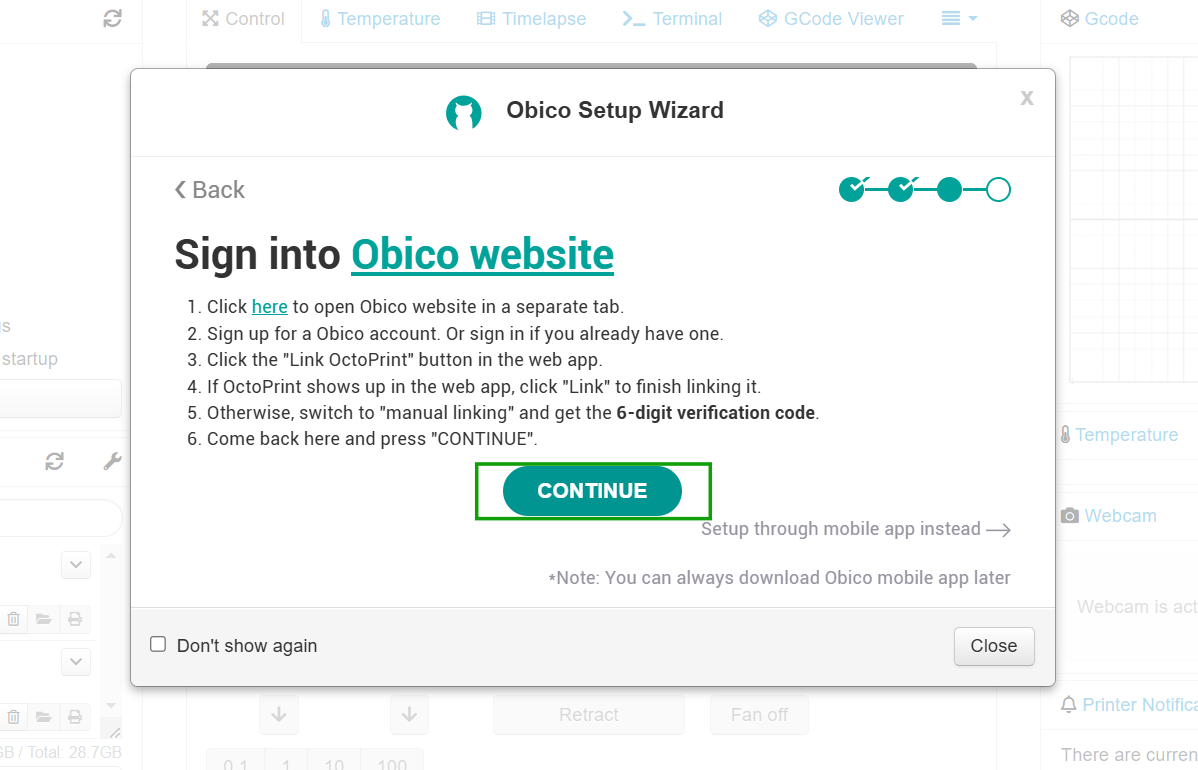

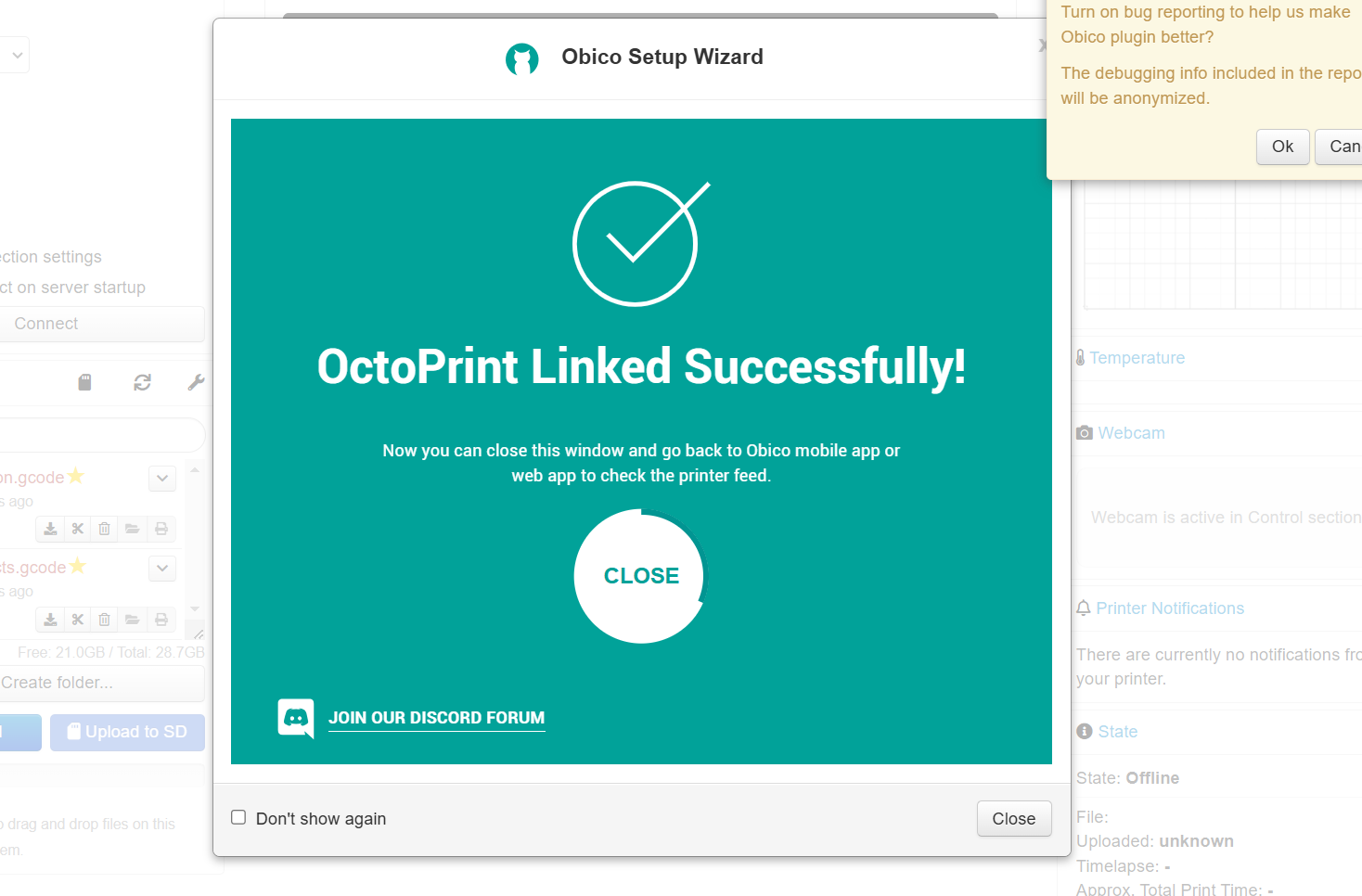

3. Click Setup Plugin to start setting up Obico.

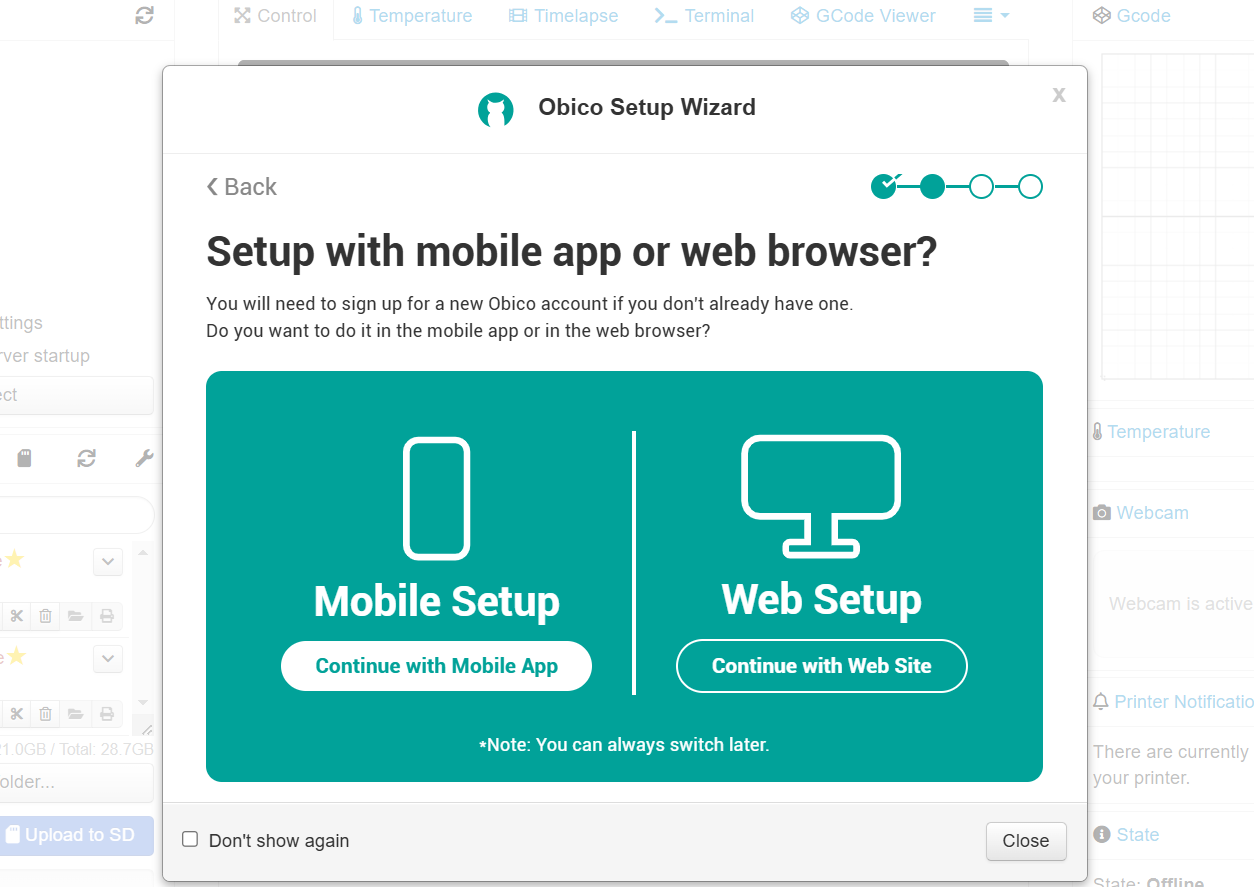

4. Choose whether to set up with the mobile app or a web browser.

In my case, I will choose the web browser.

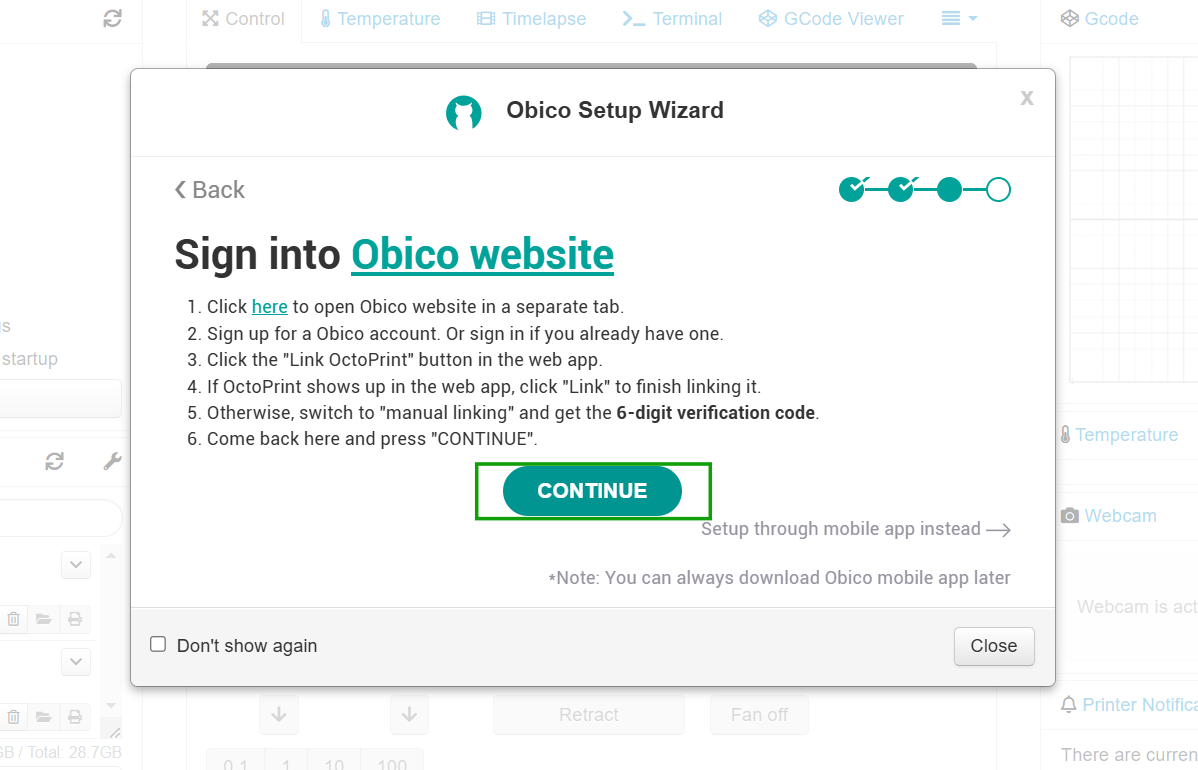

5. Continue to the Obico website and sign up for an account.

6. Link your 3D printer to Obico by clicking on Link Printer.

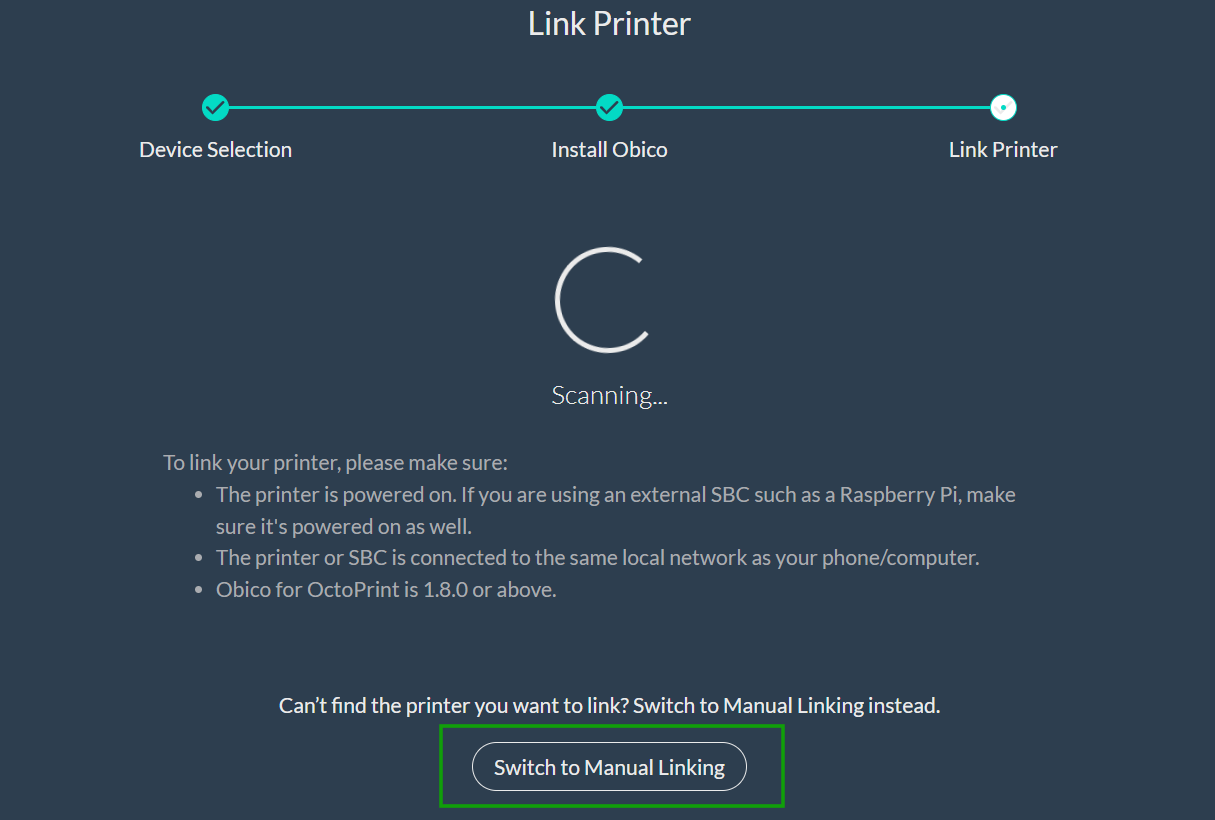

7. Select OctoPrint in the window that launches, then click Next. It will start scanning for your 3D printer. For it to find your 3D printer, the printer must be powered on, and if you are connecting it via a Raspberry Pi, the Pi must be powered on as well. You can also link it manually by clicking on Switch to Manual Linking.

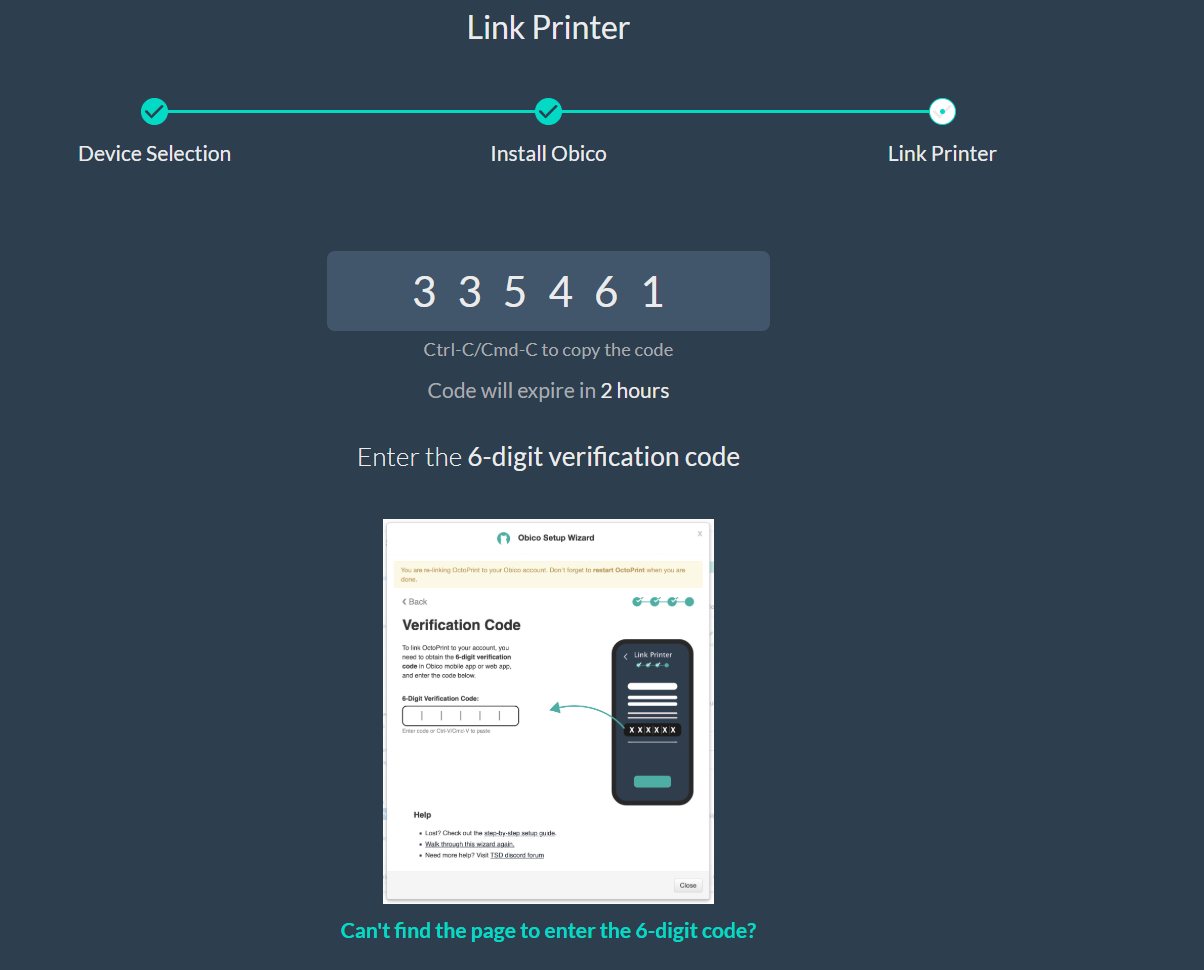

8. Copy the 6-digit verification code that will be generated.

9. Go back to the previous page in the Obico plugin in OctoPrint and click continue.

10. Paste the verification code and Obico will be set up successfully.

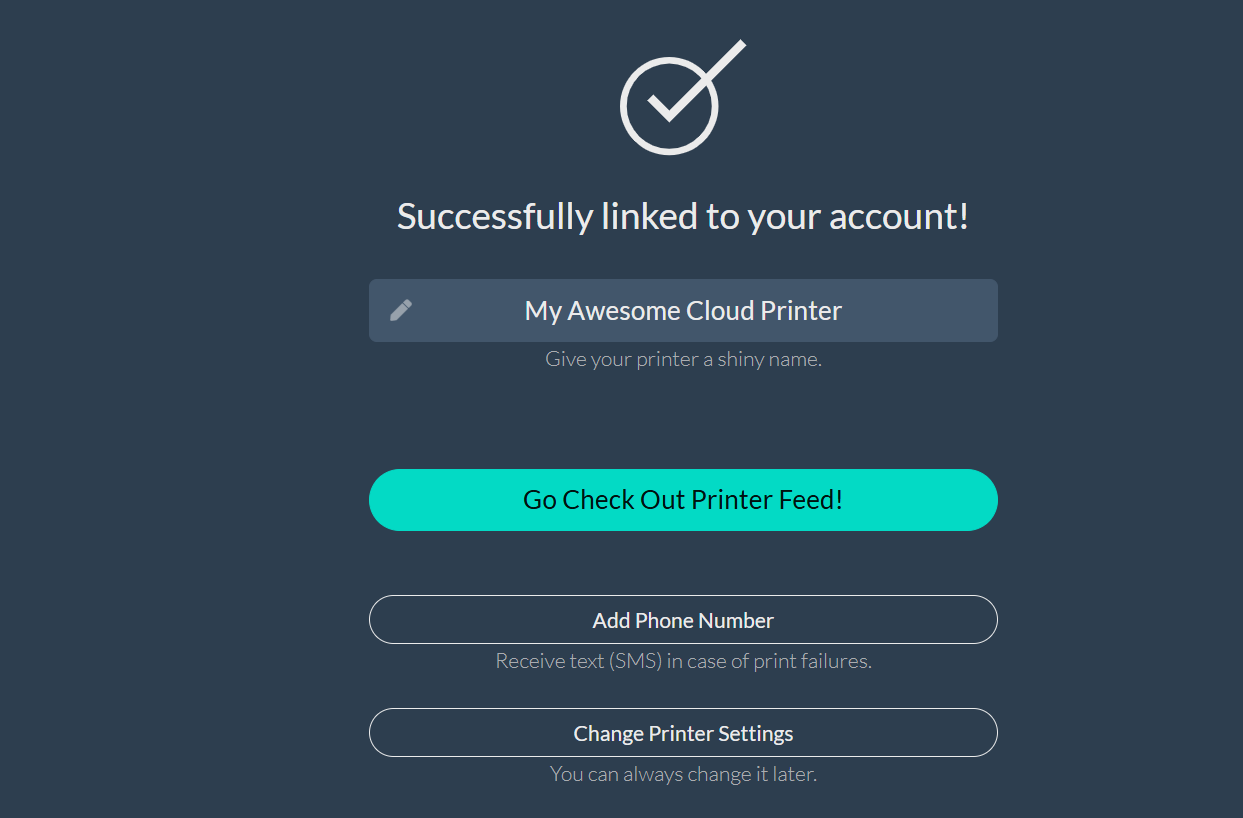

11. Go back to the Obico web application, where you can rename your 3D printer, check the 3D printer feed, add a phone number, and even change the 3D printer settings.

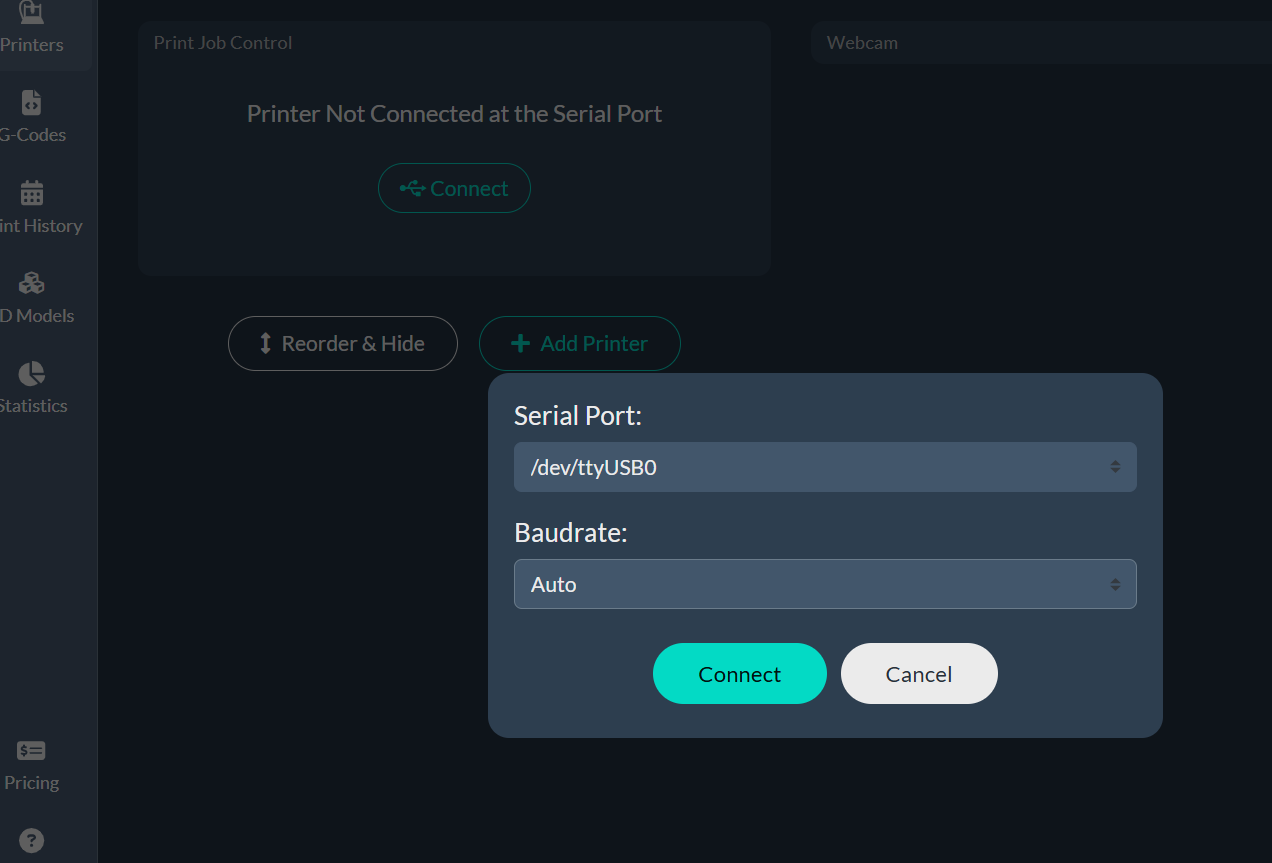

You can also go ahead and connect the 3D printer at the serial port.

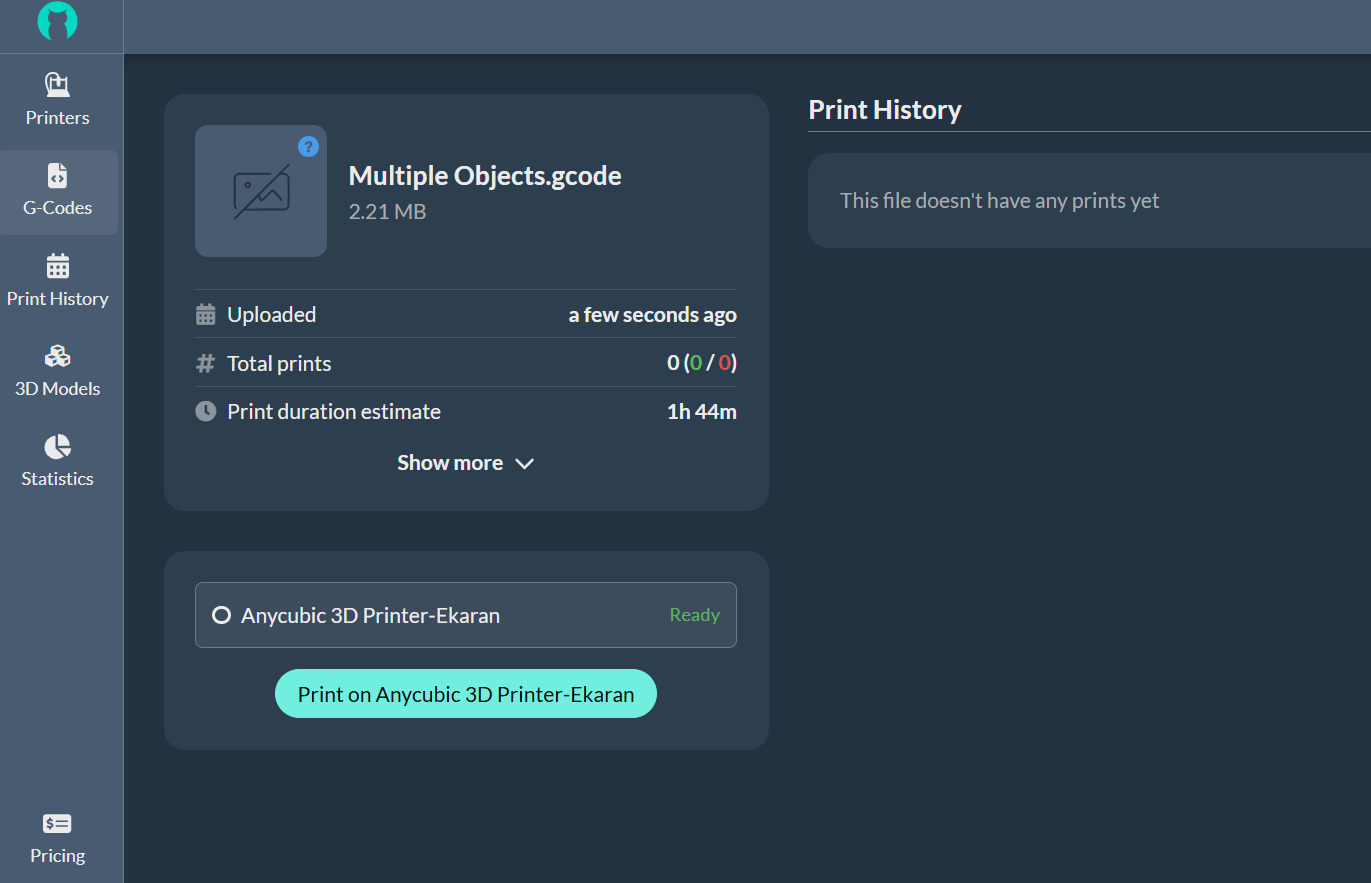

You can also upload your G-code to the platform and start 3D printing on Obico.

You can also download designs from the 3D models section, slice them on the platform, choose your 3D printer, and then confirm. You can find the 3D print failure option when you scroll down in the 3D printer section.

If you have connected the camera, you will be able to view the 3D printing process live in the right section.

When you sign up for Obico, you are given a 30-day free trial. Afterward, you will need to upgrade to the Pro version, which costs $4/month. The free version offers you basic web streaming, 10 free AI detection hours monthly, and up to 50MB of G-code cloud storage per file. The Pro version, on the other hand, gives you premium webcam streaming, 50 AI detection hours per month, and G-code cloud storage of up to 500MB per file.

US tariffs have caused a huge stir across every industry, and PC hardware is no exception. Today, we received an email from Vaio touting a tariff-free sale on their laptop inventory.

This was confirmed in the email but isn't clear on the website. In the email, Vaio assured us that this applies to the Vaio SX-R laptops, but we're unsure if it applies to other laptop series.

We are sure that this is only a temporary offer. The email stated that tariff-free pricing will only apply to the current inventory. Once this stock has been sold, tariff pricing will be applied, and we anticipate that the prices will increase notably.

Therefore, this may be your last chance to snag a brand-new Vaio SX-R laptop without the avalanche of tariffs impacting the price.

Over the last few days, we've covered some of the impending impacts of the latest tariffs. Most recently, there have been concerns that chipmaking tools will make US-made processors much more expensive to manufacture.

While exceptions have been made for semiconductor imports, the same cannot be said for wafer fabrication equipment (WFE). These uncertainties make offers like this from Vaio enticing, although fleeting.

The email from Vaio confirmed that the tariff-free pricing will definitely apply to the Vaio SX-R line. However, we aren't sure if it applies to all of the current laptop inventory, which also includes the Vaio FS series. That said, the "Shop Tariff Free" URL takes us to a page listing the Vaio SX-R and Vaio FS laptop lines.

At the very least, you can see specs for both machines on the Vaio website. The Vaio SX-R starts at $2,199 and goes up to $2,499, with the biggest difference being the storage options, which range from 1TB to 2TB. Both versions come with an Intel Core Ultra 7 155H processor, 32GB of RAM, and a 14-inch touchscreen with a resolution of 2560 x 1440px.

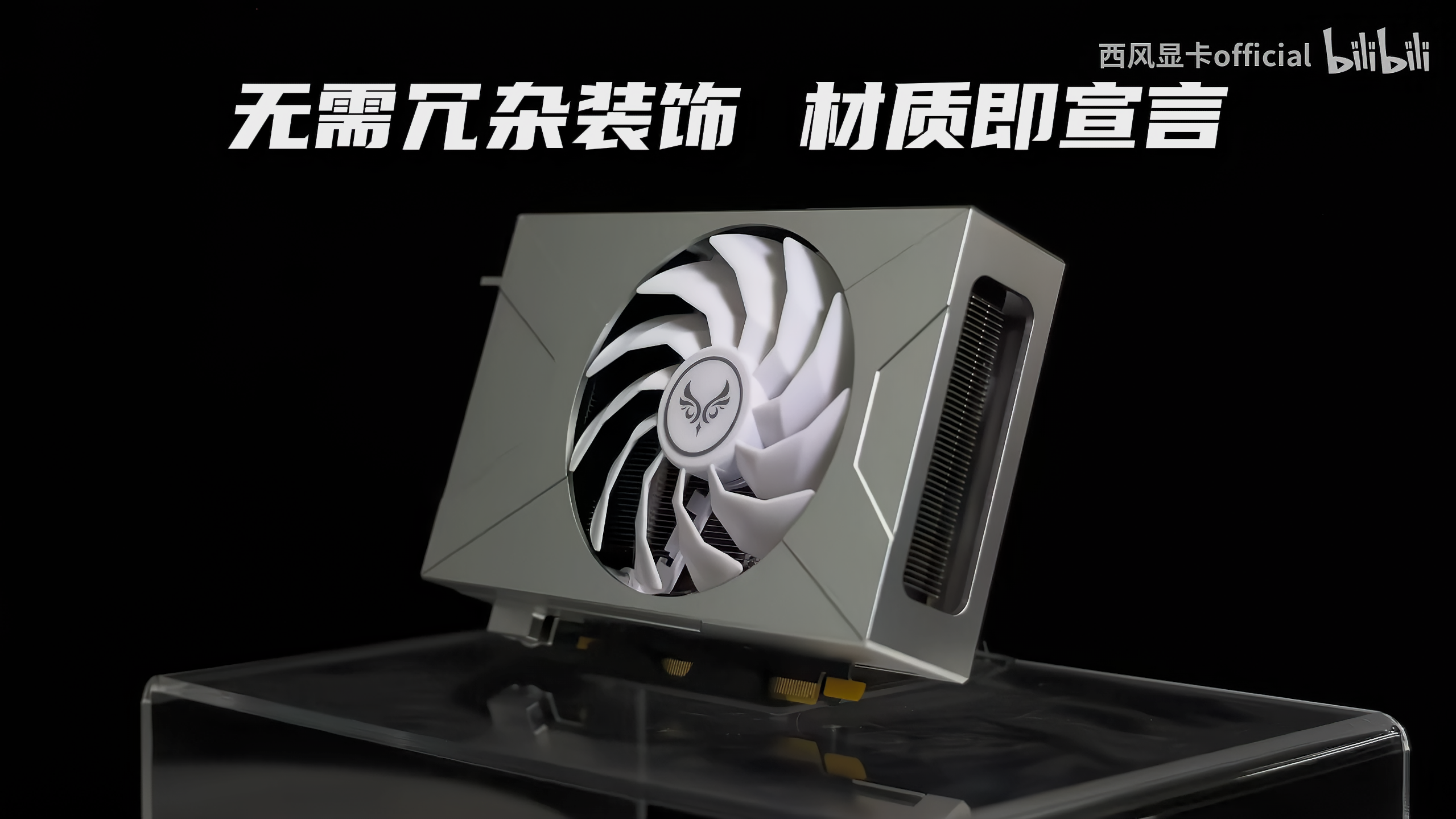

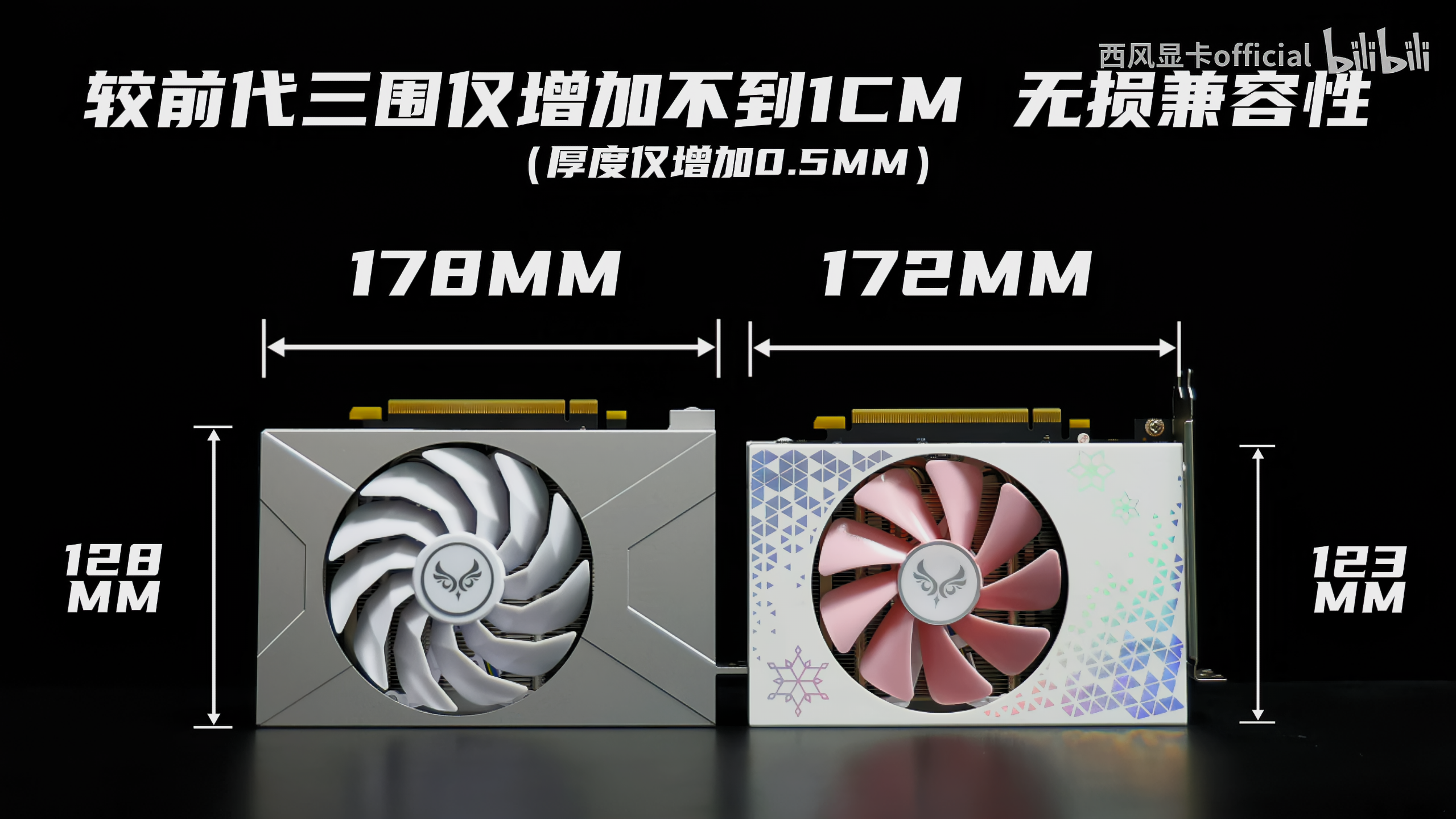

As we near the second anniversary of the RTX 4070, Zephyr has introduced the RTX 4070 Sakura Snow X edition, showcasing an exotic all-CNC-machined shroud, including an integrated I/O bracket (via Videocardz). While an RTX 5070 might seem more logical, Zephyr doesn't generally consider performance when powering its distinctive small form factor designs. Likewise, porting such a design to the RTX 5070 might not be practical from a sales standpoint, given the current GPU market.

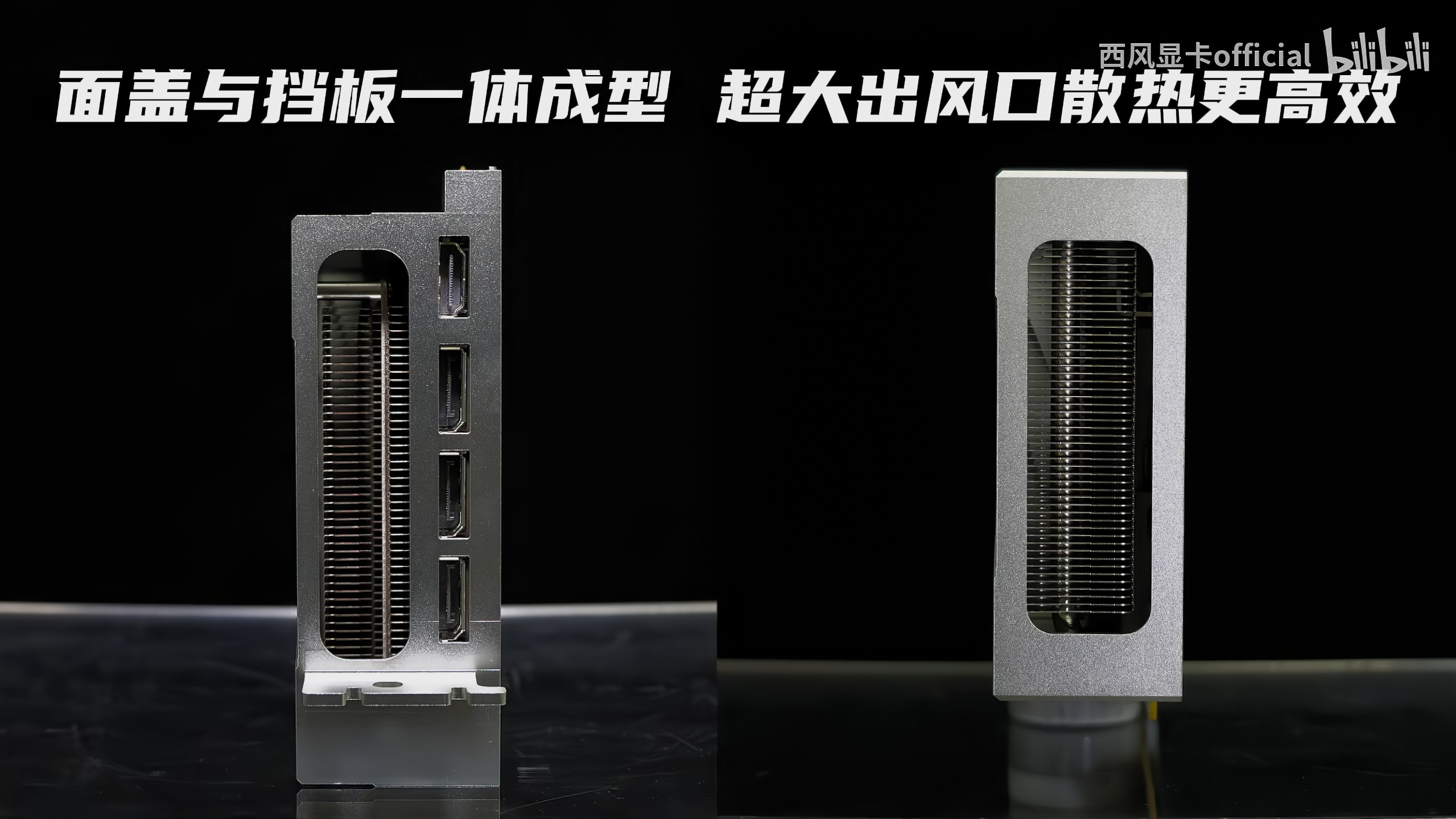

Zephyr is a relatively new and niche GPU manufacturer from China specializing in custom, compact GPUs with extraordinary designs, like the "world's first" ITX form factor RTX 4070 it announced last year. GPU shrouds are typically made of plastic, or sometimes metals like aluminum on high-end GPUs such as MSI's Suprim X models. Single-fan, mini-ITX GPUs normally forgo metal shrouds for budget reasons, but the Sakura Snow X is a unique exception.

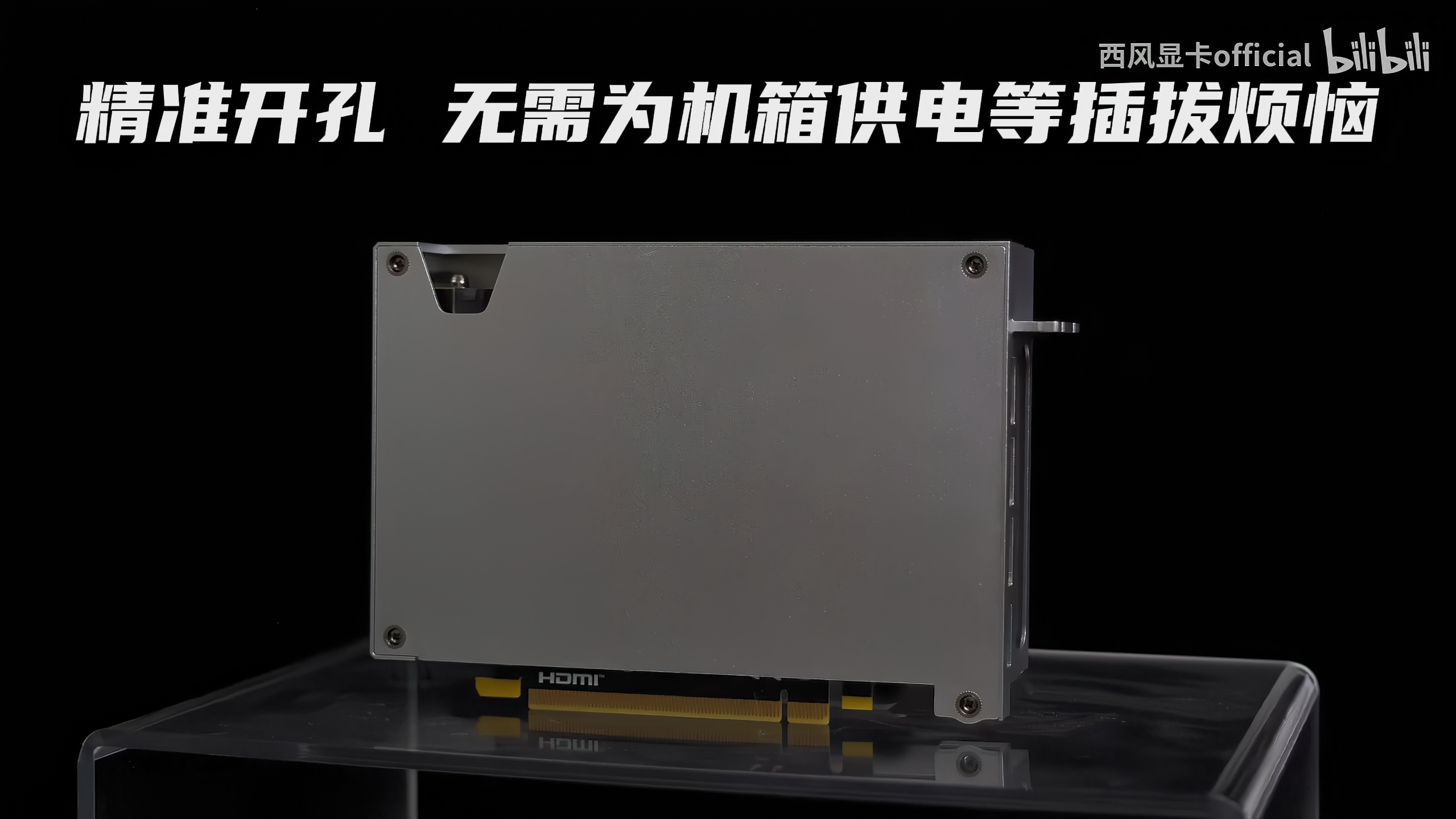

While renders show the Sakura Snow X as all-white, real-world photos indicate a more metallic grey finish instead, probably due to the lighting. The GPU offers a single-fan, dual-slot design, carrying the mini-ITX form factor, and is compatible with Nvidia's SFF guidelines. Listed at 176x127x41mm (LWH), the Sakura Snow X's dimensions don't include the bracket, so plan your build accordingly. Overall, the GPU looks pristine from every angle, almost like a clean-cut solid metal block, speaking to the precision achieved with CNC machines.

In-house testing shows that the 105mm diameter fan, coupled with the all-metal shroud, decreases GPU core temperatures by up to three degrees Celsius compared to the original Sakura edition. In terms of specifications, we're looking at an AD104-250 die with 5,888 CUDA cores and 12GB of GDDR6X memory, which is standard for all RTX 4070 GPUs. The RTX 4070 still holds up pretty well against its Blackwell successor, landing just 16% slower. The upside? You might be able to find this GPU in stock.

Despite the reduced dimensions, Zephyr still adheres to Nvidia's reference clock speeds with a 200W TGP. You can always undervolt to reduce temperatures and power consumption and even increase performance if your GPU is thermal throttling. The RTX 4070 Sakura Snow X is available through Chinese e-commerce platforms for 4,399 RMB (about $600).

3D Gloop! is finally leaving dad’s garage and getting its own pad – or rather, a 10,000 square foot warehouse with space for a new lab and storefront. After nearly seven years in business, Andrew Mayhall and Andrew Martinussen have outgrown Mayhall’s garage (and living room, crawl space, storage locker, and a few trailers). They are moving their “science sauce” production to its own facility.

3D Gloop! is a solvent-based adhesive that chemically welds together plastics like PLA, PETG, and ABS/ASA. The resulting bond is arguably the strongest you can create for 3D printed parts.

The pair began 3D Gloop! as a start-up in 2018 and donned lab coats to promote their “ludicrously” strong plastics adhesive at 3D printing festivals across the country. You may have seen them with Jephf, an automotive welding robot turned tug-of-rope warrior, at Open Sauce last year. Visitors were challenged to play tug of war with a rope held together by 3D printed parts and Gloop.

Mayhall said the business could use a little help during its next growth phase. They have covered the basics and will begin renovating the warehouse in April after they take possession. However, the local municipality where Gloop is moving threw a wrench in the works: it requires the facility to include a retail showroom.

The new space will include more than just shelves of glue. Mayhall said they are already working with local school districts to host 3D printing workshops at their new facility and inspire a whole new generation of makers.

To cover the additional costs, they are offering Gloop fans a chance to purchase a chunk of their literal foundation and become “Foundational Supporters”. Supporters will have their name permanently embedded into the showroom’s floor with Gloop’s iconic purple splatters made of copper, aluminum, and dyed concrete.

Foundational Supporters will also receive a gift of 3D Gloop and a lifetime discount. Top-tier supporters will also be able to participate in the Alpha and Beta tests for future products.

Foundational Supporters start at $250 and go up to $1,500. You can buy a limited number of splatters on the floor. Head over to 3D Gloop to check it out.

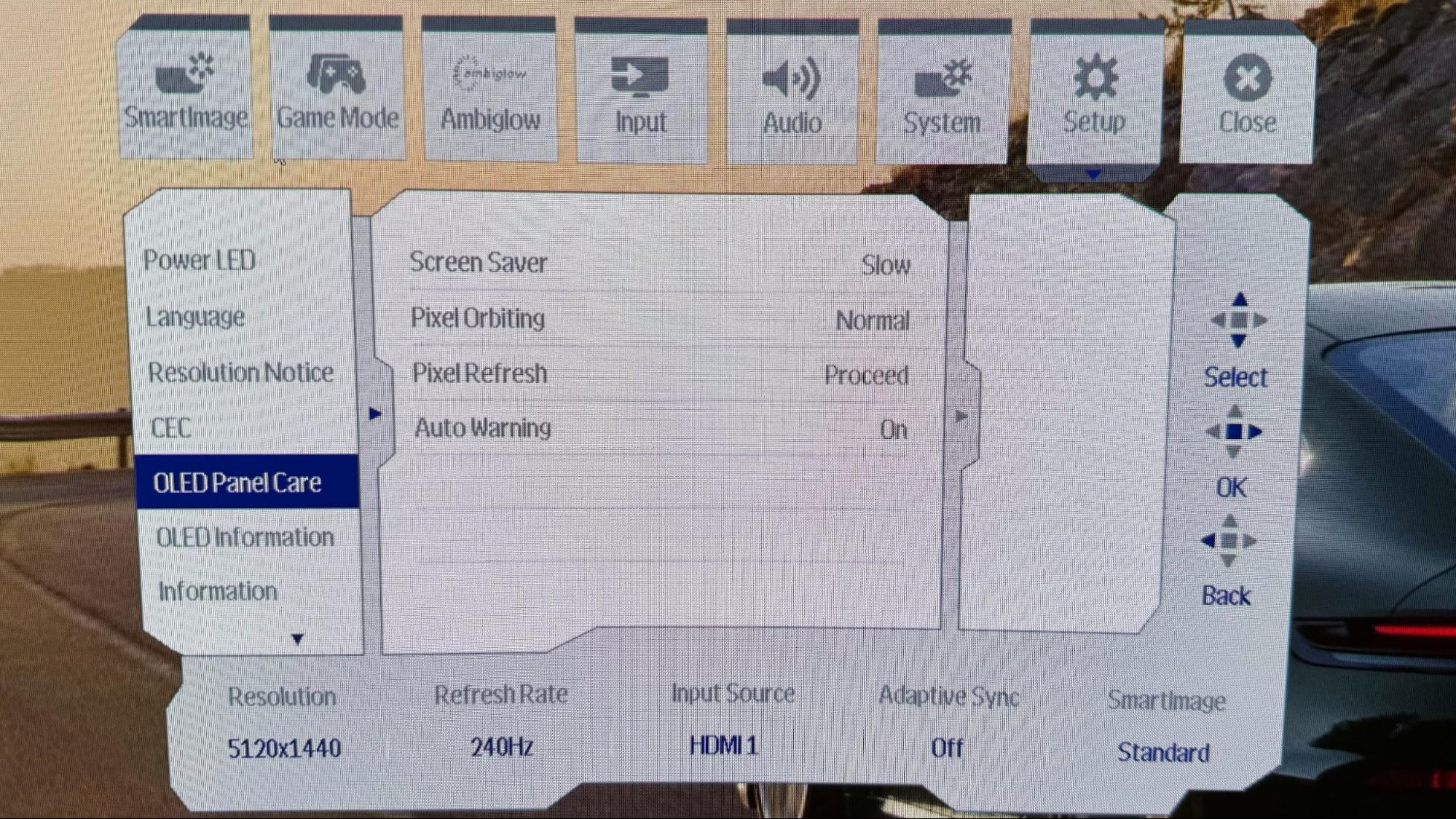

Now is one of the best times to get a top-quality gaming monitor. More specifically, the HP Omen Transcend 32-inch quantum dot OLED (QD-OLED) gaming display is available at Newegg for its lowest price ever. It's typically priced around $999, but today it's been discounted to just $759.

We reviewed the HP Omen Transcend 32 monitor last month and loved its performance, rating it 5 out of 5 stars. It oozed quality and made for a top-notch experience in every metric we tested it against. Our only con was a light suggestion for a remote control. That said, if you want to see how well this monitor stacks up against others on the market, check out our list of the best gaming monitors.

HP Omen Transcend 32-Inch QD-OLED 4K Monitor: now $759 at Newegg (was $999)

This monitor is huge, spanning 32 inches with a dense 4K UHD resolution. It's AMD FreeSync Premium Pro certified and has DisplayPort, HDMI, and even USB options for video input.

The HP Omen Transcend 32 monitor features a 31.5-inch QD-OLED panel. It has a dense 4K UHD resolution of 3840 x 2160px. The refresh rate can reach as high as 240Hz, while the response time can reach an impressively low 0.03 ms. It's AMD FreeSync Premium Pro-certified for its performance.

You get a handful of video input options, including one USB port, a DisplayPort 2.1 input, and two HDMI 2.1 ports. The screen covers 100% of the sRGB color gamut and reaches a maximum brightness of 1,000 nits. It has three USB Type-C ports alongside three USB Type-A ports. As far as audio support goes, it has built-in speakers, but you can also take advantage of its 3.5mm jack for connecting external audio peripherals.

So far, no expiration date has been specified for the discount, so we're not sure how long it will be available. For purchase options, visit the HP Omen Transcend 32-Inch QD-OLED 4K gaming monitor product page at Newegg.

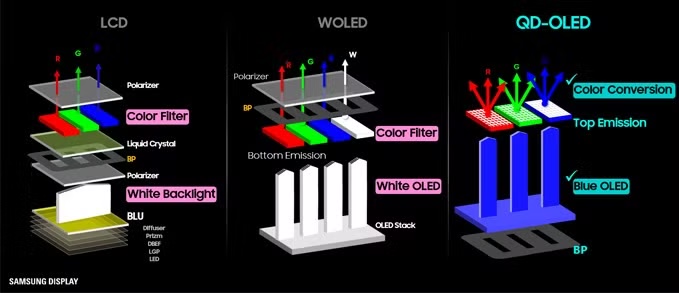

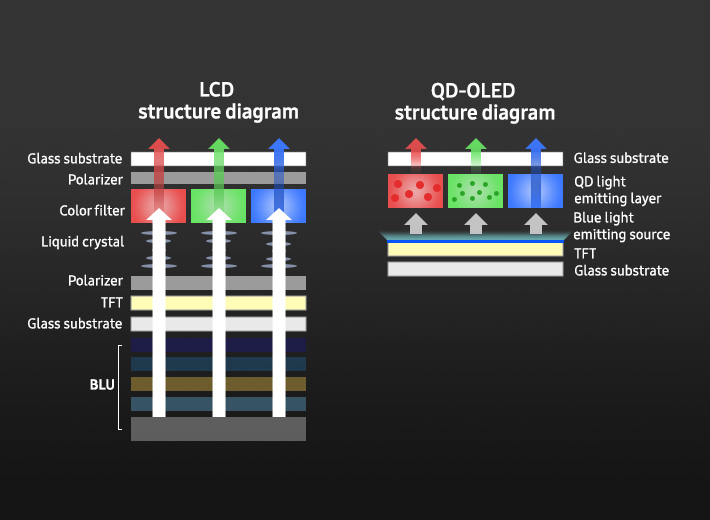

Organic light-emitting diode (OLED) technology has swept through the computing space, delivering a superior viewing experience in devices ranging from smartphones to tablets to laptops to the best OLED gaming monitors. When it comes to PC monitors, there are generally two popular options available to consumers: WOLED and QD-OLED.

WOLED Panels

WOLED stands for White OLED and has been popularized by LG. WOLEDs feature four subpixels: red, green, blue, and white. Rather than using individual red, green, and blue emitters, WOLEDs rely on a single layer that emits white light, which then passes through red, green, and blue color filters. The white subpixel doesn’t have a filter, so it lets the white light from the emitter pass through uninterrupted.

This arrangement allows WOLEDs to carry the same benefits as traditional OLEDs – namely, per-pixel control of light output resulting in incredible contrast – but it also has an added advantage. By using a single white emitter to pass through the color filters, you don’t run into a problem where individual emitters for red, green, and blue age at different rates, resulting in color shifting and burn-in.

WOLED technology doesn’t completely eliminate burn-in or image retention on monitors, but it can lessen the severity of the phenomena over time.